LLM

Joined: 27 Aug 21 · Posts: 38 · Credit: 7,254,068,306 · RAC: 0

If I am interpreting this correctly (judging from a failed task we attempted to run), this package is not included in the downloaded work unit? Analyzing the failure log, the task timed out because it could not connect to huggingface.co, which appears to host LLMs and related data. Can this data not be included with the actual work unit?

Joined: 19 Aug 07 · Posts: 46 · Credit: 45,339,082 · RAC: 0

Does LLM stand for "large language model"?

ServicEnginIC · Joined: 24 Sep 10 · Posts: 593 · Credit: 12,143,186,510 · RAC: 4,210,512

Joined: 21 Feb 20 · Posts: 1116 · Credit: 40,876,970,595 · RAC: 423,674

The download package for LLM is already huge at 8+ GB. If they included everything, it would be even larger, and it would make updating the package for small changes very cumbersome for the devs. Your problem is likely the network policies at your school. Talk with the IT team and see if they can whitelist this URL so you can download GPUGRID work.

Joined: 6 Mar 18 · Posts: 38 · Credit: 1,343,792,080 · RAC: 61,750

Not getting anything on my Windows machine with a 5090, even though the server status page reports units ready to go? Edit: it looks like it tried and failed with "NVIDIA GeForce RTX 5090 with CUDA capability sm_120 is not compatible with the current PyTorch installation." https://gpugrid.net/gpugrid/result.php?resultid=38499856 It also looks like my Linux box is failing, but I can't figure out why from the logs: https://gpugrid.net/gpugrid/result.php?resultid=33800993

Joined: 13 Dec 17 · Posts: 1423 · Credit: 9,186,946,190 · RAC: 1,288,374

Your link is to a Quantum Chemistry task from last year that everyone failed because the task was misconfigured. So what's your point?

Joined: 6 Mar 18 · Posts: 38 · Credit: 1,343,792,080 · RAC: 61,750

Ah, sorry, ignore that. The 5090 failure was an LLM unit, though.

Joined: 21 Feb 20 · Posts: 1116 · Credit: 40,876,970,595 · RAC: 423,674

I think the project is aware of this. They just need to update the PyTorch package that's included in the app.
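A quick way to see whether a host is hitting this mismatch is to compare the GPU's compute capability with the architectures the installed PyTorch build was compiled for. This is a minimal sketch assuming a local PyTorch install with CUDA; it is not the GPUGRID app's own check, the helper names `arch_tag` and `has_native_kernels` are hypothetical, and the exact-match test is a simplification of PyTorch's own compatibility logic.

```python
"""Hedged sketch: does the local PyTorch build list kernels for this GPU?"""


def arch_tag(capability):
    """Turn a (major, minor) compute capability into PyTorch's 'sm_XY' tag.

    An RTX 5090 reports capability (12, 0), i.e. 'sm_120' as in the error above.
    """
    major, minor = capability
    return f"sm_{major}{minor}"


def has_native_kernels(capability, arch_list):
    """Simplified check: True only if the build lists an exact sm_XY match."""
    return arch_tag(capability) in arch_list


if __name__ == "__main__":
    try:
        import torch  # assumption: PyTorch is installed in this environment
    except ImportError:
        print("PyTorch is not installed in this environment")
    else:
        if torch.cuda.is_available():
            cap = torch.cuda.get_device_capability(0)  # e.g. (12, 0)
            archs = torch.cuda.get_arch_list()  # architectures in this build
            print(torch.cuda.get_device_name(0), arch_tag(cap))
            print("build archs:", " ".join(archs))
            print("exact match:", has_native_kernels(cap, archs))
        else:
            print("No CUDA device visible to PyTorch")
```

If the exact match comes back False on an sm_120 card, that is consistent with the task log error, and a PyTorch build that includes sm_120 would be needed.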

ServicEnginIC · Joined: 24 Sep 10 · Posts: 593 · Credit: 12,143,186,510 · RAC: 4,210,512

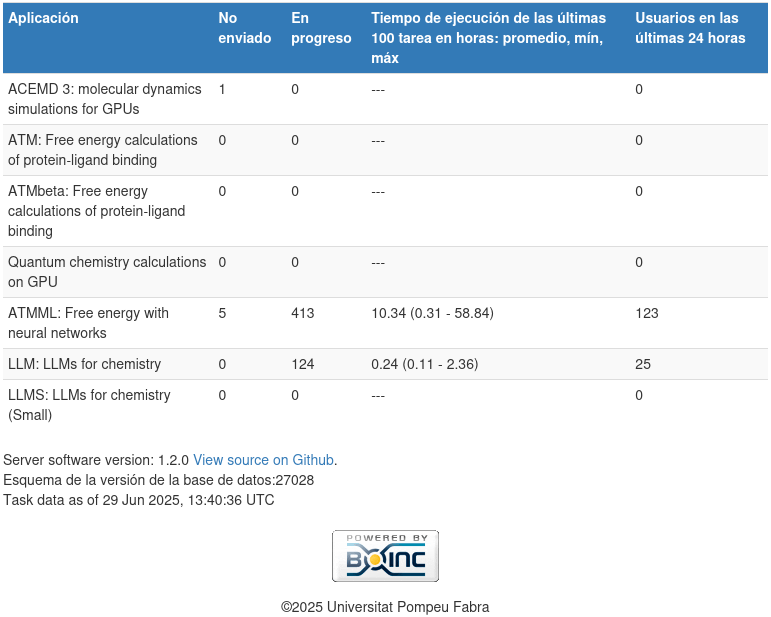

As of today, after several days of available tasks for the "ATMML" and "LLM" GPUGRID apps (screenshot taken from the Server status page): 123 users are actively contributing to ATMML tasks with one or more of their hosts. By comparison, 25 users are doing the same for LLM tasks. I guess the difference is due to the higher GPU VRAM requirement for LLM tasks, 24 GB or more. Possibly many of these 25 LLM contributors are also included in the 123 ATMML count. I'm curious how many users could contribute to LLM tasks if 16 GB of VRAM or more were required. I would be one user to add, with one of my hosts being compliant...

Joined: 28 Aug 24 · Posts: 9 · Credit: 471,821,955 · RAC: 449,793

I would potentially be an eligible user for LLM tasks too, with one of my hosts, but I fear the app has not been updated for Blackwell 2.0. I have had 5 ATMML tasks, but they all failed (except 1 that was completely unresolved).

Sabroe_SMC · Joined: 30 Aug 08 · Posts: 26 · Credit: 790,486,757 · RAC: 68,477

Could it be that the constant growth in computing time for LLM, with no growth in credit, is a rather idiotic move by the developer? Or was the whole thing intended and planned?

All of the following tasks are "LLM: LLMs for chemistry v1.01 (cuda124L)" with status "Completed and validated":

| Task | Work unit | Sent | Time reported | Run time (sec) | CPU time (sec) | Credit |
|---|---|---|---|---|---|---|
| 38535249 | 31513141 | 7 Jul 2025, 14:34:07 UTC | 7 Jul 2025, 16:32:30 UTC | 2,139.60 | 1,973.78 | 187,000.00 |
| 38535278 | 31513161 | 7 Jul 2025, 15:10:57 UTC | 7 Jul 2025, 17:08:52 UTC | 2,168.09 | 2,019.39 | 187,000.00 |
| 38535113 | 31513054 | 7 Jul 2025, 13:16:09 UTC | 7 Jul 2025, 15:11:13 UTC | 2,197.06 | 2,049.77 | 187,000.00 |
| 38535184 | 31513101 | 7 Jul 2025, 13:02:35 UTC | 7 Jul 2025, 14:34:23 UTC | 2,267.26 | 2,105.20 | 187,000.00 |
| 38535178 | 31513096 | 7 Jul 2025, 12:34:54 UTC | 7 Jul 2025, 13:16:25 UTC | 2,271.79 | 2,092.81 | 187,000.00 |
| 38535344 | 31513201 | 7 Jul 2025, 17:08:35 UTC | 7 Jul 2025, 19:07:58 UTC | 2,276.79 | 2,132.36 | 187,000.00 |
| 38535013 | 31513009 | 7 Jul 2025, 15:56:21 UTC | 7 Jul 2025, 17:48:12 UTC | 2,346.57 | 2,186.47 | 187,000.00 |
| 38535215 | 31512993 | 7 Jul 2025, 11:55:09 UTC | 7 Jul 2025, 12:35:11 UTC | 2,357.16 | 2,106.70 | 187,000.00 |
| 38535136 | 31513053 | 7 Jul 2025, 12:34:54 UTC | 7 Jul 2025, 13:56:23 UTC | 2,384.64 | 2,222.14 | 187,000.00 |
| 38535334 | 31513193 | 7 Jul 2025, 17:47:55 UTC | 7 Jul 2025, 19:48:45 UTC | 2,436.70 | 2,268.34 | 187,000.00 |
| 38535320 | 31513186 | 7 Jul 2025, 16:32:14 UTC | 7 Jul 2025, 18:29:45 UTC | 2,480.06 | 2,320.22 | 187,000.00 |
| 38535250 | 31513142 | 7 Jul 2025, 13:56:06 UTC | 7 Jul 2025, 15:56:37 UTC | 2,710.99 | 2,546.77 | 187,000.00 |
| 38535262 | 31513149 | 7 Jul 2025, 18:29:29 UTC | 7 Jul 2025, 20:38:11 UTC | 2,963.49 | 2,791.89 | 187,000.00 |
| 38535409 | 31513240 | 7 Jul 2025, 19:48:31 UTC | 8 Jul 2025, 12:31:35 UTC | 3,350.13 | 3,181.92 | 187,000.00 |
| 38535395 | 31513228 | 7 Jul 2025, 19:07:40 UTC | 8 Jul 2025, 11:35:19 UTC | 3,900.95 | 3,667.45 | 187,000.00 |
| 38535814 | 31513538 | 8 Jul 2025, 14:54:57 UTC | 8 Jul 2025, 16:03:59 UTC | 3,942.01 | 3,781.09 | 187,000.00 |
| 38535739 | 31513481 | 8 Jul 2025, 11:35:04 UTC | 8 Jul 2025, 13:39:58 UTC | 4,093.75 | 3,919.09 | 187,000.00 |
| 38535917 | 31513568 | 8 Jul 2025, 18:39:45 UTC | 8 Jul 2025, 19:51:35 UTC | 4,113.83 | 3,948.52 | 187,000.00 |
| 38535885 | 31513582 | 8 Jul 2025, 17:23:13 UTC | 8 Jul 2025, 18:36:34 UTC | 4,187.50 | 4,020.72 | 187,000.00 |
| 38535947 | 31513622 | 8 Jul 2025, 19:51:51 UTC | 8 Jul 2025, 22:19:15 UTC | 4,212.17 | 4,052.92 | 187,000.00 |
| 38535987 | 31513645 | 8 Jul 2025, 22:18:58 UTC | 8 Jul 2025, 23:34:03 UTC | 4,284.40 | 4,123.52 | 187,000.00 |
| 38535760 | 31513495 | 8 Jul 2025, 12:31:18 UTC | 8 Jul 2025, 14:51:43 UTC | 4,289.93 | 4,124.69 | 187,000.00 |
| 38535851 | 31513558 | 8 Jul 2025, 16:07:14 UTC | 8 Jul 2025, 17:23:29 UTC | 4,369.52 | 4,203.80 | 187,000.00 |
| 38535945 | 31513621 | 8 Jul 2025, 19:51:51 UTC | 8 Jul 2025, 21:08:45 UTC | 4,420.05 | 4,253.17 | 187,000.00 |
| 38535988 | 31513650 | 8 Jul 2025, 22:19:15 UTC | 9 Jul 2025, 11:04:12 UTC | 5,452.34 | 5,140.70 | 187,000.00 |
| 38536288 | 31513652 | 9 Jul 2025, 15:33:10 UTC | 9 Jul 2025, 17:13:36 UTC | 5,925.90 | 5,698.23 | 187,000.00 |
| 38536018 | 31513674 | 8 Jul 2025, 23:33:46 UTC | 9 Jul 2025, 13:11:58 UTC | 5,987.65 | 5,728.09 | 187,000.00 |
| 38536324 | 31513894 | 9 Jul 2025, 17:14:07 UTC | 9 Jul 2025, 20:39:19 UTC | 6,036.94 | 5,814.98 | 187,000.00 |
| 38536214 | 31513823 | 9 Jul 2025, 11:31:45 UTC | 9 Jul 2025, 14:53:05 UTC | 6,043.68 | 5,813.52 | 187,000.00 |
| 38536323 | 31513893 | 9 Jul 2025, 17:13:20 UTC | 9 Jul 2025, 18:58:19 UTC | 6,088.55 | 5,857.84 | 187,000.00 |
| 38536379 | 31513661 | 9 Jul 2025, 20:42:31 UTC | 9 Jul 2025, 22:36:04 UTC | 6,607.62 | 6,370.31 | 187,000.00 |
| 38536405 | 31513946 | 9 Jul 2025, 22:35:47 UTC | 10 Jul 2025, 0:34:32 UTC | 6,903.19 | 6,658.91 | 187,000.00 |
| 38536448 | 31513979 | 10 Jul 2025, 2:14:08 UTC | 10 Jul 2025, 4:18:34 UTC | 7,433.25 | 7,164.05 | 187,000.00 |
| 38536496 | 31514023 | 10 Jul 2025, 5:51:04 UTC | 10 Jul 2025, 8:07:30 UTC | 8,149.68 | 7,852.95 | 187,000.00 |
| 38536524 | 31513913 | 10 Jul 2025, 8:15:16 UTC | 10 Jul 2025, 10:54:14 UTC | 9,500.91 | 9,167.94 | 187,000.00 |
| 38536526 | 31513920 | 10 Jul 2025, 8:15:16 UTC | 10 Jul 2025, 13:37:30 UTC | 9,780.50 | 9,436.34 | 187,000.00 |
| 38536525 | 31513919 | 10 Jul 2025, 8:15:16 UTC | 10 Jul 2025, 16:29:40 UTC | 10,315.16 | 9,961.91 | 187,000.00 |
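The effect of a fixed per-task credit can be checked with simple arithmetic: the hourly rate is credit × 3600 / run time, so it falls in inverse proportion as run times grow. This sketch applies that formula to the shortest (2,139.60 s) and longest (10,315.16 s) run times in the task list above, using wall-clock run time rather than CPU time; the helper name `credits_per_hour` is only illustrative.

```python
CREDIT_PER_TASK = 187_000.0  # fixed credit per LLM v1.01 task, from the task list

# Shortest and longest run times (seconds) in the task list, 7 Jul vs 10 Jul 2025
RUN_TIMES_S = {"shortest": 2_139.60, "longest": 10_315.16}


def credits_per_hour(credit, run_time_s):
    """Convert a fixed per-task credit into an hourly rate for one GPU."""
    return credit * 3600.0 / run_time_s


if __name__ == "__main__":
    for label, seconds in RUN_TIMES_S.items():
        rate = credits_per_hour(CREDIT_PER_TASK, seconds)
        print(f"{label:>8}: {seconds:>9,.2f} s -> {rate:>9,.0f} credits/hour")
    # Same credit, longer run: the hourly rate shrinks by the run-time ratio
    ratio = RUN_TIMES_S["longest"] / RUN_TIMES_S["shortest"]
    print(f"hourly rate dropped by a factor of about {ratio:.1f}")
```

That works out to roughly 314,600 credits per hour for the shortest task versus about 65,300 for the longest, close to a factor of five lower for the same 187,000 credit.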

Joined: 29 Aug 24 · Posts: 71 · Credit: 3,330,790,989 · RAC: 113,338

Just looking at averages from the server page, ATMML earns 121,500 credits per hour (all GPUs) and LLM earns 111,900 (premium GPUs). That doesn't motivate me to get a premium card, or to run LLM if I had one.

Sabroe_SMC · Joined: 30 Aug 08 · Posts: 26 · Credit: 790,486,757 · RAC: 68,477

LOL, you can completely forget about the server-side values. They're days behind the real-world values. Number 5 in the GPU Users Group, GWGeorge007, ran an Nvidia RTX 3090 on LLM from July 7th to July 9th, 2025. The run times there increased from just over 6,000 seconds to more than 18,000 seconds, all within two days. How is this supposed to continue?

Joined: 21 Feb 20 · Posts: 1116 · Credit: 40,876,970,595 · RAC: 423,674

This project, for the most part, operates on static credit per task, not credit per unit time. There should be no expectation that the amount of credit earned per unit of time will be standardized.

Joined: 29 Aug 24 · Posts: 71 · Credit: 3,330,790,989 · RAC: 113,338

I was just giving some basic evidence in agreement with Sabroe. I, for one, don't have any expectations. It's quite a mixed bag across the projects.

©2026 Universitat Pompeu Fabra