ATM


| Author | Message |

|---|---|

|

Joined: 18 Jul 13 Posts: 79 Credit: 210,528,292 RAC: 0

|

When will ATM be unsuspended? |

|

Joined: 18 Jul 13 Posts: 79 Credit: 210,528,292 RAC: 0

|

"Good evening, only on one of my PCs with Windows 11, i7-13700KF and RTX 2080 Ti, none of the GPUGRID ATMbeta tasks (CUDA 1121) can be processed. By now more than a hundred have ended after a few tens of seconds. Other tasks (for example based on CUDA 1131) are also processed on this PC and without any problems. I have no idea what could be causing it so I do not know how to fix it. Thanks in advance to anyone who can help me solve the problem."

I just had a theory that cmd could fail because both you and I had set the default command processor to Windows Terminal instead of Console Window Host. Unfortunately I can't test it because there are no more ATM tasks. |

|

Joined: 18 Jul 13 Posts: 79 Credit: 210,528,292 RAC: 0

|

Didn't help. |

|

Joined: 28 Mar 09 Posts: 490 Credit: 11,731,645,728 RAC: 57

|

"Didn't help."

It could be a hardware problem (processor, RAM, etc.), not software. I have 2 computers crunching here. One is a Core 7 Intel with 32 GB of RAM, and it completes both ACEMDs and ATMbetas successfully. https://www.gpugrid.net/results.php?hostid=608721

The other is an AMD Phenom II with 16 GB of RAM, and it completes ACEMDs successfully, while ATMbetas error out. (I can't put any more RAM on this MB.) https://www.gpugrid.net/results.php?hostid=607570

They both have the same OS. |

|

Joined: 18 Jul 13 Posts: 79 Credit: 210,528,292 RAC: 0

|

In my case it crashes instantly on Wrapper: running C:/Windows/system32/cmd.exe (/c call run.bat) |

|

Joined: 28 Mar 09 Posts: 490 Credit: 11,731,645,728 RAC: 57

|

These units still crash when shut down and then restarted. The progress bar goes to 100% done after a few minutes, when you get to the subsequent units in the thread. Looks like nothing has been updated. |

|

Joined: 11 May 10 Posts: 68 Credit: 12,293,491,875 RAC: 2,606

|

ATM Beta still crashes after 40 seconds on an RTX 4080. |

|

Joined: 1 Jan 15 Posts: 1166 Credit: 12,260,898,501 RAC: 1

|

After some time, today I resumed crunching ATM tasks. However, I notice a strange behaviour of BOINC when trying to download a second task per GPU: when pushing the "update" button, no second task will download (although plenty of them are available), and the event log says:

17.07.2023 20:00:19 | GPUGRID | Requesting new tasks for NVIDIA GPU
17.07.2023 20:00:21 | GPUGRID | Scheduler request completed: got 0 new tasks
17.07.2023 20:00:21 | GPUGRID | No tasks sent
17.07.2023 20:00:21 | GPUGRID | No tasks are available for ATM: Free energy calculations of protein-ligand binding
17.07.2023 20:00:21 | GPUGRID | Tasks won't finish in time: BOINC runs 96.7% of the time; computation is enabled 100.0% of that

I had downloaded hundreds of ATM tasks before on this system, and I could always download a second one, which stayed in "waiting position" until the first one finished. I have never seen this kind of statement before. Can anyone tell me what's wrong, and what I can do to get a second task downloaded? |

|

Joined: 21 Feb 20 Posts: 1116 Credit: 40,839,470,595 RAC: 5,269

|

the estimated time to completion is too long, so BOINC thinks they won't finish by their listed 5-day deadline. that's why. you can try editing the DCF (duration correction factor) in the client state file manually, or just wait for it to adjust itself.

|
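For readers who want to look at the DCF before deciding whether to edit it, here is a minimal sketch that reads the value for one project from the text of a client state file. It assumes the file is XML with one `<project>` element per project containing `<master_url>` and `<duration_correction_factor>`; check these names against your own `client_state.xml`. The function name `read_dcf` and the sample value are hypothetical, and the BOINC client should be stopped before the file is edited by hand, since it rewrites the file while running.

```python
import xml.etree.ElementTree as ET
from typing import Optional


def read_dcf(client_state_xml: str, url_fragment: str) -> Optional[float]:
    """Return the duration correction factor of the first project whose
    master URL contains url_fragment, or None if it cannot be found.

    Assumes <project> elements with <master_url> and
    <duration_correction_factor> children (verify against your own file).
    """
    root = ET.fromstring(client_state_xml)
    for project in root.iter("project"):
        url = project.findtext("master_url", default="")
        if url_fragment in url:
            dcf = project.findtext("duration_correction_factor")
            return float(dcf) if dcf is not None else None
    return None


# Invented sample data for illustration only, not taken from a real host.
SAMPLE = """<client_state>
  <project>
    <master_url>https://www.gpugrid.net/</master_url>
    <duration_correction_factor>42.500000</duration_correction_factor>
  </project>
</client_state>"""

print(read_dcf(SAMPLE, "gpugrid"))
```

A DCF far above 1 would inflate every runtime estimate for that project, which is consistent with the very long initial estimates mentioned in this thread.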

|

Joined: 1 Jan 15 Posts: 1166 Credit: 12,260,898,501 RAC: 1

|

"the estimate time to completion is too long. so BOINC thinks they wont finish by their listed 5 day deadline. ..."

That's what I suspected first (I had that before on another machine), but then I took a look at the times, and surprise: right now, a task has been running for 2:32 hrs, and the indicated completion time is 34:50 minutes(!). So the problem must be somewhere else :-( |

|

Joined: 21 Feb 20 Posts: 1116 Credit: 40,839,470,595 RAC: 5,269

|

"the estimate time to completion is too long. so BOINC thinks they wont finish by their listed 5 day deadline. ..."

it has to do with the estimated completion time of the task it's trying to download + the tasks you have, not just the tasks you have. a brand new task might say it will take 90hrs to finish. you have 34hrs remaining on your work. so it thinks it would be 5.1 days before the new task would finish, so it decides to not download any.

|
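The arithmetic in the reply above can be sketched as follows. This is a deliberately simplified model, not BOINC's actual scheduling logic: it adds the remaining time of the queued work to the estimate for the new task, optionally stretches the total by the fraction of time BOINC is running (the 96.7% from the event log), and compares the result with the deadline. The function name `fits_deadline` and its parameters are hypothetical.

```python
def fits_deadline(queued_hours: float, new_task_hours: float,
                  deadline_days: float, on_fraction: float = 1.0):
    """Return (projected_days, fits) for a new task queued behind existing work.

    Simplified assumption: tasks run one after another, and wall-clock time
    is the estimated compute time divided by the fraction of time BOINC runs.
    """
    wall_hours = (queued_hours + new_task_hours) / on_fraction
    projected_days = wall_hours / 24.0
    return projected_days, projected_days <= deadline_days


# Example figures from the post: 34 h of queued work, a 90 h estimate, 5-day deadline.
days, ok = fits_deadline(34, 90, 5)
print(f"{days:.2f} days, fits: {ok}")

# Same figures with BOINC running 96.7% of the time, as in the event log.
days, ok = fits_deadline(34, 90, 5, on_fraction=0.967)
print(f"{days:.2f} days, fits: {ok}")
```

Under this model a new task whose own initial estimate already exceeds the deadline is rejected no matter how little work is queued, which matches the point made later in the thread.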

|

Joined: 1 Jan 15 Posts: 1166 Credit: 12,260,898,501 RAC: 1

|

"... you have 34hrs remaining on your work. ..."

NOT 34hrs, but 34 minutes! |

|

Joined: 21 Feb 20 Posts: 1116 Credit: 40,839,470,595 RAC: 5,269

|

"... you have 34hrs remaining on your work. ..."

that's inconsequential, it was just an example. the point was that it depends mostly on the time estimate of the task to be downloaded, which could already be in excess of 5 days, and then you're in the same situation. several of mine show initial estimates like 200+ days.

|

ServicEnginIC Joined: 24 Sep 10 Posts: 592 Credit: 11,972,186,510 RAC: 1,187

|

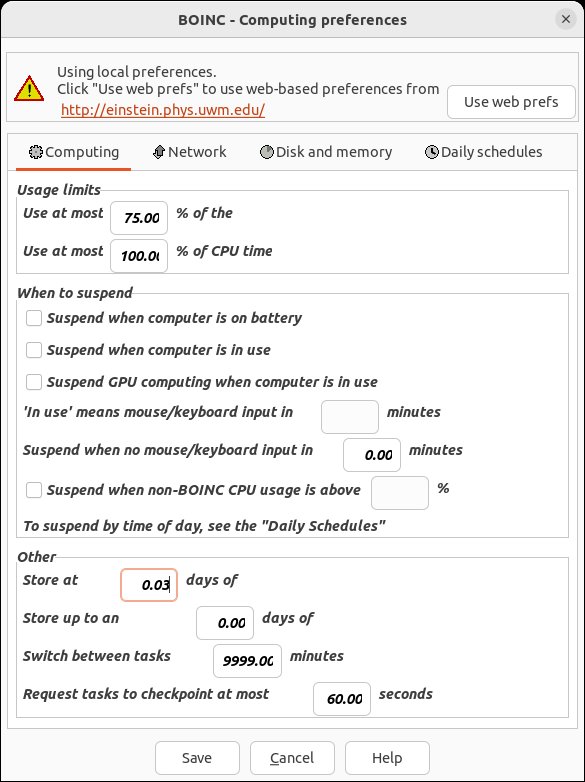

"right now, a task has been running for 2:32 hrs, indicated completion time: 34:50 minutes(!)."

When trying to download a second task, set the "Store at least X days of work" parameter in BOINC's local preferences to a value only slightly above the remaining calculated time for the task in progress. In your example, with about 34 minutes remaining, try setting "Store at least X days of work" to 0.03 days (about 43 minutes), and set "Store up to an additional X days of work" to 0.00. |
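The conversion behind the 0.03-day suggestion can be checked with a short sketch. It assumes the preference is entered in days with two decimal places, so the remaining time is rounded up to the next hundredth of a day; the function name `min_buffer_days` is hypothetical.

```python
import math


def min_buffer_days(remaining_minutes: float, decimals: int = 2) -> float:
    """Smallest value in days, at the given number of decimals, that is
    at least as long as remaining_minutes (assumed input granularity)."""
    days = remaining_minutes / (24 * 60)
    step = 10 ** decimals
    return math.ceil(days * step) / step


remaining = 34 + 50 / 60          # 34:50 minutes, as reported in the thread
buffer_days = min_buffer_days(remaining)
print(buffer_days, "days is about", round(buffer_days * 24 * 60, 1), "minutes")
```

With 34:50 remaining this gives 0.03 days, which is a little over 43 minutes and agrees with the figures in the post.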

|

Joined: 1 Jan 15 Posts: 1166 Credit: 12,260,898,501 RAC: 1

|

@ServicEnginIC, thanks for your hints. However, in the end I did not need to do anything: all of a sudden, two tasks per GPU got downloaded :-) |

|

Joined: 28 Feb 23 Posts: 35 Credit: 0 RAC: 0 |

Sorry for being away for a while. We were testing ATM in a setup not available for GPUGRID. But we're back to crunching :) I've seen that more or less everything is running fine. Apart from the occasional crash, results seem to be coming back to me fine. Is there anything specific I should look into? I already know about the progress reporting issue (if it persists), but there's not much more I can do on my end. If they plan to update the GPUGRID app at some point, I'll insist. |

|

Joined: 28 Mar 09 Posts: 490 Credit: 11,731,645,728 RAC: 57

|

"Sorry for missing out for a while. We were testing ATM in a setup not available for GPUGRID. But we're back to crunching :)"

I have a question regarding the minimum hardware requirements (i.e. amount, speed and type of RAM, CPU speed and type, motherboard speed and requirements, etc.) for a computer to complete these units successfully on either Windows or Linux. One of my computers has been running these units successfully; the other has not. They both have the same OS but different hardware. I just want to know the limits. |

|

Joined: 28 Feb 23 Posts: 35 Credit: 0 RAC: 0 |

"Sorry for missing out for a while. We were testing ATM in a setup not available for GPUGRID. But we're back to crunching :)"

I'm not sure I'm the best person to answer this question, but I'll try my best. AFAIK it should run anywhere; maybe the issue is more driver related? We recently tested on 40 series GPUs locally and it ran fine; I mention it because I saw some comments in the thread. |

|

Joined: 1 Jan 15 Posts: 1166 Credit: 12,260,898,501 RAC: 1

|

This kind of error

tar: run.log: file changed as we read it
tar: r*/*.xml: Cannot stat: No such file or directory
tar: Exiting with failure status due to previous errors

has happened quite often lately. Quico, anything you can do about it? |

|

Joined: 28 Mar 09 Posts: 490 Credit: 11,731,645,728 RAC: 57

|

"Sorry for missing out for a while. We were testing ATM in a setup not available for GPUGRID. But we're back to crunching :)"

Both computers are running the same driver, and both computers have the same type of video card, an RTX 2080 Ti. Here is the portion of the log from the computer that has the errors:

Running command git clone --filter=blob:none --quiet https://github.com/raimis/AToM-OpenMM.git /var/lib/boinc-client/slots/0/tmp/pip-req-build-9y8_6t1d
Running command git rev-parse -q --verify 'sha^d7931b9a6217232d481731f7589d64b100a514ac'
Running command git fetch -q https://github.com/raimis/AToM-OpenMM.git d7931b9a6217232d481731f7589d64b100a514ac
Running command git checkout -q d7931b9a6217232d481731f7589d64b100a514ac
error: subprocess-exited-with-error
× python setup.py egg_info did not run successfully.
│ exit code: -4
╰─> [0 lines of output]
[end of output]
note: This error originates from a subprocess, and is likely not a problem with pip.
error: metadata-generation-failed
× Encountered error while generating package metadata.
╰─> See above for output.
note: This is an issue with the package mentioned above, not pip.
hint: See above for details.
15:34:05 (42979): bin/bash exited; CPU time 3.604100
15:34:05 (42979): app exit status: 0x1
15:34:05 (42979): called boinc_finish(195)
</stderr_txt>

https://www.gpugrid.net/result.php?resultid=33535521

Would this be a software or hardware problem? |
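One detail in the log above may help narrow this down: the `exit code: -4`. On POSIX systems, Python's `subprocess` module reports a negative return code -N when the child process was terminated by signal N. The sketch below decodes such a code into a signal name; the function name `describe_exit_code` is hypothetical, and reading the log this way is an interpretation that the thread itself does not confirm.

```python
import signal


def describe_exit_code(code: int) -> str:
    """Describe a subprocess return code in the POSIX convention,
    where a negative value -N means 'terminated by signal N'."""
    if code < 0:
        signum = -code
        try:
            name = signal.Signals(signum).name
        except ValueError:
            name = "unknown"  # signal number not defined on this platform
        return f"killed by signal {signum} ({name})"
    return f"exited with status {code}"


print(describe_exit_code(-4))
```

Signal 4 is SIGILL (illegal instruction), which is typically raised when a binary uses a CPU instruction the processor does not support. If that is what happened here, it would point toward the CPU of the failing host rather than the driver or the GPU, but this remains a hypothesis to verify on that machine.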

©2025 Universitat Pompeu Fabra