Managing non-high-end hosts

Joined: 21 Feb 20 Posts: 1116 Credit: 40,876,970,595 RAC: 423,674

Definitely interested to know whether the PCIe reading goes back up after you switch back to 470, when you have time. I see that you picked up a task today :)

Joined: 13 Dec 17 Posts: 1423 Credit: 9,186,946,190 RAC: 1,288,374

The later kernels are not necessarily considered beta. In fact, the latest stable kernel is 5.15.7, as listed on the kernel.org site (https://www.kernel.org/). Some kernels are designated long-term support (LTS), and those are what non-rolling-release distros ship. Generally speaking, later kernels are more secure, have more bug fixes, and are faster than earlier kernels, notwithstanding the speed regressions that pop up in the beta branches every once in a while. Michael Larabel at Phoronix.com does the Linux community a great service by continually testing new kernels and regularly bisecting them to identify where a regression was introduced, then passing that information along to the developers and kernel maintainers. We should all support his efforts by turning off ad blockers on his website or by contributing as a paying member. I do.

ServicEnginIC Joined: 24 Sep 10 Posts: 593 Credit: 12,143,936,510 RAC: 4,251,066

"I see that you picked up a task today :)"

Yes! They come and go, and I caught one today :)

Ctrl + click the next two links and new browser tabs will open for a direct comparison between Nvidia Driver Version: 495.44 and Nvidia Driver Version: 470.86 on the same Host #186626. You're usually very insightful in your analysis.

There is a drastic change in the reported PCIe % usage between Regular Driver Branch V470.86 (31-37 %) and New Feature Driver Branch V495.44 (0-2 %) on this PCIe rev. 3.0 x16 test host. Now a new question immediately arises: is it due to some bug in the measuring/reporting algorithm of this new driver branch, or is it due to a true improvement in PCIe bandwidth management? I suppose that Nvidia driver developers usually have no time to read forums like this to clarify... (?)

For the moment, I don't know whether I'll keep using V470 or reinstall V495. It will depend on whether processing times are better or worse. After a few days of steady work, I'll have collected enough data to evaluate and decide.
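For a sense of scale, here is a back-of-the-envelope sketch that converts the reported percentages into throughput. It rests on an assumption the thread does not confirm: that the driver's PCIe % is measured against the theoretical one-direction bandwidth of the link (for PCIe 3.0, 8 GT/s per lane with 128b/130b encoding). The function names are illustrative only.

```python
# Rough sketch: convert a reported PCIe utilization percentage into an
# approximate throughput figure.
# ASSUMPTION: the driver's "PCIe %" is relative to the theoretical
# per-direction bandwidth of the link; the thread does not confirm this.

# Per-lane transfer rate (GT/s) and line-code efficiency per PCIe generation.
PCIE_GENERATIONS = {
    1: (2.5, 8 / 10),      # 8b/10b encoding
    2: (5.0, 8 / 10),      # 8b/10b encoding
    3: (8.0, 128 / 130),   # 128b/130b encoding
    4: (16.0, 128 / 130),  # 128b/130b encoding
}


def link_bandwidth_gbytes(generation: int, lanes: int) -> float:
    """Theoretical one-direction bandwidth of a PCIe link, in GB/s."""
    rate_gt_s, efficiency = PCIE_GENERATIONS[generation]
    # Each transfer moves one bit per lane; divide by 8 to get bytes.
    return rate_gt_s * efficiency * lanes / 8


def utilization_to_gbytes(percent: float, generation: int, lanes: int) -> float:
    """Approximate throughput implied by a utilization percentage."""
    return link_bandwidth_gbytes(generation, lanes) * percent / 100


if __name__ == "__main__":
    full = link_bandwidth_gbytes(3, 16)
    print(f"PCIe 3.0 x16 ceiling: {full:.2f} GB/s")
    for label, pct in (("470.86 low", 31), ("470.86 high", 37), ("495.44 high", 2)):
        print(f"{label:>12}: {pct:3d}% -> {utilization_to_gbytes(pct, 3, 16):.2f} GB/s")
```

Under that assumption, a PCIe 3.0 x16 link tops out at about 15.75 GB/s, so 31-37 % would correspond to roughly 4.9-5.8 GB/s, while 2 % would be about 0.3 GB/s.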

Joined: 21 Feb 20 Posts: 1116 Credit: 40,876,970,595 RAC: 423,674

Glad to see my hunch about the driver being the culprit was correct. My second hunch is that it might not impact processing speed, and that the issue is solely with what the driver is reporting, not with what's actually being used. I think your actual PCIe use with the 495 driver is normal for GPUGRID (~30%), but the driver is incorrectly reporting it as 1%. Another test might be to put the older 470 driver on your old LGA775 system and see what PCIe % it reports then. Maybe 100%? That might narrow it down to a scaling issue. Or maybe the old system and older GPUs are using an older/legacy API that fetches the correct data, while newer GPUs on a newer API fetch the wrong data. There's precedent for this kind of thing, where different API calls fetch the same "thing" but return different results. For example, in the stock BOINC code, everyone knows that Nvidia GPUs with >4GB memory will only report 4GB, no matter the true size; the true size is only returned for values less than 4GB. This is because the stock code uses a very old function that clamps the result to 32 bits (a 4095MB value), while a newer function that returns the correct result exists but isn't read by BOINC. Both functions are trying to fetch the same thing, memory size, and for cards with >4GB VRAM, they return different results.
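The 32-bit clamp is easy to see with a little arithmetic: an unsigned 32-bit value tops out at 2^32 - 1 = 4,294,967,295 bytes, which is just under 4096 MiB and truncates to 4095. The sketch below models the two kinds of query. `legacy_total_mem` and `modern_total_mem` are hypothetical stand-ins, not the actual BOINC or Nvidia functions, and the legacy one saturates (clamps) as described in the post rather than wrapping around.

```python
# Hypothetical model of two APIs that both report "total GPU memory".
# Neither function is real BOINC or CUDA code; they only illustrate the
# 32-bit clamping behaviour described in the post.

UINT32_MAX = 2**32 - 1  # largest value an unsigned 32-bit field can hold
MIB = 1024 * 1024


def legacy_total_mem(true_bytes: int) -> int:
    """Old-style query: the result saturates at the 32-bit maximum."""
    return min(true_bytes, UINT32_MAX)


def modern_total_mem(true_bytes: int) -> int:
    """Newer query: returns the full 64-bit size unchanged."""
    return true_bytes


def to_mb(n_bytes: int) -> int:
    """Whole MiB, truncated like a typical integer display."""
    return n_bytes // MIB


if __name__ == "__main__":
    for gib in (2, 4, 6, 8):
        true_size = gib * 1024 * MIB
        print(f"{gib} GB card: legacy={to_mb(legacy_total_mem(true_size))} MB, "
              f"modern={to_mb(modern_total_mem(true_size))} MB")
```

A 6 GB card such as the GTX 1660 Ti comes out as 4095 MB on the legacy path and 6144 MB on the modern one. Even an exactly-4GB card reads 4095 MB, because 2^32 bytes is one more than the 32-bit maximum.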

Joined: 13 Dec 17 Posts: 1423 Credit: 9,186,946,190 RAC: 1,288,374

The Nvidia 495.46 driver isn't considered a beta release now, just a New Feature branch release. I would posit that the main reason for the difference in PCIe usage between the 470 and 495 branch drivers is that the 495 branch now has the GBM (Generic Buffer Management) API built into it, in support of the Wayland compositor. I don't know exactly what the API is doing, but since the name involves "buffer", I surmise that it might be decreasing the amount of traffic going over the PCIe bus.

Joined: 21 Feb 20 Posts: 1116 Credit: 40,876,970,595 RAC: 423,674

I don't think the PCIe use at 1% is "real". I think the driver is just misreporting the real value. GBM doesn't seem to have anything to do with PCIe traffic; that would be up to the app. It seems the app is coded to store a lot of data in system memory rather than GPU memory, and it's constantly sending data back and forth. That is up to the app, not the driver.

ServicEnginIC Joined: 24 Sep 10 Posts: 593 Credit: 12,143,936,510 RAC: 4,251,066

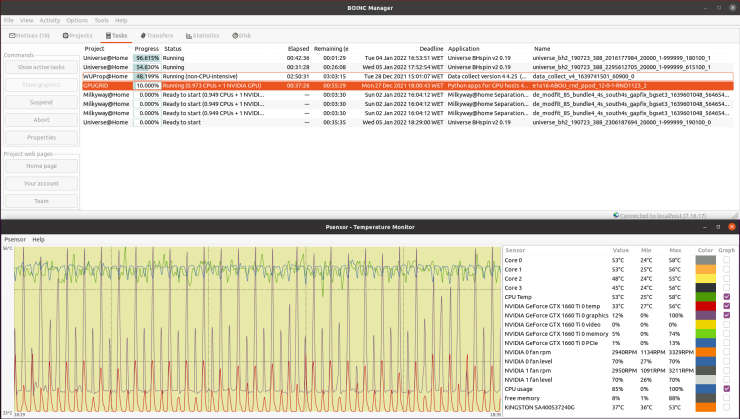

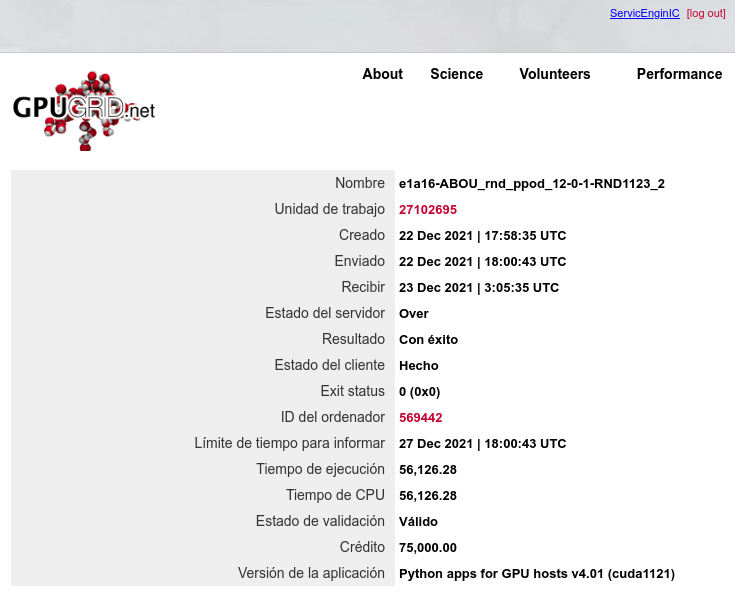

With the arrival of the latest phase of Python GPU beta tasks for Linux systems, all of my hosts started to fail them. Then I read an explanation from abouh stating that the minimum dedicated GPU RAM should be 6 GB or more. I've temporarily configured my Gpugrid preferences not to request beta apps on any of my 4 GB GPUs. I also deduced from Ian&Steve C.'s Message #58172 that the minimum system RAM should be 16 GB.

Picking components from several of my non-high-end hosts, I configured one of them, and it passed a first task, e1a18-ABOU_rnd_ppod_11-0-1-RND5755_4. That was on my Test Host #540272. Then I reproduced that configuration on my regular Host #569442, and a second task, e1a16-ABOU_rnd_ppod_12-0-1-RND1123_2, also succeeded. Therefore, I'm taking this configuration as my "Python GPU tasks minimum requirements", given my currently available hardware:

- GPU: Asus DUAL-GTX1660TI-O6G (reworked), with 6 GB of dedicated RAM
- CPU: Intel CORE I3-9100F @3.60 GHZ, 4 cores / 4 threads
- System RAM: 16 GB DDR4 @2666 MHz
- Operating system: Ubuntu Linux 20.04.3 LTS
- Nvidia drivers: V495.44

I was fortunate to be present when the second task was caught, and I was able to document several data points from its start until processing became stable. Before this, my Host #569442 had been processing a PrimeGrid GPU task. When the Python task started, an evident drop in GPU activity was observed. The task's % progress increased from 0% to 10%, while free system RAM decreased from 44% to 1% and GPU RAM usage increased until it almost reached 100% of its 6 GB. Then the task's % progress stayed fixed at 10% until the end, after about 9 hours of true execution time.
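The thresholds above can be turned into a quick check before enabling beta apps on a host. This is a minimal sketch: `HostSpec` and `python_gpu_shortfalls` are hypothetical names, and the 6 GB GPU / 16 GB system RAM limits are the empirical figures from this post (abouh's explanation and Message #58172), not an official GPUGRID specification.

```python
from dataclasses import dataclass
from typing import List

# Thresholds taken from this post (6 GB GPU RAM per abouh, 16 GB system RAM
# deduced from Message #58172). Empirical, not an official requirement list.
MIN_GPU_RAM_GB = 6
MIN_SYSTEM_RAM_GB = 16


@dataclass
class HostSpec:
    """Hypothetical description of a crunching host."""
    name: str
    gpu_ram_gb: float
    system_ram_gb: float


def python_gpu_shortfalls(host: HostSpec) -> List[str]:
    """Return the reasons a host falls short; an empty list means it qualifies."""
    problems: List[str] = []
    if host.gpu_ram_gb < MIN_GPU_RAM_GB:
        problems.append(f"GPU RAM {host.gpu_ram_gb} GB < {MIN_GPU_RAM_GB} GB")
    if host.system_ram_gb < MIN_SYSTEM_RAM_GB:
        problems.append(
            f"system RAM {host.system_ram_gb} GB < {MIN_SYSTEM_RAM_GB} GB"
        )
    return problems


if __name__ == "__main__":
    hosts = [
        HostSpec("GTX 1660 Ti host", gpu_ram_gb=6, system_ram_gb=16),
        HostSpec("hypothetical 4 GB GPU host", gpu_ram_gb=4, system_ram_gb=16),
    ]
    for host in hosts:
        issues = python_gpu_shortfalls(host)
        status = "OK for Python GPU betas" if not issues else "; ".join(issues)
        print(f"{host.name}: {status}")
```

Returning a list of reasons instead of a plain boolean makes it obvious which component to upgrade on a host that fails the check.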
As can be seen in the activity graph above, about 4 minutes of erratic CPU activity, followed by another 8 minutes of intense CPU activity, gave way to a stable, eye-catching periodic pattern. This graph is easier to understand after reading abouh's explanation in his Message #58194: "During the task, the agent first interacts with the environments for a while, then uses the GPU to process the collected data and learn from it, then interacts again with the environments, and so on."

The GPU keeps cycling between brief peaks of high activity (95% here) and valleys of low activity (11% here). The CPU, on the other hand, shows peaks of lower activity in an anti-cyclic pattern: when GPU activity increases, CPU activity decreases, and vice versa. The period of this cycle was about 21 seconds on this system.

Result for this task: see the screenshot above.
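If you log utilization yourself (for example, one GPU sample per second with `nvidia-smi --query-gpu=utilization.gpu --format=csv,noheader,nounits -l 1`, plus any CPU monitor), the two observations in this post, a roughly 21-second period and the anti-cyclic CPU/GPU relationship, can be checked numerically. The sketch below is one possible approach using only the standard library: it estimates the period as the lag with the highest autocorrelation and uses the Pearson correlation, where a value near -1 means the two series move in opposition. The function names are illustrative, and the trace in the usage block is synthetic: the 95%/11% levels come from this post, but the 5-second peak width and the CPU levels are invented.

```python
import math
from typing import List, Sequence


def _centered(xs: Sequence[float]) -> List[float]:
    mean = sum(xs) / len(xs)
    return [x - mean for x in xs]


def pearson(xs: Sequence[float], ys: Sequence[float]) -> float:
    """Pearson correlation; close to -1 means the series move in opposition."""
    cx, cy = _centered(xs), _centered(ys)
    num = sum(a * b for a, b in zip(cx, cy))
    den = math.sqrt(sum(a * a for a in cx) * sum(b * b for b in cy))
    return num / den


def autocorrelation(xs: Sequence[float], lag: int) -> float:
    """Normalized (biased) autocorrelation of a series at the given lag."""
    c = _centered(xs)
    num = sum(c[i] * c[i + lag] for i in range(len(c) - lag))
    den = sum(v * v for v in c)
    return num / den


def estimate_period(xs: Sequence[float], min_lag: int = 2, max_lag: int = 120) -> int:
    """Lag (in samples) with the strongest autocorrelation in [min_lag, max_lag]."""
    max_lag = min(max_lag, len(xs) // 2)
    return max(range(min_lag, max_lag + 1), key=lambda lag: autocorrelation(xs, lag))


if __name__ == "__main__":
    # Synthetic 1 Hz trace, NOT measured data: a 5 s GPU peak (95%) every
    # 21 s and 11% otherwise; the CPU does the opposite.
    gpu = [95.0 if t % 21 < 5 else 11.0 for t in range(300)]
    cpu = [20.0 if t % 21 < 5 else 70.0 for t in range(300)]
    print("estimated period (samples):", estimate_period(gpu))
    print("CPU/GPU correlation:", round(pearson(gpu, cpu), 3))
```

With this synthetic trace the estimate is 21 samples (21 s at one sample per second) and the correlation is close to -1. Real traces are noisier, so the result is only as good as the logging interval and the length of the trace.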

ServicEnginIC Joined: 24 Sep 10 Posts: 593 Credit: 12,143,936,510 RAC: 4,251,066

Lately, in the Canary Islands (Spain), electricity supply costs have been getting higher and higher, month after month. I have an ongoing "experiment": I've moved my currently highest-performing graphics card, this reworked GTX 1660 Ti, to my daily-use host, and I've switched off all of my other crunching machines. Over the next month(s), I should be able to estimate the effects of this. It should bring some savings on my electricity bill (and, collaterally, in my BOINC RAC ;-) ...

©2026 Universitat Pompeu Fabra