New version of ACEMD 2.17 on multi GPU hosts


| Author | Message |

|---|---|

ServicEnginIC Joined: 24 Sep 10 Posts: 593 Credit: 12,143,936,510 RAC: 4,251,066

|

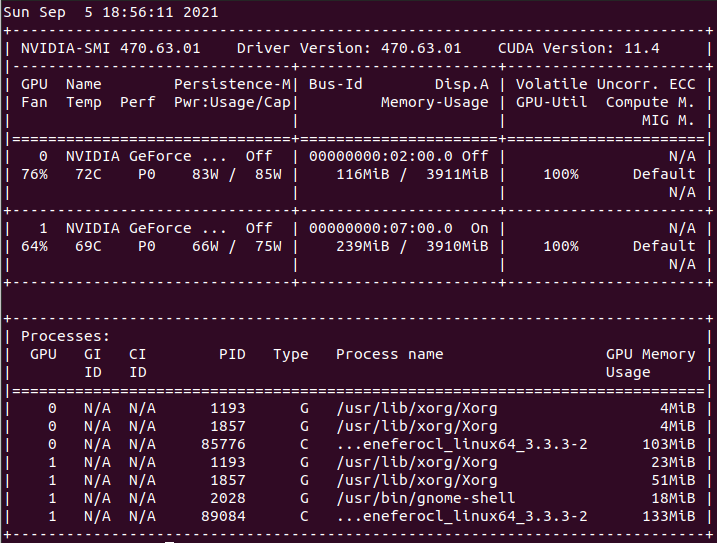

A new version of ACEMD, 2.17, was introduced on September 2nd 2021. It was announced by GDF in message #57257. Current and previous program versions can be consulted at the Gpugrid apps page. This new version shows a peculiar behavior on multi GPU hosts, not seen in previous app versions, and first mentioned by Ian&Steve C. in message #57261. For a deeper study, I happened to have two tasks of this new app version 2.17 running simultaneously on this twin GTX 1650 GPU system. Device #0 on this host is an ASUS ROG-STRIX-GTX1650-O4G-GAMING graphics card. I took a screenshot of the Boinc Manager readings. As seen in this image, Boinc Manager "thinks" that the first task is running on device #0, and the second task on device #1. However, looking at the Psensor monitoring utility, while device #0 is running at 100% activity, 77% memory usage and 61% PCIe usage, device #1 is really cold and inactive. This led me to think that both tasks were in fact running on the same device #0, with its resources split between the two tasks. And this was confirmed when the results came out: both task #27078190 and task #27078191 are shown to have run on device #0. Then I happened to catch one more task for the same system, which ran alone. The result for this task #27078213 can be seen here. While tasks #27078190 and #27078191, which ran concurrently, took 32451 and 32473 seconds respectively, task #27078213, which ran alone, took 15328 seconds. That is, less than half the execution time of the tasks executed concurrently. For additional confirmation: on another of my hosts, this triple GTX 1650 GPU system, these three tasks were executed simultaneously. Device #0 on this system is the same ASUS ROG-STRIX-GTX1650-O4G-GAMING graphics card as in the previously mentioned twin GPU system. While executing, Boinc Manager was showing that they were running on devices #0, #1 and #2.
But, as can be seen in this Psensor image, only device #0 is performing at full rate, while devices #1 and #2 are actually idle. After finishing, the execution times for these three tasks were 46512, 46677 and 46719 seconds. If we take 15328 seconds as the run time for a single task executed alone, these times are about 3x longer. And the other two GPUs, #1 and #2, stayed unused in the meantime, for both Gpugrid and other GPU projects. On massive multi GPU hosts, such as this impressive 8x GPU one of Ian&Steve C., the potential performance drop is multiplied by N. And maybe even device #0's resources could be overwhelmed when attempting to execute so many tasks simultaneously... |
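The run times quoted in this post can be turned into slowdown factors with a few lines of arithmetic. The following is only a small sketch using the numbers reported above; the function name `slowdown` is made up for illustration.

```python
# Run times in seconds, copied from the task results quoted in the post.
EXCLUSIVE_S = 15328                 # task #27078213, alone on device #0
TWIN_S = [32451, 32473]             # two tasks sharing device #0 (twin GPU host)
TRIPLE_S = [46512, 46677, 46719]    # three tasks sharing device #0 (triple GPU host)


def slowdown(concurrent_s, exclusive_s=EXCLUSIVE_S):
    """Return each concurrent run time as a multiple of the exclusive run time."""
    return [round(t / exclusive_s, 2) for t in concurrent_s]


print("twin host:  ", slowdown(TWIN_S))
print("triple host:", slowdown(TRIPLE_S))
```

The factors come out slightly above 2 and slightly above 3, which is consistent with N tasks sharing one GPU while the remaining N-1 GPUs sit idle.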

|

Joined: 11 Jul 09 Posts: 1639 Credit: 10,159,968,649 RAC: 3

|

BOINC has had two different ways of signalling to a science app which device number it should utilise. OLD (deprecated): pass the device number on the command line. NEW (current): pass the device number in an XML file, init_data.xml, in the slot directory. The situation is complicated here by the use of the wrapper app in the calling chain. Your screen shot (and every task I've looked at so far) shows that the wrapper is calling the acemd3 application, and it looks like it's using the command line convention. That shouldn't be happening. I've still failed to catch any of these new tasks. I need to maintain my Linux hosts soon, so I'll look for a newer driver while I'm at it. But if anyone catches a live task, and can examine init_data.xml, we might get some extra clues. Edit - look for lines like <gpu_type>NVIDIA</gpu_type> <gpu_device_num>1</gpu_device_num> <gpu_opencl_dev_index>1</gpu_opencl_dev_index> around 40 lines into the file. |
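For anyone who prefers a script to scrolling through the file, here is a minimal sketch that pulls the GPU assignment out of an init_data.xml. It assumes the file parses as well-formed XML; the `SAMPLE` text is a cut-down stand-in containing only the three tags quoted above, and `read_gpu_assignment` is a hypothetical helper name.

```python
import xml.etree.ElementTree as ET

# Cut-down stand-in for a real init_data.xml (assumption: only the three
# tags quoted in the post are shown; a real file has many more fields).
SAMPLE = """<app_init_data>
<gpu_type>NVIDIA</gpu_type>
<gpu_device_num>1</gpu_device_num>
<gpu_opencl_dev_index>1</gpu_opencl_dev_index>
</app_init_data>"""


def read_gpu_assignment(xml_text):
    """Return the GPU type and device number BOINC wrote for this slot."""
    root = ET.fromstring(xml_text)

    def first(tag):
        # Search the whole tree so the result does not depend on nesting.
        node = next(root.iter(tag), None)
        return node.text.strip() if node is not None and node.text else None

    num = first("gpu_device_num")
    return {
        "gpu_type": first("gpu_type"),
        "gpu_device_num": int(num) if num is not None else None,
    }


print(read_gpu_assignment(SAMPLE))
```

For a live task, the text would be read from the init_data.xml in the slot directory, for example under /var/lib/boinc-client/slots/ on a Linux package install.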

|

Joined: 21 Feb 20 Posts: 1116 Credit: 40,876,970,595 RAC: 423,674

|

The fact that BOINC “thinks” it’s running on the wrong device exposes two issues. 1. BOINC assigned it to the device it intended, but that was not properly communicated to the application. Maybe a disconnect through the wrapper process, or possibly it’s hard coded for device 0, as an artifact from dev testing. 2. This also shows that BOINC doesn’t do any verification of what device a process is ACTUALLY running on. It just trusts that the message was received. If it did verification it would show it running on the wrong device. It goes further than simply checking psensor for device activity. If you run nvidia-smi, you can see that the acemd process is actually assigned to and running on device 0, leaving the idle device with no process running. Since I only had one GPUGRID task running, and GPUGRID has a 100 resource share, with Einstein at 0 RS, when I rebooted BOINC, the GPUGRID process restarted on device 0 and BOINC showed/assigned it at 0 as well. This was likely a “when stars align” scenario. If I had more GPUGRID tasks, I would have undoubtedly continued to experience the issue. Regarding the method of passing the arguments, it should depend on what server version is being used (Einstein with its old server version has no problem with this method). It does appear to be some kind of command line argument, but which step of the process (pre or post wrapper) the stderr file refers to is not clear. The command is being sent as --boinc input --device 0. This is the exact same command structure as used with no issue on previous apps/tasks.

|
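The nvidia-smi check described above can be scripted. This is a sketch only: it parses the CSV text produced by `nvidia-smi --query-gpu=index,uuid --format=csv,noheader` and `nvidia-smi --query-compute-apps=gpu_uuid,pid,process_name --format=csv,noheader`, and the sample UUIDs, PIDs and paths below are invented for illustration, not taken from any host in this thread.

```python
import csv
import io
import subprocess
from collections import defaultdict


def _rows(csv_text):
    """Split nvidia-smi CSV output into lists of stripped fields."""
    reader = csv.reader(io.StringIO(csv_text), skipinitialspace=True)
    return [[cell.strip() for cell in row] for row in reader if row]


def processes_by_gpu(gpu_csv, apps_csv):
    """Map GPU index -> list of (pid, process name) actually running on it."""
    index_of = {uuid: int(idx) for idx, uuid in _rows(gpu_csv)}
    found = defaultdict(list)
    for uuid, pid, name in _rows(apps_csv):
        found[index_of[uuid]].append((int(pid), name))
    return dict(found)


def query(selector):
    """Run nvidia-smi with one --query-... selector and return its CSV text."""
    cmd = ["nvidia-smi", selector, "--format=csv,noheader"]
    return subprocess.run(cmd, capture_output=True, text=True, check=True).stdout


# Invented sample: two acemd3 processes, both on the GPU with index 0.
SAMPLE_GPUS = "0, GPU-aaaa\n1, GPU-bbbb\n"
SAMPLE_APPS = "GPU-aaaa, 4321, bin/acemd3\nGPU-aaaa, 4400, bin/acemd3\n"

print(processes_by_gpu(SAMPLE_GPUS, SAMPLE_APPS))
```

On a live host the two inputs would come from `query("--query-gpu=index,uuid")` and `query("--query-compute-apps=gpu_uuid,pid,process_name")`. A GPU index missing from the result has no compute process at all, which is the situation described for the idle device.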

ServicEnginIC Joined: 24 Sep 10 Posts: 593 Credit: 12,143,936,510 RAC: 4,251,066

|

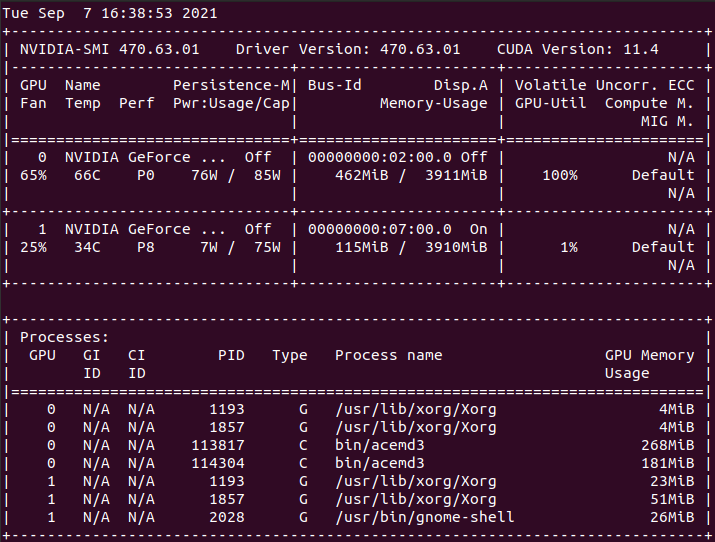

Thank you both for sharing your knowledge once more. I'm prioritizing my twin GPU host for requesting new tasks (although at the cost of penalizing my other hosts' chances). I'll consider your tips if I get some (currently scarce) Gpugrid task. Currently this system is running two Genefer 21 Primegrid tasks, which are also very demanding on GPU power. Device #0 task properties Device #1 task properties I found their assignments, as described by Richard Haselgrove, in their init_data.xml files in directories /var/lib/boinc-client/slots/4 and /var/lib/boinc-client/slots/5 respectively. In this situation, the nvidia-smi command returns as follows: |

|

Joined: 11 Jul 09 Posts: 1639 Credit: 10,159,968,649 RAC: 3

|

"Regarding the method of passing the arguments, it should depend on what server version is being used (Einstein with its old server version has no problem with this method). it does appear to be some kind of command line argument, but at which step of the process (pre or post wrapper) is referred to by the stderr file is not clear. The command is being sent as --boinc input --device 0. This is the exact same command structure as used with no issue on previous apps/tasks." We can discount the server theory - the snippet I posted from init_data.xml came from an Einstein task. What it actually depends on is the version of the BOINC API library linked into the science application at compile time. They used API_VERSION_7.15.0 for the autodock v7.17 app: I forget exactly when the transition took place, but it was much, much longer ago than that. The mechanism is very crude: search the application with a hex editor for api_version, and the number follows that string - the number in the previous paragraph is a direct paste using that method. Edit - the API_Version should also appear in the <app_version> tag in client_state.xml. If it's missing, the client is likely to revert to using the old, deprecated, calling syntax. |
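The hex editor search can also be done with a short script. This sketch assumes the embedded string has the form `API_VERSION_x.y.z`, as in the value pasted above; the function names are hypothetical and the test bytes are made up.

```python
import re

# Assumption: the version is embedded as ASCII text like "API_VERSION_7.15.0".
API_RE = re.compile(rb"API_VERSION_(\d+\.\d+\.\d+)")


def find_api_versions(data: bytes):
    """Return every distinct API version string found in a blob of bytes."""
    return sorted({m.group(1).decode("ascii") for m in API_RE.finditer(data)})


def find_api_versions_in_file(path):
    """Scan a binary on disk, e.g. the science app or the wrapper."""
    with open(path, "rb") as fh:
        return find_api_versions(fh.read())


# Made-up bytes standing in for part of an executable.
print(find_api_versions(b"\x7fELF\x00junk\x00API_VERSION_7.15.0\x00more"))
print(find_api_versions(b"\x7fELF\x00no version string here"))
```

An empty list for a binary would match the case described in the edit: no API version available, so the client may fall back to the old calling syntax.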

ServicEnginIC Joined: 24 Sep 10 Posts: 593 Credit: 12,143,936,510 RAC: 4,251,066

|

Today when returning home I found a surprise waiting: two "New version of ACEMD" 2.17 long tasks are running concurrently on the mentioned twin GPU host. First of all, I've stopped requesting new tasks from all my hosts, to increase the chance of any newly generated tasks being caught by other users. Then, I've checked that the behavior described at the beginning of this thread is reproduced with these two tasks as well. Task #32640189 Task #32640190 Boinc Manager reading Device #0 task properties (as shown by Boinc Manager) Device #1 task properties (as shown by Boinc Manager) init_data.xml slots/5 (Device #1 task) <gpu_type>NVIDIA</gpu_type> init_data.xml slots/6 (Device #0 task) <gpu_type>NVIDIA</gpu_type> In this situation, the nvidia-smi command returns as follows: That is, although the assignments in the init_data.xml files look as expected, and Boinc Manager shows that both devices #0 and #1 are running their own tasks, both tasks are really running on the same device #0, and device #1 is idle. I've copied the following three whole folders to a safe location for reference: /var/lib/boinc-client/slots/5 /var/lib/boinc-client/slots/6 /var/lib/boinc-client/projects/www.gpugrid.net I can examine them in search of any other clue, but I need further advice for this... |

|

Joined: 11 Jul 09 Posts: 1639 Credit: 10,159,968,649 RAC: 3

|

I can think of three things I'd really like to see: 1) In the slot directories: is there an init_data.xml file, and does the content match the <device_number> reported by BOINC for that slot? 2) What command line was used to launch the ACEMD3 process? I'd use process explorer to see that in Windows. 3) Does the ACEMD3 v2.17 binary have an API_VERSION text string embedded within it? |

ServicEnginIC Joined: 24 Sep 10 Posts: 593 Credit: 12,143,936,510 RAC: 4,251,066

|

"1) In the slot directories: is there an init_data.xml file, and does the content match the <device_number> reported by BOINC for that slot?" Yes, they did exist, and they matched the devices reported by BOINC Manager. But the task reported by BOINC Manager as running on device 1 actually ran on a different physical device, as reported by the wrapper (and the nvidia-smi command). I'll upload images for future reference, given that the tasks will vanish from the Gpugrid database shortly. - Slots/6: Task e1s6_I6-ADRIA_test_acemd3_newapp_KIXCMYB-0-2-RND9347_2. Reported by BOINC Manager as running on device 0, the same one where it actually ran. - Slots/5: Task e1s1_I2-ADRIA_test_acemd3_newapp_KIXCMYB-1-2-RND8042_0. Reported by BOINC Manager as running on device 1, but it actually ran on device 0. As shown by the nvidia-smi command, device 1 was actually idle (P8 state, 1% overall activity), while the two mentioned tasks were running concurrently on device 0. I (barely) rescued the last previous version 2.12 task that ran on a device other than 0 on any of my hosts. v2.12 Task 1_3-CRYPTICSCOUT_pocket_discovery_6cacc905_1fa2_4ed0_98e6_1139c20e13df-0-2-RND1223_2. It ran on device 2 of this triple GTX 1650 GPU host. v2.17 Task e1s5_I6-ADRIA_test_acemd3_newapp_KIXCMYB-0-2-RND2315_0. It ran on device 0 of the same host. There are subtle differences between them: - The wrapper version is different: the previous v2.12 wrapper version was 7.7.26016. The current v2.17 wrapper version is 7.5.25014 (older?) - In the previous v2.12 version, the wrapper was running the acemd3 process directly. In the current v2.17 version, a preliminary decompressing stage seems to be executed, then the bin/acemd3 process is run. - v2.12 wrapper device assignment dialog: (--boinc input --device 2); v2.17 wrapper device assignment dialog: (--boinc --device 0) - Any other difference that I can't see? |

|

Joined: 11 Jul 09 Posts: 1639 Credit: 10,159,968,649 RAC: 3

|

Thanks. I finally got my own task(s), so I can answer my own other questions. 2) I used htop to view the actual command lines. There are a huge number of acemd3 threads - at least 18 (??!!) - but the command line is uniform: bin/acemd3 --boinc --device 0 (which was right for this particular task - BOINC had assigned it to device 0). 3) There's no API_VERSION string in the acemd3 binary, so it doesn't have the ability to read the init_data.xml file natively. But it does have strings for the parameters --boinc and --device. '--boinc' doesn't appear in the Wrapper documentation, but --device does. It appears to be correctly specified in the job.xml file as $GPU_DEVICE_NUM, per documentation. My task was 'Created 9 Sep 2021 | 6:49:00 UTC', and correctly specifies wrapper version 26014 - the latest available precompiled from BOINC. 25014 may have been a typo, since corrected. 26016 was pre-compiled for Windows only. Now, I just have to wait for a new task, and force it to run on device 1 to see what happens then. [afterthought: the precompiled wrapper 26014 is very old - April 2015. I do hope they're recompiling from source] |
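As an alternative to reading the command line off htop, on Linux the same information is available from /proc/<pid>/cmdline, where the arguments are separated by NUL bytes. The sketch below extracts the value following `--device`; the helper names are hypothetical, and the two sample command lines are the ones quoted in this thread.

```python
def device_from_cmdline(raw: bytes):
    """Return the integer after --device in a NUL-separated command line, or None."""
    argv = [part.decode("utf-8", "replace") for part in raw.split(b"\0") if part]
    for i, arg in enumerate(argv[:-1]):
        if arg == "--device":
            try:
                return int(argv[i + 1])
            except ValueError:
                return None
    return None


def device_of_pid(pid):
    """Read the command line of a running process (Linux only)."""
    with open(f"/proc/{pid}/cmdline", "rb") as fh:
        return device_from_cmdline(fh.read())


# Command lines as reported in the thread, encoded the way /proc stores them.
NEW_STYLE = b"bin/acemd3\0--boinc\0--device\0" + b"0\0"
OLD_STYLE = b"acemd3\0--boinc\0input\0--device\0" + b"2\0"

print(device_from_cmdline(NEW_STYLE))
print(device_from_cmdline(OLD_STYLE))
```

Comparing this value with the device number in the slot's init_data.xml shows directly whether the wrapper passed on what BOINC assigned.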

|

Joined: 11 Jul 09 Posts: 1639 Credit: 10,159,968,649 RAC: 3

|

"- At previous v2.12 version, wrapper was running directly the acemd3 process. At current v2.17 version, a preliminary decompressing stage seems to be executed, then bin/acemd3 process is run." The decompressing stage produces two huge folders in the slot directory: bin and lib. The conda-pack.tar archive alone is 1 GB, and decompressing it, for each task, expands it to 1.8 GB. Watch out for your storage quotas and SSD write limits! But at least the libboost 1.74 filesystem library is in that lib folder, so hopefully that'll be an end to that class of error. |
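To put the SSD warning into numbers, here is a rough back-of-envelope sketch. It assumes 1.8 GB is written per task by the decompression alone, and it borrows the 15328 second exclusive run time reported earlier in this thread as a typical task length; both are assumptions for illustration, and real figures will vary by host and task type.

```python
UNPACKED_GB_PER_TASK = 1.8      # size after decompressing conda-pack.tar (from the post)
TASK_SECONDS = 15328            # assumption: exclusive run time reported earlier
SECONDS_PER_DAY = 24 * 60 * 60


def daily_unpack_writes_gb(n_gpus, task_seconds=TASK_SECONDS):
    """Estimate GB written per day just by unpacking, one task per GPU at a time."""
    tasks_per_day = n_gpus * SECONDS_PER_DAY / task_seconds
    return tasks_per_day * UNPACKED_GB_PER_TASK


for gpus in (1, 2, 8):
    print(f"{gpus} GPU(s): about {daily_unpack_writes_gb(gpus):.0f} GB/day")
```

Under these assumptions a twin GPU host would write on the order of 20 GB per day from unpacking alone, before any checkpoint or result files are counted.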

|

Joined: 21 Feb 20 Posts: 1116 Credit: 40,876,970,595 RAC: 423,674

|

there seems to be a difference in the command used for the device assignment from the past (I missed this before). old tasks/apps used this: --boinc input --device n. the 2.17 app uses this: --boinc --device 0. feels like it's hard coded to 0 and not using what BOINC assigns.

|

|

Joined: 11 Jul 09 Posts: 1639 Credit: 10,159,968,649 RAC: 3

|

The command line for acemd3 is set by the wrapper. I can't see a meaningful use for the word 'input' on that line. Keywords are set with '--' leading, values without. So the old syntax might have been --boinc [filename] --device [integer]. It feels odd, but it's possible - there is a file in the workunit bundle which is given the logical name 'input' at runtime. In which case, why is it missing from the 217 app template, and what is the effect likely to be? (noting that the 217 apps are actually running with the input files supplied) Just possibly, the sequence might be: Detected keyword --boinc. That might show up if I can make a task run standalone in a terminal window - though that'll be mightily fiddly with all these weird filenames around. |

|

Joined: 21 Feb 20 Posts: 1116 Credit: 40,876,970,595 RAC: 423,674

|

just noting the obvious difference between what worked in the past vs what doesn't work now. it worked before, but not now. what's different? the lack of "input" in the command.

|

|

Joined: 11 Jul 09 Posts: 1639 Credit: 10,159,968,649 RAC: 3

|

And I'm trying to think like a computer, and trying to work out why the observed difference might cause the observed effect. |

|

Joined: 21 Feb 20 Posts: 1116 Credit: 40,876,970,595 RAC: 423,674

|

that's based on the assumption that the string "input" relates to a filename. it could instead be a preprogrammed specific command, telling the app/wrapper to perform some function. without full knowledge of the code, it's just speculation at this point.

|

|

Joined: 21 Feb 20 Posts: 1116 Credit: 40,876,970,595 RAC: 423,674

|

API_VERSION does appear in the wrapper binary too. you can see this by converting "API_VERSION" to a hex string and searching the binary with hexedit. which makes sense since I was under the impression that the whole point of the wrapper was to be a middle man between BOINC and the science app, allowing some greater flexibility in how the project packages and delivers their apps. older 2.12 apps have wrapper API_VERSION_7.7.0, the new 2.17 app has wrapper API_VERSION_7.5.0

|

|

Joined: 11 Jul 09 Posts: 1639 Credit: 10,159,968,649 RAC: 3

|

Yes, I'd expect that. The whole point of the wrapper is to be 'boinc-aware': it will need to read init_data.xml, and export the readings (like device number) into an external format like the one that the new version of acemd3 can act on. Or not, as the case may be. |
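To make that hand-off concrete, here is an illustrative sketch of the step being described: read the device number from init_data.xml and substitute it for the `$GPU_DEVICE_NUM` placeholder in a command line template. This is not the wrapper's actual code, only a model of the expected behavior; `expand_command` and the cut-down XML are invented for the example.

```python
import xml.etree.ElementTree as ET


def expand_command(template, init_data_xml):
    """Replace $GPU_DEVICE_NUM in a command template with BOINC's assigned device."""
    root = ET.fromstring(init_data_xml)
    node = next(root.iter("gpu_device_num"), None)
    if node is None or node.text is None:
        raise ValueError("no <gpu_device_num> found in init_data.xml")
    return template.replace("$GPU_DEVICE_NUM", str(int(node.text)))


# Cut-down init_data.xml for a task that BOINC assigned to device 1.
INIT_DATA = "<app_init_data><gpu_device_num>1</gpu_device_num></app_init_data>"

print(expand_command("--boinc --device $GPU_DEVICE_NUM", INIT_DATA))
```

If this step works, a task assigned to device 1 is launched with `--device 1`. The 2.17 symptom, where every task was launched with `--device 0`, is what one would see if the substitution did not pick up the assigned value.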

ServicEnginIC Joined: 24 Sep 10 Posts: 593 Credit: 12,143,936,510 RAC: 4,251,066

|

Finally, this problem has been corrected by the deployment of New Version of ACEMD 2.18 on Sep 14 2021 | 11:44:39 UTC. The wrapper version packed with this new app is the same one that was already used with the previous New Version of ACEMD 2.12: 7.7.26016. It seems that this has been the solution. To Gpugrid Project Developers, once more: Well done!!! |

|

Joined: 13 Dec 17 Posts: 1423 Credit: 9,186,946,190 RAC: 1,288,374

|

Have you actually received the new app with some new work? No luck here for the past few days. |

ServicEnginIC Joined: 24 Sep 10 Posts: 593 Credit: 12,143,936,510 RAC: 4,251,066

|

"Have you actually received the new app with some new work?" Yes, I did. e1s10_I18-ADRIA_test_acemd3_devicetest_KIXCMYB-0-2-RND3507_1 (Link to Gpugrid webpage) e1s10_I18-ADRIA_test_acemd3_devicetest_KIXCMYB-0-2-RND3507_1 (Image) This task was actually processed on device 1 of my twin GTX 1650 GPU host. I had previously reset the Gpugrid project in BOINC Manager on that system. |
