Ampere 10496 & 8704 & 5888 fp32 cores!


| Author | Message |

|---|---|

|

Joined: 22 May 20 Posts: 110 Credit: 115,525,136 RAC: 0

|

Kudos to you! From what I understand so far, I have to support Zoltan's opinion. I really must stress that I am very keen on efficiency, as that is something everyone should factor into their hardware decisions. Anyway, it still seems to offer a valid value proposition for me, especially at this price point. And it is still very early; future support of RTX 30xx cards could definitely offer some potential for optimisation. |

|

Joined: 21 Feb 20 Posts: 1116 Credit: 40,876,970,595 RAC: 423,674

|

"I got my EVGA 3070 black today and did some testing"

"Thank you for all the effort you have put in this benchmark!"

Take it with a grain of salt so far, which is exactly why I made the disclaimer. As you yourself mentioned, the types of calculations are different, and if SETI is performing a large number of INT calculations then this result wouldn't be unexpected. Ampere should see the most benefit from a pure FP32 load, and according to your previous comments, GPUGRID should be mostly FP32.

It could also be that source code changes might be necessary to take full advantage of the new architecture. The new SETI app has ZERO source code changes from the older 10.2 app; I simply compiled it with the 11.1 CUDA library instead of 10.2. That's why I was attempting to build a FAHbench version with CUDA 11.1, but I hit a snag there and will have to wait.

I don't do FAH, but since users here have said that GPUGRID is similar in work performed and software used, the 3070 should perform on par with the 2080ti. Check this page for a comparison: https://folding.lar.systems/folding_data/gpu_ppd_overall showing the F@h PPD of a 3070 *just* behind the 2080ti. At $500 and 220W, that makes sense. Not as power efficient as I'd like, but pushing it beyond the 2080ti nonetheless.

|

|

Joined: 13 Dec 17 Posts: 1423 Credit: 9,187,696,190 RAC: 1,276,885

|

From the issue raised on the OpenMM github repo, it seems they let their SSL certificate expire back in September. And no one has done anything about it. https://github.com/openmm/openmm-org/issues/38 https://github.com/openmm/openmm This OpenMM forum? https://simtk.org/plugins/phpBB/indexPhpbb.php?group_id=161&pluginname=phpBB |

|

Joined: 13 Dec 17 Posts: 1423 Credit: 9,187,696,190 RAC: 1,276,885

|

The compiler optimizations for Zen 3 haven't made it into any Linux kernel yet either. GCC 11 and Clang 12 are supposed to get znver3 targets in the upcoming 5.10 kernel next April for the 21.04 distro release. https://www.phoronix.com/scan.php?page=news_item&px=AMD-Zen-3-Linux-Expectations |

|

Joined: 21 Feb 20 Posts: 1116 Credit: 40,876,970,595 RAC: 423,674

|

Got the 3070 up and running on Einstein@home for some more testing. As far as Einstein performance:

- the 2080ti does the current batch of GW tasks in about 4 minutes, using 225W (360 t/day)
- the 3070 does the current batch of GW tasks in about 5 minutes, using 170W (288 t/day)
- the 2080ti does the FGRP tasks in about 6 minutes, using 225W (240 t/day)
- the 3070 does the FGRP tasks in about 7:20 minutes, using 150W (196.4 t/day)

So for Einstein GW tasks, the 3070 is about 5% more efficient, and for the Einstein GR (FGRP) tasks, the 3070 is about 19% more efficient.

Now this isn't to say you should buy Ampere (or even Nvidia cards) for Einstein, since some of the newer AMD cards perform much better there (faster and overall more efficient). But this will serve as an additional data point with a different processing type. You can see the efficiency improvements here, especially on the GR tasks.

|
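The efficiency figures in the post above can be reproduced from the quoted run times and power draws. The sketch below is only one plausible reading: it assumes "efficiency" means energy used per task, which gives numbers close to the quoted 5% and 19%. The function and variable names are made up for illustration.

```python
# Figures quoted in the post: (minutes per task, watts drawn).
RUNS = {
    "GW": {"2080 Ti": (4.0, 225.0), "3070": (5.0, 170.0)},
    "FGRP": {"2080 Ti": (6.0, 225.0), "3070": (7 + 20 / 60, 150.0)},
}

MINUTES_PER_DAY = 24 * 60


def tasks_per_day(minutes_per_task: float) -> float:
    """Tasks completed in 24 hours at a constant time per task."""
    return MINUTES_PER_DAY / minutes_per_task


def energy_per_task_wh(minutes_per_task: float, watts: float) -> float:
    """Energy per task in watt-hours, assuming constant power draw."""
    return watts * minutes_per_task / 60.0


def energy_saving(task: str) -> float:
    """Fraction of per-task energy the 3070 saves versus the 2080 Ti."""
    old = energy_per_task_wh(*RUNS[task]["2080 Ti"])
    new = energy_per_task_wh(*RUNS[task]["3070"])
    return 1.0 - new / old


def tasks_per_watt_gain(task: str) -> float:
    """Relative gain in tasks/day per watt for the 3070 versus the 2080 Ti."""
    def per_watt(card: str) -> float:
        minutes, watts = RUNS[task][card]
        return tasks_per_day(minutes) / watts
    return per_watt("3070") / per_watt("2080 Ti") - 1.0


if __name__ == "__main__":
    for task in RUNS:
        print(f"{task}: {energy_saving(task):.1%} less energy per task, "
              f"{tasks_per_watt_gain(task):.1%} more tasks/day per watt")
```

Under these assumptions the 3070 uses about 5.6% (GW) and 18.5% (FGRP) less energy per task. Expressed as tasks per day per watt, the same data gives gains of about 5.9% and 22.7%, so the headline percentage depends on which definition is used.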

Retvari Zoltan Joined: 20 Jan 09 Posts: 2380 Credit: 16,897,957,044 RAC: 0

|

I wonder: if you slowed down the 2080Ti by 20% (reducing GPU voltage accordingly as well) to match the speed of the 3070, would it be about as efficient? (Or even better in the case of GW tasks.) |

|

Joined: 21 Feb 20 Posts: 1116 Credit: 40,876,970,595 RAC: 423,674

|

The 2080ti was already power limited to 225W. It's not possible (under Linux) to reduce the voltage; there are just no tools for it. Reducing the power limit has the indirect effect of reducing voltage, but I don't have direct control of the voltage. I could power limit further, but that would only slow the card further. You start to lose too much clock speed below 215-225W in my experience (across 6 different 2080tis). The 2080ti was also watercooled, with temps never exceeding 40C, so it had as much of an advantage as it could have had.

The 3070 was run at a 200W power limit, but it never even came close to that in these Einstein loads, with speed probably only limited by temp/clock boost bins. But that's really par for the course on mid-level Nvidia cards running Einstein tasks: the GR tasks are just lightweight and don't pull a lot of power, and the GW tasks are more CPU bound than anything else, so you don't get full GPU utilization. Further efficiency gains could probably be made here on the 3070 with more power limiting and overclocking, but I didn't bother for this test.

|
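The post above relies on power limiting rather than direct voltage control. As a rough sketch of how such a limit is commonly applied on Linux, the helper below builds an `nvidia-smi` call using its `-i` (GPU index) and `-pl` (power limit in watts) options. The function names and the GPU index are hypothetical; the 225 W value is the one mentioned in the post. Changing the limit normally needs root privileges, and the driver rejects values outside the range the card supports.

```python
import subprocess
from typing import List


def power_limit_command(gpu_index: int, watts: int) -> List[str]:
    """Build the nvidia-smi argument list that sets a power limit on one GPU."""
    if gpu_index < 0:
        raise ValueError("gpu_index must be non-negative")
    if watts <= 0:
        raise ValueError("watts must be positive")
    return ["nvidia-smi", "-i", str(gpu_index), "-pl", str(watts)]


def apply_power_limit(gpu_index: int, watts: int) -> None:
    """Run the command; raises CalledProcessError if nvidia-smi refuses it."""
    subprocess.run(power_limit_command(gpu_index, watts), check=True)


if __name__ == "__main__":
    # Only prints the command; call apply_power_limit() to actually run it.
    print(" ".join(power_limit_command(0, 225)))
```

Keeping the command builder separate from the function that runs it makes it easy to inspect the command before anything touches the hardware.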

|

Joined: 22 Aug 19 Posts: 7 Credit: 168,393,363 RAC: 0

|

Any apps i can use yet on my 30s cards? |

|

Joined: 21 Feb 20 Posts: 1116 Credit: 40,876,970,595 RAC: 423,674

|

"Any apps i can use yet on my 30s cards?"

Nope.

|

|

Joined: 21 Feb 20 Posts: 1116 Credit: 40,876,970,595 RAC: 423,674

|

https://www.gpugrid.net/workunit.php?wuid=27026028

With no Ampere-compatible app, and no mechanism to prevent work from being sent to incompatible systems, situations like this will only become more common as more and more users upgrade to these new cards. 3 out of the 6 systems that have handled this WU were using Ampere cards and failed because of it. If we had an Ampere-compatible CUDA 11.1 app, this would have been completed by the first system.

|

Retvari Zoltan Joined: 20 Jan 09 Posts: 2380 Credit: 16,897,957,044 RAC: 0

|

I "saved" a workunit earlier (my host was the 7th to crunch it and the 1st successful one). It had been sent to two Ampere cards before. |

|

Joined: 21 Feb 20 Posts: 1116 Credit: 40,876,970,595 RAC: 423,674

|

I have a couple like that that I similarly saved, even some _7s (the 8th host). For this one, 50% of the users had RTX 30-series cards: https://www.gpugrid.net/workunit.php?wuid=27025291

|

|

Joined: 30 Oct 19 Posts: 7 Credit: 405,900 RAC: 0

|

Is there any update regarding nVidia Ampere Workunits?? My Ampere cards are getting bored -.- |

|

Joined: 21 Feb 20 Posts: 1116 Credit: 40,876,970,595 RAC: 423,674

|

my 3080Ti (limited to 300W mind you) did this ADRIA task in under 9.5hrs https://www.gpugrid.net/result.php?resultid=32642251 anyone with a high power 3090 or 3080Ti run faster? my model 3080Ti will only go up to 366W, but I know some 3080Tis and 3090s can reach into the 400-500W range.

|

|

Joined: 27 Aug 21 Posts: 38 Credit: 7,254,068,306 RAC: 0

|

I am not running 3090s or 3080ti cards, but I do have some times/comparisons for high-end Turing and Ampere GPUs for Adria tasks. Nvidia Quadro RTX 6000: 8.4 hours. Nvidia RTX A6000: 6.7 hours. |

Retvari Zoltan Joined: 20 Jan 09 Posts: 2380 Credit: 16,897,957,044 RAC: 0

|

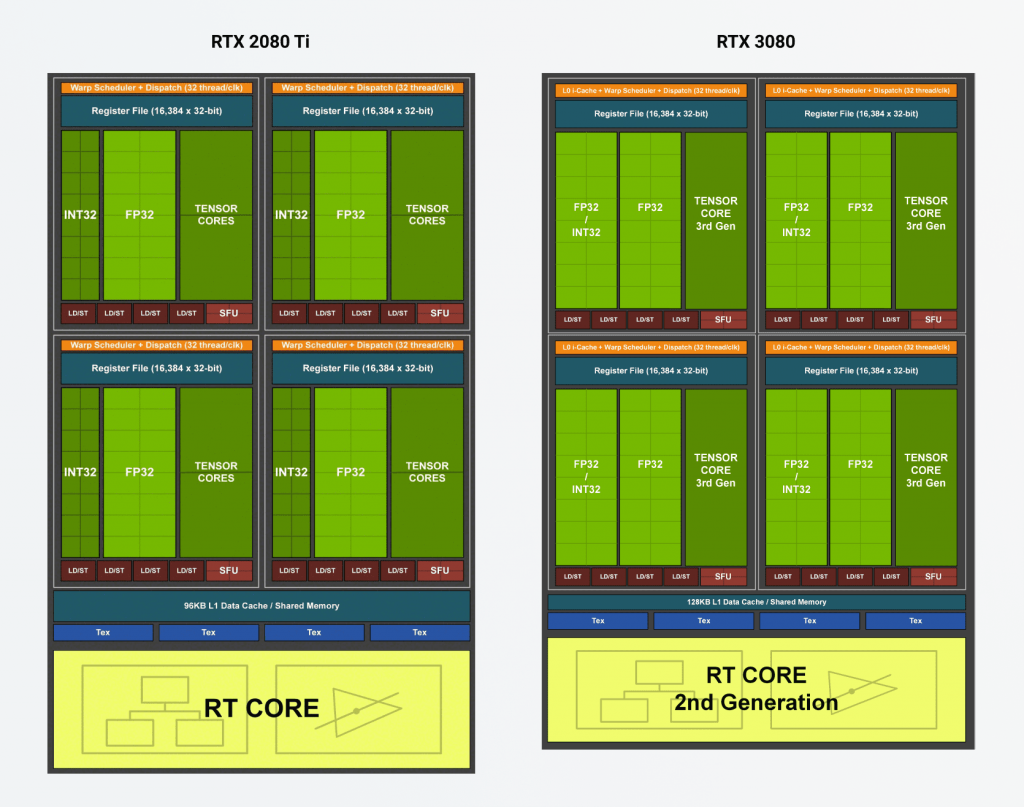

"I am not running 3090s or 3080ti cards but I do have some times/comparisons for high-end Turing and Ampere GPUs for Adria tasks."

The NVidia Quadro RTX 6000 is a "full chip" version of the RTX 2080Ti (4608 vs 4352 CUDA cores), while the NVidia RTX A6000 is the "full chip" version of the RTX 3090 (10752 vs 10496 CUDA cores). The rumoured RTX 3090Ti will have the "full chip" as well. The RTX 3080 Ti has 10240 CUDA cores. (The real-world GPUGrid performance of Ampere architecture cards scales with half of the advertised number of CUDA cores.) |
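The "half of the advertised cores" rule of thumb can be checked against the two Adria run times reported earlier in the thread (8.4 hours on the Quadro RTX 6000, 6.7 hours on the RTX A6000). The sketch below compares the observed speedup with what the advertised and the halved core counts would predict. It deliberately ignores clock speed, memory bandwidth and power limits, so treat it as a sanity check on the claim rather than a benchmark; all names are made up for illustration.

```python
# Core counts and run times as stated in the thread.
TURING_CORES = 4608           # Quadro RTX 6000
AMPERE_ADVERTISED = 10752     # RTX A6000, advertised CUDA cores
TURING_HOURS = 8.4
AMPERE_HOURS = 6.7

# Speedup actually observed on the Adria task.
observed_speedup = TURING_HOURS / AMPERE_HOURS

# Speedup predicted if run time scaled with the advertised core count.
advertised_ratio = AMPERE_ADVERTISED / TURING_CORES

# Speedup predicted if only half of the advertised Ampere cores "count".
halved_ratio = (AMPERE_ADVERTISED / 2) / TURING_CORES

if __name__ == "__main__":
    print(f"observed speedup:         {observed_speedup:.2f}x")
    print(f"advertised-core estimate: {advertised_ratio:.2f}x")
    print(f"half-core estimate:       {halved_ratio:.2f}x")
```

With these inputs the observed speedup is about 1.25x, much closer to the half-core estimate (about 1.17x) than to the advertised-core estimate (about 2.33x).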

|

Joined: 27 Aug 21 Posts: 38 Credit: 7,254,068,306 RAC: 0

|

Is that true for all NVidia GPUs or just Ampere? Just out of curiosity, why is it this way? |

|

Joined: 13 Dec 17 Posts: 1423 Credit: 9,187,696,190 RAC: 1,276,885

|

Just guessing here, but since every new generation of Nvidia cards has basically doubled or at least increased the CUDA core count, and since the GPUGrid app as well as a very few other project apps are really well coded for parallelization of computation, you can state that the crunch time scales with more cores. You can tell how well optimized an application is by how much sustained utilization it produces and how close to the max TDP of the card the app runs. The GPUGrid apps and the Minecraft apps are the only two apps that I know of that will run at 97-100% utilization through the entire computation at the full power capability of the card. Kudos to the app developers of these projects. Job well done! |

Retvari Zoltan Joined: 20 Jan 09 Posts: 2380 Credit: 16,897,957,044 RAC: 0

|

It's true only for the Ampere architecture. (The real-world GPUGrid performance of Ampere architecture cards scales with half of the advertised number of CUDA cores.) As you can see in the picture above, the number of FP32 units has been doubled in the Ampere architecture (the INT32 units have been "upgraded"), but they reside within (almost) the same streaming multiprocessor (SM), so the SM cannot feed that many cores much better. From a cruncher's point of view, the number of SMs should have been doubled as well (by making "smaller" SMs). The other limiting factor is power consumption, as the RTX 3080Ti (RTX 3090 etc.) easily hits the 350W power limit with this architecture. https://www.reddit.com/r/hardware/comments/ikok1b/explaining_amperes_cuda_core_count/ https://www.tomshardware.com/features/nvidia-ampere-architecture-deep-dive https://support.passware.com/hc/en-us/articles/1500000516221-The-new-NVIDIA-RTX-3080-has-double-the-number-of-CUDA-cores-but-is-there-a-2x-performance-gain- |

|

Joined: 13 Dec 17 Posts: 1423 Credit: 9,187,696,190 RAC: 1,276,885

|

As long as you can keep an INT32 operation out of the warp scheduler, then Ampere series can do two FP32 operations on the same clock. |
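The point about INT32 work limiting the doubled FP32 rate can be illustrated with a toy issue model. It assumes, as a simplification of public architecture descriptions, that each SM partition has two 16-lane datapaths: on Turing one is FP32-only and the other INT32-only, while on Ampere one is FP32-only and the other handles either FP32 or INT32. Scheduling, memory stalls and register pressure are ignored, and all function names are made up for illustration.

```python
def cycles_turing(fp_ops: float, int_ops: float, lanes: int = 16) -> float:
    """FP32 and INT32 run on separate datapaths; the longer queue dominates."""
    return max(fp_ops, int_ops) / lanes


def cycles_ampere(fp_ops: float, int_ops: float, lanes: int = 16) -> float:
    """INT32 must use the shared datapath; FP32 fills whatever capacity is left.

    If INT work is at most half of all work, both datapaths stay busy and the
    total is split evenly; otherwise the shared datapath is the bottleneck.
    """
    total = fp_ops + int_ops
    return max(int_ops, total / 2) / lanes


def ampere_speedup(fp_ops: float, int_ops: float) -> float:
    """Per-partition, per-clock speedup of Ampere over Turing in this model."""
    return cycles_turing(fp_ops, int_ops) / cycles_ampere(fp_ops, int_ops)


if __name__ == "__main__":
    for int_ops in (0, 36, 100):
        print(f"100 FP32 + {int_ops} INT32 ops: "
              f"{ampere_speedup(100, int_ops):.2f}x")
```

In this model a pure FP32 stream gets the full 2x, an illustrative mix of 36 INT32 operations per 100 FP32 operations gets about 1.47x, and an even mix gets no benefit at all, which is consistent with the thread's observation that real workloads see far less than the advertised doubling.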
