Important: hardware and app deprecation for 2015 / ha-ha thread!


MJH · Joined: 12 Nov 07 · Posts: 696 · Credit: 27,266,655 · RAC: 0

You can see from our live performance data (http://www.gpugrid.net/graphs/trend-cc-scaled.svg), which breaks down our throughput by compute capability, that GeForce 400 and 500 series cards (red, green) still contribute a bit more than 10% of our work. We'll probably not discontinue support until that share falls below 5% or NVIDIA deprecates support in the compiler, whichever comes first.

Matt
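Matt's rule is an either/or trigger. As a minimal sketch (the function and parameter names are hypothetical, not GPUGRID code), it could be written like this, with the throughput share read off the trend-cc-scaled graph:

```python
def should_drop_support(throughput_share: float, compiler_supports_arch: bool,
                        threshold: float = 0.05) -> bool:
    """Return True once either stated trigger for dropping an architecture fires.

    throughput_share: fraction (0.0-1.0) of project throughput from the architecture.
    compiler_supports_arch: whether NVIDIA's current CUDA compiler still targets it.
    threshold: the "<5%" cut-off from the post (hypothetical parameter name).
    """
    # "Whichever comes first": either condition alone is enough.
    return throughput_share < threshold or not compiler_supports_arch


if __name__ == "__main__":
    # 0.11 is a stand-in for "a bit more than 10%", not a measured value.
    print(should_drop_support(0.11, True))   # False: keep supporting Fermi
    print(should_drop_support(0.04, True))   # True: share fell below 5%
    print(should_drop_support(0.11, False))  # True: compiler dropped the arch
```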

Joined: 28 Jul 12 · Posts: 819 · Credit: 1,591,285,971 · RAC: 0

What a lot of you don't realize is that every day there are fewer and fewer people using BOINC. Maybe users are stable on GPUGRID, but overall people seem to get bored, even long-time users, and eventually stop running BOINC. GPUGRID (and others) have maintained a high degree of backward compatibility, so if people are leaving, it is not for that reason. And if they do leave, it is the ones with the least efficient cards. What the BOINC projects should be doing is keeping the users with the most efficient cards happy.

Joined: 9 Mar 09 · Posts: 2 · Credit: 16,719,078 · RAC: 0

Hi folks, I'm running a GTX 660 here; I hope it's still OK. It runs 24/7, but if need be, depending on pricing, what should I get? I play a few games, but I take it most good crunching cards are very good to excellent gaming cards anyway, a win-win situation. If my card is still supported, I will wait until after Christmas, since I'm planning to build a brand-new system anyway. My current setup is a socket 775 Xeon quad-core @ 3.6 GHz and one GTX 660. I might just add a new card and run two cards for crunching!

Take care, folks!
Sean

Joined: 28 Jul 12 · Posts: 819 · Credit: 1,591,285,971 · RAC: 0

> Hi Folks, running a 660GTX here, I hope it's still ok.

That is a Kepler card, and it should be fine for several more years since it can run CUDA 6.5, which is the latest version. As for the future, the Maxwell cards are the way to go: currently the GTX 750/750 Ti and the GTX 970/980. But by the time you need to buy anything, there will be many others out.

Joined: 17 Aug 08 · Posts: 2705 · Credit: 1,311,122,549 · RAC: 0

Qax, you do have a point there. In BOINC, "user motivation" may actually be the most important aspect of all. However, I think the project staff made it clear that they're not deprecating the old app / GPU support to push power or monetary efficiency, but rather to keep development effort in check (I think you're well aware of this).

MrS
Scanning for our furry friends since Jan 2002

Joined: 9 Mar 09 · Posts: 2 · Credit: 16,719,078 · RAC: 0

Well then, I guess I'm set for a little while still. Thanks; I hope to output more results this coming year.

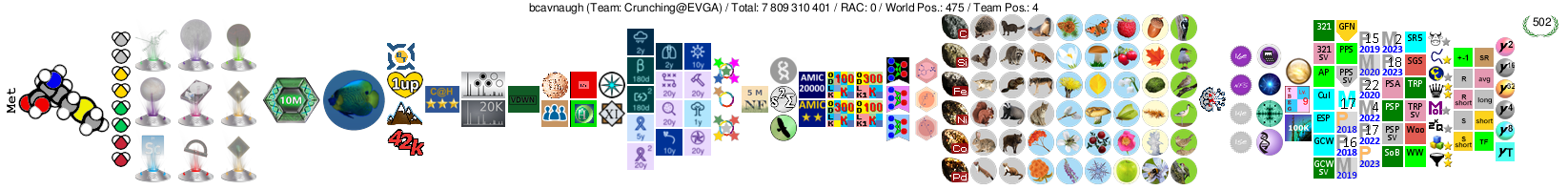

bcavnaugh · Joined: 8 Nov 13 · Posts: 56 · Credit: 1,002,640,163 · RAC: 0

Are you going to update the GPU model list? From what I can tell, any GTX 6xx and above will still be supported?

Thanks,
bcavnaugh
Crunching@EVGA: The Number One Team in the BOINC Community. Folding@EVGA: The Number One Team in the Folding@Home Community.

Joined: 4 Apr 11 · Posts: 11 · Credit: 130,184,303 · RAC: 0

I was very dismayed by the announcement that you plan to no longer support NVIDIA GPUs using the 340 driver. I am a long-time BOINC supporter and a recent GPUGRID supporter. I've recently completed testing of a Linux cluster for my home that I expect will be idle most of the time other than running BOINC jobs. This cluster is now running about 500 GFLOPS on CPUs and 7000 GFLOPS on NVIDIA GPUs. These GPUs are NVIDIA Tesla C1060 cards and GTX 770 cards. They are not the fastest GPUs, but they are able to run your Long tasks in about 24 hours, which is about half as fast as you claim the fastest cards can run them.

The Tesla C1060 cards are not supported by the 343 drivers for Linux. NVIDIA's 343 driver is also buggy and on Ubuntu fails on most cards, including my GTX 770s, so I am forced to use the 340 driver on all my machines. I am very interested in the research you are doing and would very much like to continue working on your BOINC tasks beyond the end of 2014. If you proceed with your plans to drop support for the 340 drivers, you will lose not only my machines but most Ubuntu Linux users as well. Are you sure that the performance improvements you will gain on the newest cards by changing to 343 will offset the loss of all Linux users?

These Tesla C1060 cards are now being sold on the used market for under $75, so hobbyists are just now beginning to have access to them, and they are fast and support double precision as well. Windows users with these Tesla cards will be forced to stay with the 340 driver too. I'm sure that BOINC projects like SETI and POEM are hoping that you proceed with your plan, as they will inherit many GFLOPS from users like me. I currently run their tasks when I cannot get work from your project, which in 2015 will be all the time.

Thank you,
Greg Tippitt
BOINC GTIPPITT - Team Leader for STARFLEET - Star Trek Fan Association

Joined: 9 May 13 · Posts: 171 · Credit: 4,594,296,466 · RAC: 171

Greg, sorry to hear about your bad luck with the NVIDIA 343 drivers. I would like to say, though, that I am running Ubuntu 14.04, a GTX 770 GPU, and the NVIDIA 343.22 drivers, and everything seems to be working just fine. Most long units complete in the 8-10 hour range. What kind of problems are you having with the 343 drivers on the GTX 770 cards?

MJH · Joined: 12 Nov 07 · Posts: 696 · Credit: 27,266,655 · RAC: 0

Dear Greg,

Quite apart from the driver issue, the C1060 is a compute capability 1.3 device, and we intend to drop support for these entirely. As you'll see from http://www.gpugrid.net/graphs/trend-cc-scaled.svg, cc 1.3 devices, represented by the blue sliver, constitute less than 1% of our throughput. I hope you'll continue to crunch with the 770s, which will give you better performance and a lower power bill. I'm sure one of the other volunteers will be able to help you with your driver problems.

Matt
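To see where a given card falls under the policy described in this thread, you can compare its compute capability against the cut-offs Matt mentions: cc 1.3 dropped outright, and Fermi (cc 2.x, GeForce 400/500) kept until its share falls below 5% or the compiler drops it. The sketch below hard-codes the compute capabilities of cards named in the thread (from NVIDIA's published specifications); the `support_status` helper and its labels are a hypothetical illustration, not GPUGRID's server-side logic.

```python
# Compute capability (major, minor) of cards mentioned in this thread.
COMPUTE_CAPABILITY = {
    "Tesla C1060": (1, 3),  # GT200
    "GTX 550 Ti": (2, 1),   # Fermi
    "GTX 660": (3, 0),      # Kepler
    "GTX 770": (3, 0),      # Kepler
    "GTX 750 Ti": (5, 0),   # Maxwell, first generation
    "GTX 970": (5, 2),      # Maxwell, second generation
    "GTX 980": (5, 2),      # Maxwell, second generation
}


def support_status(card: str) -> str:
    """Classify a card by the cut-offs described in the thread (illustrative only)."""
    major, minor = COMPUTE_CAPABILITY[card]
    if (major, minor) < (2, 0):
        return "dropped"    # cc 1.x, e.g. the C1060 (cc 1.3)
    if major == 2:
        return "at risk"    # Fermi: kept until share < 5% or compiler drops it
    return "supported"      # Kepler and Maxwell


if __name__ == "__main__":
    for card, (major, minor) in COMPUTE_CAPABILITY.items():
        print(f"{card}: cc {major}.{minor} -> {support_status(card)}")
```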

Joined: 17 Feb 13 · Posts: 181 · Credit: 144,871,276 · RAC: 0

Hi, Matt,

Many thanks for your response. I have attempted to update my drivers as you suggested, but without success: Win7 reports that my drivers are up to date. I will search further...

John

Retvari Zoltan · Joined: 20 Jan 09 · Posts: 2380 · Credit: 16,897,957,044 · RAC: 0

> Hi, Matt

John, Microsoft Update doesn't carry the latest third-party drivers. You should check for and download them directly from the vendor's website (http://www.nvidia.com, http://www.geforce.com), or get the latest driver with the vendor's updater application (GeForce Experience).

Joined: 17 Aug 08 · Posts: 2705 · Credit: 1,311,122,549 · RAC: 0

Greg, I hope this Linux driver mess can somehow be sorted out. Maybe the confusion appears when you're mixing C1060s and GTX 770s? Would it help to separate them and keep only the boxes with GTX 770s on GPUGRID?

BTW: Tesla C1060s use the GT200 chip from 2008. They may be cheap now, but I have a really hard time coming up with any scenario where they would actually be a better choice than current offerings. The only one that comes to my mind would be software that insists on running on a Tesla but refuses a GeForce.

For SP performance: the C1060 provides 622 GFlops (MAD) at 190 W TDP, whereas a GTX 750 Ti provides 1300 GFlops (MAD) at 60 W TDP. The 750 Ti should cost about $100 and quickly pay for itself via the electricity bill. In Germany it would take only about 1.5 months of sustained load to make up for the roughly 30 $/€ higher purchase cost.

For DP performance: the C1060 provides 77 GFlops (FMA). All newer GeForce cards support DP, but their DP performance is heavily crippled. Only Titan and more recent Teslas or Quadros would be worth running in DP mode, or high-end AMD GPUs. Anyway, even an old Fermi card like a mainstream GTX 550 Ti almost matches this performance (58 GFlops DP FMA, 120 W TDP, released in 2011 at $150), whereas bigger cards easily surpass it.

From my point of view: if you pay anything at all for electricity, you'll probably do yourself a favor by removing or replacing those old GPUs. There's a good reason they are so cheap nowadays.

MrS
Scanning for our furry friends since Jan 2002
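The efficiency argument above can be checked with a few lines of arithmetic. This sketch uses TDP as a proxy for actual power draw and assumes 24/7 load over a 30-day month. The German electricity price of 0.25 €/kWh is an assumption, not a figure from the thread; 0.10 $/kWh is the Tennessee price Greg quotes later on.

```python
def gflops_per_watt(gflops: float, tdp_watts: float) -> float:
    """Peak throughput per watt of TDP."""
    return gflops / tdp_watts


def payback_months(extra_purchase_cost: float, watts_saved: float,
                   price_per_kwh: float, hours_per_month: float = 24 * 30) -> float:
    """Months of sustained load until the electricity saved repays the extra cost.

    TDP is only a stand-in for real power draw, so treat the result as an estimate.
    """
    kwh_saved_per_month = watts_saved * hours_per_month / 1000.0
    return extra_purchase_cost / (kwh_saved_per_month * price_per_kwh)


if __name__ == "__main__":
    print(f"C1060:     {gflops_per_watt(622, 190):.1f} GFlops/W")   # 3.3
    print(f"GTX 750 Ti: {gflops_per_watt(1300, 60):.1f} GFlops/W")  # 21.7
    saved = 190 - 60  # watts, using TDP figures
    # Assumed German price (0.25 per kWh): about 1.3 months.
    print(f"Germany:   {payback_months(30, saved, 0.25):.1f} months")
    # Tennessee price from the thread (0.10 per kWh): about 3.2 months.
    print(f"Tennessee: {payback_months(30, saved, 0.10):.1f} months")
```

Under these assumptions the German payback comes out at roughly 1.3 months, in the same ballpark as the ~1.5 months quoted above; the result is driven mostly by the electricity price and by how close real power draw is to TDP.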

Joined: 17 Feb 13 · Posts: 181 · Credit: 144,871,276 · RAC: 0

Thanks, Zoltan. Hi, Matt

Joined: 25 Nov 13 · Posts: 66 · Credit: 282,724,028 · RAC: 0

I have problems with the drivers from the NVIDIA site because of the Optimus system. Also, no driver newer than 331.38 in the *.deb repositories works with BOINC, so I started crunching other projects. For example, Collatz gives good credit, but for what? For a result file of less than half a kilobyte. :D And for a mathematical problem whose usefulness I don't know.

Joined: 4 Apr 11 · Posts: 11 · Credit: 130,184,303 · RAC: 0

Matt, good luck with your new software development. I will return to other BOINC projects when my 7 TeraFLOPS are no longer supported by GPUGRID. This summer I started running GPUGRID tasks because of a shortage of GPU tasks on the SETI@Home and POEM@Home projects, but they are back up now with plenty of tasks. I continued running your tasks because I was looking for medical tasks. You also dramatically inflate credit compared to most other projects. For example, I got 300,000 credits today from GPUGRID, while other projects give me half that much credit per day on average. GPUGRID gives the most credit per runtime of any project I've found except Collatz, and certainly more credit than any project doing research on medicine, climate, or cosmology that I've found.

Thanks,
Greg

CaptainJack, I'm using the same 3.13.0-39-generic kernel as you. Is yours 32 or 64 bit? I'm not mixing the Tesla cards and the GTX 770s; I only have a single GPU per motherboard. My cluster uses quad-CPU SuperMicro H8QME-2 motherboards that have only a single x16 slot and lots of (nearly useless) PCI-X slots. For CPU tasks they are great, with 24 AMD 2.4 GHz cores. There is lots of talk on other BOINC forums about the Tesla C1060 cards being overpriced versions of cheaper consumer video cards. I don't know a lot about them, other than that they complete work units faster than the much newer and more expensive GTX 770s I've bought. I've got the 770s installed in my home theatre PC and my desktop PC, where I need the video capabilities of the card.

After seeing the notice in BOINCMGR, I gave the 64-bit 343 drivers from NVIDIA's website a test on a newer machine I was working on. It has a newer SuperMicro H8QM3-2s motherboard with more PCIe slots. I'm using a pair of GTX 770 cards in this motherboard and no Tesla cards, running 64-bit Ubuntu 14.04 with all updates. With the NVIDIA 343 drivers the X server won't start, but the system runs fine with the 340 release.

I live in the state of Tennessee in the US, where we are fortunate to have more affordable electricity than many other places, as a legacy of the 1940s TVA hydroelectric construction projects along the many lakes built on the Tennessee River for flood control and power generation. I pay about 10 cents per kilowatt-hour. I probably won't be buying any more new hardware, as I am far further past my sell-by date than my hardware is. I'm old enough that when I started programming in FORTRAN IV, supercomputers were round instead of square.

I got the hardware for my cluster as a cheap bunch of untested junk, much of which would not work. It was damaged stuff from a local PC recycling center that had been discarded by the nearby Oak Ridge National Laboratory, where they keep some of our biggest supercomputers. I've been working on this cluster as a hobby to see if I could build a supercomputer from garbage. I hadn't run it much until the weather turned cool. The cost to run the cluster this winter will be offset greatly by it heating my home. I've now gotten it to more than 150 CPU cores and 1500 GPU cores. Now that I've almost finished the hardware, I'm going to start on some parallel software ideas I wanted to play with. When I'm not using it for anything, I'll have it running BOINC tasks. It's now a bookshelf full of stuff that could win an award for the ugliest computer ever built.

Thanks,
Greg

Joined: 9 May 13 · Posts: 171 · Credit: 4,594,296,466 · RAC: 171

Greg, I'm using 64-bit and the NVIDIA driver installation method posted here: http://www.gpugrid.net/forum_thread.php?id=3713&nowrap=true#36671 If you scroll down a few more posts, skgiven has added some additional tips. It sounds like you have an interesting project going on there.

Joined: 17 Aug 08 · Posts: 2705 · Credit: 1,311,122,549 · RAC: 0

sis651, I'm not very fond of Collatz either. But not because of the small result file; rather, because we cannot prove the Collatz conjecture by trying all numbers, since frankly there are infinitely many of them. We could disprove it by finding a single number for which it doesn't hold. But by now we have pushed so far without finding a single counterexample that it's probably true. You may want to try Einstein@Home instead. It doesn't yield as many credits as other GPU projects, but many people like its science. It would also be nice if this driver issue could be sorted out, but honestly I wouldn't expect anything in this regard with Linux combined with Optimus.

Greg, it sounds like you know very well what you're doing :) Just one more remark: if you say the C1060s are completing WUs faster than the GTX 770s, are you using double precision? Apart from weird software issues or a far too slow CPU feeding the GPU, that should be the only circumstance where this could be possible.

MrS
Scanning for our furry friends since Jan 2002
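The point about proof versus disproof is easy to see in code. The sketch below checks starting values up to a limit under the usual rule (halve even numbers, map odd n to 3n + 1). A starting value that never reaches 1, approximated here by hitting a step cap or revisiting a value, would be a candidate counterexample; a clean result says nothing about the infinitely many larger numbers. The cap of 10,000 steps and the helper names are arbitrary choices for illustration.

```python
from typing import Optional


def collatz_steps(n: int, max_steps: int = 10_000) -> Optional[int]:
    """Steps for n to reach 1 under n -> n // 2 (even) or 3n + 1 (odd).

    Returns None if 1 is not reached within max_steps or if a value repeats
    (which would mean a cycle other than 4 -> 2 -> 1). Either outcome flags a
    candidate counterexample worth a closer look.
    """
    seen = set()
    steps = 0
    while n != 1:
        if n in seen or steps >= max_steps:
            return None
        seen.add(n)
        n = n // 2 if n % 2 == 0 else 3 * n + 1
        steps += 1
    return steps


def first_suspect(limit: int) -> Optional[int]:
    """Check starting values 1..limit; return the first failure, else None.

    One failure could disprove the conjecture; no failure proves nothing
    about the numbers above `limit`.
    """
    for n in range(1, limit + 1):
        if collatz_steps(n) is None:
            return n
    return None


if __name__ == "__main__":
    print(collatz_steps(27))      # 111
    print(first_suspect(10_000))  # None: no counterexample up to 10,000
```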

Joined: 4 Apr 11 · Posts: 11 · Credit: 130,184,303 · RAC: 0

Jack and ET-Ape, I don't see what the size of the result file has to do with how useful a project is, as long as the result is not "42". To me, some of the BOINC projects do seem like a waste of electricity, but others might say that about SETI@Home, which I've supported since the original "pre-BOINC" days. I run work for several projects: SETI, medical research, climate change, and cosmology. I run both MilkyWay and Einstein.

I was wearing a MilkyWay@Home T-shirt last week, and a guy in my cardiologist's waiting room struck up a conversation with me about BOINC. He was talking about how some projects seem frivolous, and he didn't see the point of the one for rendering animations. I told him to download and watch the Big Buck Bunny video. I explained that students trying to learn and practice high-end animation skills don't have access to supercomputers. Steve Jobs made his fortune with Pixar, because George Lucas sold him Pixar cheap. Jobs realized that the reason Pixar's software wasn't working was that they were running it on an old DEC VAX mainframe. After he bought Pixar, he replaced the old mainframe the artists were sharing with Silicon Graphics workstations for each artist. The software then worked fine, and they finished the original Toy Story movie. An example of Jobs's marketing genius was that he held the IPO for Pixar stock a week after the movie opened. Rendering 4K 3D animations takes lots of computing power, so students with a desktop PC can use BOINC to render their projects, just as researchers needing data analysis can.

Thanks for the link about getting the drivers working; I'll take a look. I'm in the process of getting the machine I use as my desktop to run a virtual machine that will be part of my cluster as well.

I've just started testing the GPUGRID CPU tasks. They run really cool on these nodes. Each motherboard has 24 cores, but I limit BOINC to running only 20 CPU jobs at a time. That leaves cores for feeding the GPU job, system overhead, and I/O overhead. The GPUGRID tasks for CPU (and MilkyWay N-Body tasks) will grab all 20 of the CPU cores and start grinding away on a task. The machine I'm working on now has more PCIe slots, so I'm trying both GTX 770 cards and a Tesla card in the same machine. The Tesla card is 50% faster than the GTX 770 card. When Matt makes the changes, I can continue to run the GPUGRID jobs for CPU and let POEM and SETI use the GPUs.

- NVIDIA GPU 0: Tesla T10 Processor (driver version 340.32, device version OpenCL 1.0 CUDA, 4096MB, 4041MB available, 933 GFLOPS peak)
- NVIDIA GPU 1: GeForce GTX 770 (driver version 340.32, device version OpenCL 1.0 CUDA, 2048MB, 1984MB available, 624 GFLOPS peak)
- NVIDIA GPU 2: GeForce GTX 770 (driver version 340.32, device version OpenCL 1.0 CUDA, 2048MB, 1984MB available, 624 GFLOPS peak)

Since the motherboards are too large for most cases, I've got them in a bookshelf, held in place with nylon wire ties and Duck Tape. Here is a picture of what I call my Cluster Duck.
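A 20-of-24-core limit like Greg's is usually set through BOINC's computing preferences, which express it as "use at most N% of the CPUs" (`max_ncpus_pct` in `global_prefs_override.xml`) rather than as a core count. The sketch below finds the smallest whole percentage that yields 20 cores on a 24-core board, *assuming* the client rounds the fractional core count down and never goes below one core; that rounding behaviour is an assumption to verify against your client version, and the helper names are hypothetical.

```python
import math


def cores_used(total_cores: int, pct: float) -> int:
    # ASSUMPTION: the client truncates total_cores * pct / 100 and uses at least 1 core.
    return max(1, math.floor(total_cores * pct / 100))


def min_whole_pct_for(target_cores: int, total_cores: int) -> int:
    """Smallest whole-number percentage giving at least target_cores cores."""
    for pct in range(1, 101):
        if cores_used(total_cores, pct) >= target_cores:
            return pct
    return 100


if __name__ == "__main__":
    pct = min_whole_pct_for(20, 24)
    print(pct, cores_used(24, pct))  # 84 20
    print(cores_used(24, 83))        # 19, since 24 * 0.83 = 19.92 rounds down
```

Under the truncation assumption, entering 83% would leave only 19 cores busy, which is why the percentage has to be rounded up rather than to the nearest value.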

Joined: 27 May 14 · Posts: 4 · Credit: 104,056,992 · RAC: 0

If you download the NVIDIA GeForce Experience tool, it will keep you up to date on driver releases from NVIDIA. I don't get my drivers from anyone but NVIDIA; the third-party manufacturers just ship the driver NVIDIA provides anyway.

©2025 Universitat Pompeu Fabra