acemdbeta application - discussion

Joined: 11 Jul 09 · Posts: 1639 · Credit: 10,159,968,649 · RAC: 351

OK, now I'm awake: I've checked the logs for those two tasks, and the sequence of events is as I surmised.

`20-Nov-2013 16:50:15 [---] NOTICES::write: sending notice 6`

That was the host crash interval.

`20-Nov-2013 16:43:15 [GPUGRID] Starting task 1-KLAUDE_6429-1-2-RND1937_0 using acemdbeta version 815 (cuda55) in slot 7`

That task crashed, but in a 'benign' way (it didn't take the driver down with it).

`20-Nov-2013 16:35:47 [GPUGRID] Starting task 95-KLAUDE_6429-0-2-RND2489_1 using acemdbeta version 815 (cuda55) in slot 3`

And that task validated.
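The excerpt above is quoted newest first. A minimal sketch, using hypothetical helpers `parse_line`, `chronological` and `gaps_seconds` (the timestamp format is taken from the excerpt itself), that puts such lines back in time order and measures the gaps between them: the second task started 448 s after the first, and the notice followed 420 s later.

```python
from datetime import datetime

# Timestamp format as it appears in the excerpt, e.g. "20-Nov-2013 16:50:15".
TIME_FORMAT = "%d-%b-%Y %H:%M:%S"

# The three event-log lines quoted above, in the order they were posted (newest first).
LOG_LINES = [
    "20-Nov-2013 16:50:15 [---] NOTICES::write: sending notice 6",
    "20-Nov-2013 16:43:15 [GPUGRID] Starting task 1-KLAUDE_6429-1-2-RND1937_0 using acemdbeta version 815 (cuda55) in slot 7",
    "20-Nov-2013 16:35:47 [GPUGRID] Starting task 95-KLAUDE_6429-0-2-RND2489_1 using acemdbeta version 815 (cuda55) in slot 3",
]


def parse_line(line):
    """Hypothetical helper: split a line into (timestamp, message); date and time come first."""
    date, clock, message = line.split(" ", 2)
    return datetime.strptime(f"{date} {clock}", TIME_FORMAT), message


def chronological(lines):
    """Hypothetical helper: (timestamp, message) pairs sorted oldest first."""
    return sorted(parse_line(line) for line in lines)


def gaps_seconds(events):
    """Hypothetical helper: seconds between each event and the next one."""
    return [(later[0] - earlier[0]).total_seconds() for earlier, later in zip(events, events[1:])]


if __name__ == "__main__":
    events = chronological(LOG_LINES)
    for stamp, message in events:
        print(stamp.isoformat(sep=" "), message)
    print("gaps (s):", gaps_seconds(events))
```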

Damaraland · Joined: 7 Nov 09 · Posts: 152 · Credit: 16,181,924 · RAC: 0

This unit, 3-KLAUDE_6429-0-2-RND6465, worked fine for me after it had failed on 6 computers before. Maybe this can help? If you need any further configuration details, just ask.

Joined: 26 Feb 12 · Posts: 184 · Credit: 222,376,233 · RAC: 0

All KLAUDE tasks failed here after 2 seconds. I found this in the BOINC event log:

`11/23/2013 9:28:11 PM | GPUGRID | Output file 91-KLAUDE_6444-0-3-RND9028_1_1 for task 91-KLAUDE_6444-0-3-RND9028_1 absent`
`11/23/2013 9:28:11 PM | GPUGRID | Output file 91-KLAUDE_6444-0-3-RND9028_1_2 for task 91-KLAUDE_6444-0-3-RND9028_1 absent`
`11/23/2013 9:28:11 PM | GPUGRID | Output file 91-KLAUDE_6444-0-3-RND9028_1_3 for task 91-KLAUDE_6444-0-3-RND9028_1 absent`
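When a task dies within seconds, it most likely never wrote its output files, so "absent" lines like these are a symptom rather than the cause; the underlying error is more likely to be in the task's stderr. A minimal sketch, with a hypothetical `absent_outputs` helper whose regex assumes exactly the message format quoted above, that groups such lines by task:

```python
import re
from collections import defaultdict

# Matches the event-log message quoted above: "Output file <file> for task <task> absent".
ABSENT = re.compile(r"Output file (\S+) for task (\S+) absent")


def absent_outputs(log_text):
    """Hypothetical helper: map each task name to the output files reported as absent."""
    by_task = defaultdict(list)
    for line in log_text.splitlines():
        match = ABSENT.search(line)
        if match:
            output_file, task = match.groups()
            by_task[task].append(output_file)
    return dict(by_task)


LOG = """\
11/23/2013 9:28:11 PM | GPUGRID | Output file 91-KLAUDE_6444-0-3-RND9028_1_1 for task 91-KLAUDE_6444-0-3-RND9028_1 absent
11/23/2013 9:28:11 PM | GPUGRID | Output file 91-KLAUDE_6444-0-3-RND9028_1_2 for task 91-KLAUDE_6444-0-3-RND9028_1 absent
11/23/2013 9:28:11 PM | GPUGRID | Output file 91-KLAUDE_6444-0-3-RND9028_1_3 for task 91-KLAUDE_6444-0-3-RND9028_1 absent
"""

if __name__ == "__main__":
    for task, files in absent_outputs(LOG).items():
        print(f"{task}: {len(files)} output file(s) missing")
```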

Joined: 28 Mar 09 · Posts: 490 · Credit: 11,731,645,728 · RAC: 57

"All KLAUDE failed here after 2 seconds. Found this in the BOINC event log."

Same here!

Joined: 16 Mar 11 · Posts: 509 · Credit: 179,005,236 · RAC: 0

I had several KLAUDE tasks fail after I configured my system to crunch 2 GPUGRID tasks simultaneously on my single 660 Ti. They failed ~2 s after starting, were reported as "error while computing", and did not validate. I then turned off beta tasks in my website preferences and received a NATHAN, which ran fine alongside the KLAUDE I had run to ~50% completion before trying 2 simultaneous tasks. I do understand GPUGRID does not support 2 tasks on 1 GPU, and I'm not expecting a fix for that; I'm just passing along what I saw, FWIW, as I find it interesting that 2 KLAUDEs would not run together but 1 KLAUDE + 1 NATHAN was OK, and now 1 NATHAN plus 1 SANTI are crunching fine. I expect I'll witness what others have reported (that 2 tasks don't give much of a production increase), but I want to try it for a day or 2 just to say I tried it. At that point I'll likely turn beta tasks on again and revert to just 1 task at a time.

BOINC <<--- credit whores, pedants, alien hunters
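The post doesn't say how the two-tasks-per-GPU setup was made. One common route with BOINC 7 clients is an `app_config.xml` file in the project's directory, where `gpu_usage` is the fraction of a GPU each task claims (0.5 lets two tasks share one card). The sketch below only generates that XML with a hypothetical `app_config_xml` helper; the app name `acemdlong` is an assumption (the real short name is listed in `client_state.xml`), and, as noted above, GPUGRID does not support this setup.

```python
import xml.etree.ElementTree as ET


def app_config_xml(app_name, tasks_per_gpu, cpu_usage=1.0):
    """Hypothetical helper: build an app_config.xml body for a single BOINC application.

    app_name is an assumption; check the app's <name> in client_state.xml.
    """
    if tasks_per_gpu < 1:
        raise ValueError("tasks_per_gpu must be at least 1")
    root = ET.Element("app_config")
    app = ET.SubElement(root, "app")
    ET.SubElement(app, "name").text = app_name
    gpu = ET.SubElement(app, "gpu_versions")
    # Each task claims this fraction of a GPU, so 0.5 lets two tasks share one card.
    ET.SubElement(gpu, "gpu_usage").text = f"{1 / tasks_per_gpu:g}"
    # CPU budgeted per GPU task when BOINC schedules work; it does not cap actual CPU use.
    ET.SubElement(gpu, "cpu_usage").text = f"{cpu_usage:g}"
    return ET.tostring(root, encoding="unicode")


if __name__ == "__main__":
    # "acemdlong" is an assumed app name for the long-run queue.
    print(app_config_xml("acemdlong", tasks_per_gpu=2))
```

Going back to one task per GPU means setting `tasks_per_gpu=1` (that is, `gpu_usage` of 1) or deleting the file, then having the client re-read its config files.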

Joined: 11 Jul 09 · Posts: 1639 · Credit: 10,159,968,649 · RAC: 351

It looks as if there was a bad batch of KLAUDE workunits overnight, all of which failed with

`ERROR: file mdioload.cpp line 209: Error reading parmtop file`

That includes yours, Dagorath. I don't think you can conclude that running two at a time had anything to do with the failures.
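The conclusion above rests on every failure carrying the same error signature, whatever the host or GPU, which points at the workunits rather than at anyone's configuration. A minimal sketch of that check, using hypothetical helpers `error_signature` and `tally`; the regex assumes exactly the `ERROR: file ... line ...:` format quoted above.

```python
import re
from collections import Counter

# Matches lines like "ERROR: file mdioload.cpp line 209: Error reading parmtop file".
ERROR_LINE = re.compile(r"ERROR: file (\S+) line (\d+): (.+)")


def error_signature(stderr):
    """Hypothetical helper: reduce a task's stderr to 'source:line message', or None."""
    match = ERROR_LINE.search(stderr)
    if match is None:
        return None
    source, line, message = match.groups()
    return f"{source}:{line} {message.strip()}"


def tally(stderrs):
    """Hypothetical helper: count failed tasks per error signature."""
    return Counter(sig for sig in map(error_signature, stderrs) if sig is not None)


if __name__ == "__main__":
    failed = "ERROR: file mdioload.cpp line 209: Error reading parmtop file\ncalled boinc_finish\n"
    print(tally([failed, failed, "no error here"]).most_common())
```

If one signature accounts for nearly every failure across many hosts, a bad batch is the likeliest explanation.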

(retired account) · Joined: 22 Dec 11 · Posts: 38 · Credit: 28,606,255 · RAC: 0

Yes, same here: lots of errors overnight. Luckily, the Titan had already hit its max-per-day limit of 15.

Mark my words and remember me. - 11th Hour, Lamb of God

skgiven · Joined: 23 Apr 09 · Posts: 3968 · Credit: 1,995,359,260 · RAC: 0

Yeah, bad batch. Workunit 57-KLAUDE_6444-0-3-RND0265 (ACEMD beta version):

| Task | Computer | Sent | Time reported or deadline | Status | Run time (sec) | CPU time (sec) | Credit | Application |
|---|---|---|---|---|---|---|---|---|
| 7485150 | 129153 | 24 Nov 2013, 0:53:30 UTC | 24 Nov 2013, 0:55:10 UTC | Error while computing | 2.14 | 0.08 | --- | ACEMD beta version v8.15 (cuda42) |
| 7487860 | 159186 | 24 Nov 2013, 2:18:25 UTC | 24 Nov 2013, 5:58:30 UTC | Error while computing | 2.06 | 0.04 | --- | ACEMD beta version v8.14 (cuda55) |
| 7488724 | 99934 | 24 Nov 2013, 6:00:24 UTC | 24 Nov 2013, 6:06:35 UTC | Error while computing | 1.30 | 0.13 | --- | ACEMD beta version v8.15 (cuda55) |
| 7488745 | 160877 | 24 Nov 2013, 6:08:47 UTC | 24 Nov 2013, 6:14:58 UTC | Error while computing | 2.08 | 0.25 | --- | ACEMD beta version v8.15 (cuda55) |
| 7488772 | 161748 | 24 Nov 2013, 6:17:42 UTC | 29 Nov 2013, 6:17:42 UTC | In progress | --- | --- | --- | ACEMD beta version v8.15 (cuda55) |

...

It's the same on Windows and Linux, and the errors occur on different generations of GPU, from GTX 400s to GTX 700s:

`Exit status 98 (0x62) Unknown error number`
`process exited with code 98 (0x62, -158)`
`ERROR: file mdioload.cpp line 209: Error reading parmtop file`
`05:54:54 (21170): called boinc_finish`

I expect this batch just wasn't built correctly. I see that some WUs have already failed 8 times (the cutoff failure point), so they won't be resent. Given that they fail after 2 seconds, and there are only ~130 of these betas, the batch probably won't be around for long.

FAQ's HOW TO: - Opt out of Beta Tests - Ask for Help
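The line `process exited with code 98 (0x62, -158)` packs three views of one number. As far as we can tell, the third is the code with every bit above the low byte set, `(~0xff) | code`, which for codes 0-255 equals code - 256, so 98 - 256 = -158. The sketch below uses hypothetical helpers and reflects our reading of the log format, not documented BOINC behaviour; it also encodes the 8-error cutoff mentioned above, whose actual per-project value may differ.

```python
def exit_code_forms(code):
    """Hypothetical helper: the three numbers in 'process exited with code N (0xH, S)'.

    S is assumed to be (~0xff) | code, i.e. code - 256 for codes 0-255.
    """
    return code, hex(code), (~0xFF) | code


# Cutoff quoted in the post above; the project's real setting may differ.
MAX_ERROR_RESULTS = 8


def will_be_resent(error_count, max_errors=MAX_ERROR_RESULTS):
    """Hypothetical helper: a workunit stops being reissued once its errors reach the cutoff."""
    return error_count < max_errors


if __name__ == "__main__":
    print(exit_code_forms(98))
    print([n for n in range(10) if not will_be_resent(n)])
```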

Joined: 16 Mar 11 · Posts: 509 · Credit: 179,005,236 · RAC: 0

"It looks as if there was a bad batch of KLAUDE workunits overnight, all of which failed with ..."

That's good to know, thanks.

MJH · Joined: 12 Nov 07 · Posts: 696 · Credit: 27,266,655 · RAC: 0

Yes, my fault. I put out some broken WUs on the beta channel.

Matt

Joined: 26 Jun 09 · Posts: 815 · Credit: 1,470,385,294 · RAC: 0

And not only KLAUDE: a trypsin task has resulted in yet another fatal CUDA driver error, and the card downclocked to as little as a third of its normal clock speed. I know trypsin is nasty stuff, as its purpose is to "break down" molecules, but that it can also "break down" a GPU clock is new to me :) So I have now opted for LR (long runs) only on the 660.

Greetings from TJ

skgiven · Joined: 23 Apr 09 · Posts: 3968 · Credit: 1,995,359,260 · RAC: 0

The Trp tasks are not part of the bad KLAUDE batch:

| Task | Work unit | Computer | Sent | Time reported or deadline | Status | Run time (sec) | CPU time (sec) | Credit | Application |
|---|---|---|---|---|---|---|---|---|---|
| trypsin_lig_75x2-NOELIA_RCDOS-0-1-RND0557_1 | 4940668 | 159186 | 24 Nov 2013, 8:14:31 UTC | 24 Nov 2013, 15:31:51 UTC | Completed and validated | 6,504.01 | 2,200.87 | 30,000.00 | ACEMD beta version v8.14 (cuda55) |

...and yes, trypsin is a very useful digestive enzyme.

Joined: 28 Mar 09 · Posts: 490 · Credit: 11,731,645,728 · RAC: 57

The latest NOELIA_RCDOSequ betas run fine, but they do downclock the video card to 914 MHz, not the 1019 MHz recorded in the stderr output below. Notice the temperature readings: they are in the 50s, not the 60s to low 70s I see when running the long tasks. This is true on both Windows XP and 7. Below is a typical output for all these betas. The cards do return to normal speed when they run the long runs.

Stderr output

    <core_client_version>7.0.64</core_client_version>
    <![CDATA[
    <stderr_txt>
    # GPU [GeForce GTX 690] Platform [Windows] Rev [3203M] VERSION [55]
    # SWAN Device 1 :
    # Name : GeForce GTX 690
    # ECC : Disabled
    # Global mem : 2048MB
    # Capability : 3.0
    # PCI ID : 0000:04:00.0
    # Device clock : 1019MHz
    # Memory clock : 3004MHz
    # Memory width : 256bit
    # Driver version : r325_00 : 32723
    # GPU 0 : 52C
    # GPU 1 : 51C
    # GPU 2 : 51C
    # GPU 3 : 49C
    # GPU 1 : 52C
    # GPU 1 : 53C
    # GPU 1 : 54C
    # GPU 1 : 55C
    # GPU 1 : 56C
    # GPU 1 : 57C
    # GPU 1 : 58C
    # GPU 3 : 51C
    # GPU 3 : 52C
    # GPU 3 : 53C
    # GPU 3 : 54C
    # GPU 3 : 55C
    # GPU 3 : 56C
    # GPU 2 : 53C
    # GPU 2 : 54C
    # GPU 2 : 55C
    # GPU 2 : 56C
    # GPU 2 : 57C
    # GPU 2 : 58C
    # GPU 0 : 53C
    # GPU 0 : 54C
    # GPU 0 : 55C
    # GPU 0 : 56C
    # GPU 0 : 57C
    # GPU 0 : 58C
    # Time per step (avg over 525000 steps): 5.742 ms
    # Approximate elapsed time for entire WU: 3014.682 s
    02:08:35 (2912): called boinc_finish
    </stderr_txt>
    ]]>
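The stderr above is regular enough to summarize mechanically. A minimal sketch with a hypothetical `summarize_stderr` helper (its regexes assume exactly the line formats shown) that pulls the peak temperature per GPU and cross-checks the two timing lines. On the full output above, the peaks are 58C for GPUs 0-2 and 56C for GPU 3, consistent with "in the 50s"; 525,000 steps × 5.742 ms ≈ 3014.55 s, close to the reported 3014.682 s since the per-step figure is a rounded average; and 914 MHz is about 90% of the 1019 MHz device clock.

```python
import re

# Patterns for the ACEMD stderr lines quoted above (assumed fixed format).
TEMP = re.compile(r"# GPU (\d+) : (\d+)C")
STEPS = re.compile(r"# Time per step \(avg over (\d+) steps\): ([\d.]+) ms")
ELAPSED = re.compile(r"# Approximate elapsed time for entire WU: ([\d.]+) s")


def summarize_stderr(text):
    """Hypothetical helper: peak temperature per GPU, reported and estimated run time (s)."""
    peaks = {}
    for gpu, temp in TEMP.findall(text):
        peaks[int(gpu)] = max(peaks.get(int(gpu), 0), int(temp))
    steps, ms_per_step = STEPS.search(text).groups()
    reported_s = float(ELAPSED.search(text).group(1))
    estimated_s = int(steps) * float(ms_per_step) / 1000.0
    return peaks, reported_s, estimated_s


# A shortened excerpt of the output above; the header line is not a temperature reading.
SAMPLE = """\
# GPU [GeForce GTX 690] Platform [Windows] Rev [3203M] VERSION [55]
# Device clock : 1019MHz
# GPU 0 : 52C
# GPU 1 : 51C
# GPU 1 : 58C
# GPU 0 : 58C
# Time per step (avg over 525000 steps): 5.742 ms
# Approximate elapsed time for entire WU: 3014.682 s
"""

if __name__ == "__main__":
    peaks, reported, estimated = summarize_stderr(SAMPLE)
    print(peaks, reported, round(estimated, 2))
    # Observed clock as a fraction of the device clock in the stderr header.
    print(f"914 / 1019 = {914 / 1019:.3f}")
```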

Joined: 11 Jul 09 · Posts: 1639 · Credit: 10,159,968,649 · RAC: 351

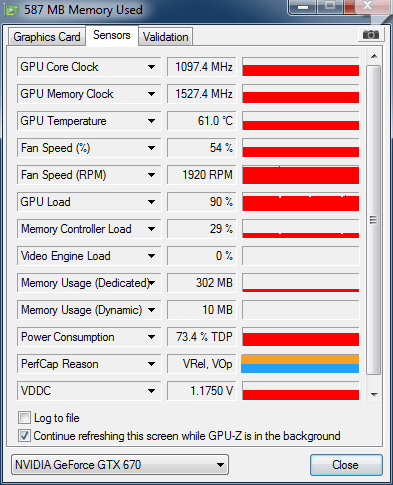

I see the latest GPU-Z gives a reason for performance capping:  That GTX 670 was capped for reliability voltage and operating voltage (it was running a SANTI_MAR423cap from the long queue at the time, not a Beta - just illustrating the point). |

Joined: 4 Apr 09 · Posts: 450 · Credit: 539,316,349 · RAC: 0

"I see the latest GPU-Z gives a reason for performance capping:"

<ot> I have GPU-Z 0.7.4 (which says it is the latest version) and I do not see that entry (I have a 670 too). Is that a beta version, and can you provide a link to where you got it from? </ot>

Thanks - Steve

skgiven · Joined: 23 Apr 09 · Posts: 3968 · Credit: 1,995,359,260 · RAC: 0

GPU-Z has been showing this for months. I'm still using 0.7.3 and it shows it, as did the previous version, and probably the one before that. I thought I posted about this several months ago?!? Anyway, it's a useful tool, but it only works on Windows. My GTX 660 Ti (which is hanging out of the case against a wall, courtesy of a riser) is limited by VRel and VOp (Reliability Voltage and Operating Voltage, respectively). My GTX 660 is limited by Power and Reliability Voltage. My GTX 770 is limited by Reliability Voltage and Operating Voltage. All in the same system. Of note: only the GTX 660 is limited by Power! Just saying...

Joined: 26 Jun 09 · Posts: 815 · Credit: 1,470,385,294 · RAC: 0

Yes, I have already seen that in previous versions of GPU-Z, just as skgiven says. My 660 and 770 are limited by power and reliability. When I changed the power setting, nothing changed, and the PSUs are powerful enough for the cards. I saw (and still see) it with beta, SR and LR tasks, from all scientists.

Greetings from TJ

Joined: 17 Aug 08 · Posts: 2705 · Credit: 1,311,122,549 · RAC: 0

If GPU-Z reports "VRel., VOp" as the throttling reason, this actually means the card is running at full throttle and has reached the highest boost bin. Since it would need a higher voltage for the next bin, it's reported as being throttled by voltage. Unless the power limit is set tightly or cooling is poor, this should be the default state of a Kepler crunching GPUGRID.

Bedrich wrote:
"but they do down clock the video card speed to 914 Mhz, not the 1019 Mhz speed as recorded on the Stderr output below. Notice the temperature readings, they are in the 50's, not the 60's to low 70's when I run the long tasks."

This sounds like the GPU utilization was low, in which case the driver doesn't see the need to push to full boost. In this case GPU-Z reports "Util" as the throttle reason, for "GPU utilization too low". This mostly happens with small / short WUs. Those are also the ones where running 2 concurrent WUs actually provides some throughput gain.

MrS
Scanning for our furry friends since Jan 2002
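The reading of the PerfCap reasons given above can be written as a small decision function. This is only a sketch: `interpret_perfcap` and `MEANINGS` are hypothetical, the labels VRel, VOp, Pwr and Util are as quoted in this thread, Thrm is an assumed label for a thermal cap, and the priority order is a simplification, not GPU-Z's own logic.

```python
# Hypothetical mapping: VRel, VOp, Pwr and Util as quoted in this thread;
# "Thrm" is an assumed label for a thermal cap.
MEANINGS = {
    "VRel": "reliability voltage limit",
    "VOp": "operating voltage limit",
    "Pwr": "power limit",
    "Thrm": "thermal limit",
    "Util": "GPU utilization too low",
}


def interpret_perfcap(reasons):
    """Hypothetical helper: rough reading of a set of GPU-Z PerfCap reasons."""
    caps = set(reasons)
    if not caps:
        return "no cap reported"
    if caps <= {"VRel", "VOp"}:
        # Only voltage caps: the next boost bin would need more voltage, so it is at the top bin.
        return "at top boost bin"
    if "Util" in caps:
        # Light load (e.g. small WUs): the driver sees no need for full boost.
        return "low utilization"
    if "Pwr" in caps:
        return "power limited"
    return "; ".join(MEANINGS.get(cap, cap) for cap in sorted(caps))


if __name__ == "__main__":
    # skgiven's three cards, as described earlier in the thread.
    for card, caps in [("GTX 660 Ti", {"VRel", "VOp"}),
                       ("GTX 660", {"Pwr", "VRel"}),
                       ("GTX 770", {"VRel", "VOp"})]:
        print(card, "->", interpret_perfcap(caps))
```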

©2025 Universitat Pompeu Fabra