Message boards : Number crunching : Unsent tasks decreasing much more slowly

I've noticed that the number of Unsent Tasks is decreasing at a much slower rate even though the number of tasks in progress is growing and the Current GigaFLOPS is approaching record levels.

ID: 54312

Toni prioritized some batches earlier, and those have run out. That is what made the number of unsent tasks decrease more rapidly.

ID: 54314

Thanks!

ID: 54317

On March 10th 2020, 17:39:16 UTC, Retvari Zoltan wrote in message #53884:

> I'm receiving many tasks which are the last one of their batch:

At this time, the number of unsent tasks is 243,556, as can be seen on the Server status page. The last tasks I'm currently receiving are similar to:

`3tekA00_320_3-TONI_MDADpr4st-8-10-RND9554_0`

As soon as the series reaches the 9-10 ones, the number of unsent tasks should decrease at a higher rate again... (?)

ID: 54579
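The task names in this thread appear to encode a position in a chain of sequential tasks (`8-10`, `9-10`). The sketch below assumes a layout of `<prefix>-<batch tag>-<step>-<total>-RND<digits>_<replica>`, inferred only from the single name quoted above, and extracts the two numbers. The regex and the `chain_position` helper are hypothetical, not part of any GPUGrid or BOINC tool.

```python
import re

# Hypothetical layout, inferred from one example name:
#   <prefix>-<batch tag>-<step>-<total>-RND<digits>_<replica>
NAME_RE = re.compile(
    r"^(?P<prefix>[^-]+)-(?P<tag>.+)-(?P<step>\d+)-(?P<total>\d+)-RND\d+_(?P<replica>\d+)$"
)


def chain_position(task_name: str) -> tuple[int, int]:
    """Return (step, total) parsed from a task name, e.g. (8, 10)."""
    match = NAME_RE.match(task_name)
    if match is None:
        raise ValueError(f"unrecognised task name: {task_name!r}")
    return int(match["step"]), int(match["total"])


if __name__ == "__main__":
    print(chain_position("3tekA00_320_3-TONI_MDADpr4st-8-10-RND9554_0"))  # (8, 10)
```

The thread's reasoning treats a `9-10` task as the last link of its chain, so returning it no longer creates a new unsent task.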

> As soon as the series reaches the 9-10 ones, the number of unsent tasks should decrease at a higher rate again... (?)

All the WUs I've received today are of this kind. The current reading is 242,563 unsent tasks. We will soon confirm or discard this assumption.

ID: 54604

> As soon as the series reaches the 9-10 ones, the number of unsent tasks should decrease at a higher rate again... (?)
>
> All the WUs I've received today are of this kind.

I'm sure that the number of unsent tasks will drop drastically in the next few days. The only question is how low it will go. That depends on the priority of the tasks in the queue. If it's uniform, the number of unsent tasks will drop to near 0, and only the tasks stuck on slow or inactive hosts will remain in the queue (~1,000 in this case). If there are tasks with lower priority than the ones we receive now, then we will receive those soon. We will know if that's the case, as they will have a low sequence number (for example 3-10), and the number of unsent tasks will remain high. I guess there are no lower priority tasks, so the number of unsent tasks will drop to near 0. The number of unsent tasks is 237,790 at the moment (-4,773, a ~2% drop in 3h 45m).

ID: 54607
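The figures in the last sentence can be checked with a few lines of arithmetic. A minimal sketch; `drop_stats` is a hypothetical helper, and the two readings are the ones quoted in the posts above.

```python
def drop_stats(before: int, after: int, minutes: float) -> tuple[int, float, float]:
    """Return (absolute drop, percent drop, tasks per minute) between two readings."""
    drop = before - after
    return drop, 100.0 * drop / before, drop / minutes


# Readings from the thread: 242,563 unsent, then 237,790 after 3h 45m.
drop, pct, rate = drop_stats(242_563, 237_790, 3 * 60 + 45)
print(f"-{drop:,} tasks ({pct:.2f}%), about {rate:.1f} tasks/minute")
# -4,773 tasks (1.97%), about 21.2 tasks/minute
```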

I prioritised tasks ending with _0 (e.g. `1gaxA04_348_0`) over the others (_1 to _4).

ID: 54608
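How this kind of prioritisation plays out can be sketched with a priority queue. This is a simplified model, not the GPUGrid or BOINC scheduler. It assumes that the `_0` Toni mentions is the last underscore-separated field of the name's first hyphen-separated part (as in `1gaxA04_348_0`), that `_0` tasks simply get a higher priority, and that tasks of equal priority go out in creation order. `priority`, `dispatch_order` and the name `1gaxA04_349_0` are hypothetical.

```python
import heapq


def priority(task_name: str) -> int:
    """Hypothetical rule: 1 if the batch prefix ends in _0, else 0."""
    prefix = task_name.split("-", 1)[0]  # e.g. "1gaxA04_348_0"
    return 1 if prefix.rsplit("_", 1)[-1] == "0" else 0


def dispatch_order(task_names: list[str]) -> list[str]:
    """Return tasks in the order a highest-priority-first sender would hand them out."""
    heap: list[tuple[int, int, str]] = []
    for seq, name in enumerate(task_names):
        # Negate the priority because heapq is a min-heap; seq keeps FIFO order within a level.
        heapq.heappush(heap, (-priority(name), seq, name))
    return [heapq.heappop(heap)[2] for _ in range(len(heap))]


queue = ["1gaxA04_348_1", "1gaxA04_348_0", "3tekA00_320_3", "1gaxA04_349_0"]
print(dispatch_order(queue))
# ['1gaxA04_348_0', '1gaxA04_349_0', '1gaxA04_348_1', '3tekA00_320_3']
```

In this model the _1 to _4 tasks are only handed out once no _0 task is left waiting.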

> Current reading is 242,563 unsent tasks. We will soon confirm or discard this assumption.

The current reading is 222,460, a drop of 20,103 (8.28%) in 12h 20m = 27.17/minute. If this rate is constant, the present supply will last for 5 days 16 hours 28 minutes and 50.8 seconds. :)

ID: 54612
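The projection above is a straight-line extrapolation: divide the remaining unsent tasks by the rate observed between two readings. A minimal sketch using Python's `datetime.timedelta`; `project_depletion` is a hypothetical helper, and like the post it assumes the rate stays constant.

```python
from datetime import timedelta


def project_depletion(before: int, after: int, minutes: float) -> tuple[float, timedelta]:
    """Linear extrapolation: (tasks per minute, time until the unsent queue is empty)."""
    rate = (before - after) / minutes             # tasks sent per minute
    return rate, timedelta(minutes=after / rate)  # remaining tasks / rate


# 242,563 -> 222,460 unsent tasks over 12h 20m
rate, eta = project_depletion(242_563, 222_460, 12 * 60 + 20)
print(f"{rate:.2f}/minute, empty in {eta}")
```

This reproduces the post's 27.17/minute and 5 days 16 hours 28 minutes 50.8 seconds.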

> > Current reading is 242,563 unsent tasks. We will soon confirm or discard this assumption.
>
> Current reading is 222,460, a drop of 20,103 (8.28%) in 12h 20m = 27.17/minute.

The current reading is 200,361, a decrease of 42,202 (17.4%) in 24h 10m = 29.10/minute. The rate has increased slightly. At this new rate, the present supply will last 4 days 18 hours 44 minutes 6.94 seconds from now. :)

ID: 54613
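Both readings are measured from the same 242,563 baseline, so the same numbers also give the rate over the most recent interval alone (24h 10m minus 12h 20m = 11h 50m = 710 minutes), which comes out somewhat higher than the cumulative average. A worked check, assuming the quoted time spans are exact:

```latex
r_{24\,\mathrm{h}\,10\,\mathrm{m}} = \frac{242{,}563 - 200{,}361}{1450\ \mathrm{min}} = \frac{42{,}202}{1450} \approx 29.10\ \text{tasks/min}

T = \frac{200{,}361 \times 1450}{42{,}202} \approx 6884.1\ \mathrm{min} \approx 4\,\mathrm{d}\ 18\,\mathrm{h}\ 44\,\mathrm{min}\ 6.9\,\mathrm{s}

r_{\text{last } 11\,\mathrm{h}\,50\,\mathrm{m}} = \frac{222{,}460 - 200{,}361}{710\ \mathrm{min}} = \frac{22{,}099}{710} \approx 31.13\ \text{tasks/min}
```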

| |

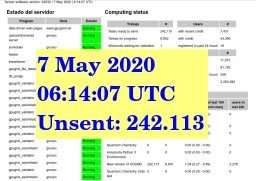

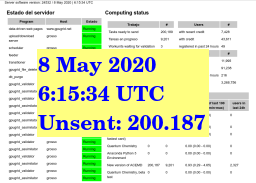

The current reading is 200,361 that is 42,202 (17.4%) decrease in 24h 10m = 29.10 / minute -1) Mr. Zoltan: Thank you very much for making this funny. I took screenshots that are confirming your data.   Reduction in unsent tasks: 41.926 in this about 24H lapse. -2) Mr. Toni/GPUGrid's Team: Thank you very much for your continuous support. This high decreasing rate has been greatly facilitated by exceptionally good communications since yesterday's morning. Whatever you did in the transition from May 6th to 7th, it supposed a drastic change between extremely sluggish to very agile communications. Please, take note of the recipy. At he moment of writing this, scheduler is stopped. I guess that this high rate in returning results has caused a new momentary buffer disk overflow... | |

| ID: 54618 | Rating: 0 | rate:

> The current reading is 200,361, a decrease of 42,202 (17.4%) in 24h 10m = 29.10/minute.

Note that the return rate was this high all along; that is why there are frequent disk buffer overflows. Since new tasks are created from the returned ones, the number of unsent workunits remains constant, so the return rate stays hidden from us until the batches reach their final sequence number.

ID: 54621
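The effect described in this post can be reproduced with a toy model. It is a deliberately simplified sketch, not how the GPUGrid server actually works: each chain has a fixed number of sequential steps, a fixed number of tasks is sent and returned per tick (sending and returning are collapsed into the same tick), and returning any step except the last immediately creates the next step as a new unsent task. `simulate_unsent` and its parameters are hypothetical.

```python
from collections import deque


def simulate_unsent(chains: int, steps: int, per_tick: int, ticks: int) -> list[int]:
    """Toy model: return the number of unsent tasks after each tick."""
    unsent = deque([1] * chains)  # each entry is the step number of one unsent task
    history = []
    for _ in range(ticks):
        # Hosts pull up to per_tick tasks and, in this model, return them within the tick.
        returned = [unsent.popleft() for _ in range(min(per_tick, len(unsent)))]
        for step in returned:
            if step < steps:
                unsent.append(step + 1)  # the successor replaces the returned task
        history.append(len(unsent))
    return history


print(simulate_unsent(chains=10, steps=3, per_tick=5, ticks=7))
# [10, 10, 10, 10, 5, 0, 0]
```

Although 5 tasks come back every tick, the unsent count stays flat at 10 until the chains reach their final step; only then does the return rate show up as a falling unsent count.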

> Note that the return rate was this high all along; that is why there are frequent disk buffer overflows. Since new tasks are created from the returned ones, the number of unsent workunits remains constant, so the return rate stays hidden from us until the batches reach their final sequence number.

Yes, you're right, and I'm aware of it. The recent frequent scheduler stops are most probably related to this Optimized bandwidth announcement and the significantly increased number of crunchers... This combination has likely caused a bottleneck in the project's resources.

ID: 54622

It looks like the server status page needs something added, namely free disk space, at least for the disk areas that receive uploads.

ID: 54624

One more conclusion that could be drawn:

ID: 54631

What we don't know (at least, I certainly don't know, and I've never seen it described here) is exactly what processing path that data takes after our raw results are returned to the server.

ID: 54632

The project's scheduler is up again, with 174,874 tasks left ready to send!

ID: 54640

All my stacked WUs have been reported as finished, and all the new WUs I've received (except one 8-10) are of the 9-10 kind.

ID: 54641

I have a couple of ghost tasks, so I suppose that many other ghost tasks are waiting for their deadline to pass, after which some 8-10 tasks will be resent to other hosts.

ID: 54642

What is the ghost recovery procedure on this project?

ID: 54650

Ghost tasks are on GPUGRID's server side.

ID: 54651

> What is the ghost recovery procedure on this project?

I've tried the way it works for SETI, but it didn't work here. Luckily GPUGrid has a much shorter deadline than SETI, so it's not a big problem.

ID: 54652

> the present supply (171,016) will last for about 4 days from now.

The rate of decline seems to have stabilized at around 30/minute, so the supply will last for about 3 days from now (exactly 2 days 22 hours 39 minutes and 42.9 seconds).

ID: 54653
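At a constant rate, converting between a remaining count and a time span is a single division or multiplication. Checking the quoted 4-day estimate at 30 tasks/minute, and converting the exact figure back into a task count (the post does not state the reading behind that figure, so the second line is only the implied value at exactly 30/minute):

```latex
\frac{171{,}016}{30\ \text{tasks/min}} \approx 5700.5\ \mathrm{min} \approx 3.96\ \text{days}

2\,\mathrm{d}\ 22\,\mathrm{h}\ 39\,\mathrm{min}\ 42.9\,\mathrm{s} \approx 4239.7\ \mathrm{min}
\;\Rightarrow\; 4239.7 \times 30 \approx 127{,}191\ \text{tasks}
```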

> Ghost tasks are on GPUGRID's server side.

[Clarification] We call a "ghost task" one that the server counts as sent to a host but that, for whatever reason, was never actually received. It doesn't interfere on the host side: BOINC Manager doesn't see these ghost tasks, and it keeps asking for new tasks until the task buffer is full or the maximum of "2 tasks per GPU" is reached. On the server side, ghost tasks are wrongly counted as "In progress" tasks, while in reality they are not.

ID: 54654
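A minimal sketch of the bookkeeping described above, under stated assumptions: the server only knows that a task was sent and when its deadline expires, while whether the host actually received it (the `received_by_host` flag) is known only on the host side. Only unreported tasks are modelled, and the 120-hour deadline in the example is a placeholder, not GPUGrid's actual value. `Task`, `server_view` and `host_view` are hypothetical names.

```python
from dataclasses import dataclass


@dataclass
class Task:
    name: str
    sent_at_h: float        # hours since an arbitrary reference time
    deadline_h: float       # report deadline, hours after sending (placeholder value)
    received_by_host: bool  # known to the host only, never to the server


def server_view(tasks: list[Task], now_h: float) -> tuple[list[str], list[str]]:
    """Split unreported tasks into (in progress, timed out and due for resend)."""
    in_progress, resend = [], []
    for t in tasks:
        if now_h < t.sent_at_h + t.deadline_h:
            in_progress.append(t.name)  # ghosts are counted here too
        else:
            resend.append(t.name)       # deadline passed without a result
    return in_progress, resend


def host_view(tasks: list[Task]) -> list[str]:
    """The client only knows about tasks it actually received."""
    return [t.name for t in tasks if t.received_by_host]


tasks = [Task("real", 0, 120, True), Task("ghost", 0, 120, False)]
print(server_view(tasks, now_h=10))       # (['real', 'ghost'], [])
print(host_view(tasks))                   # ['real']
print(server_view([tasks[1]], now_h=130)) # ([], ['ghost'])
```

Until its deadline passes, the ghost inflates the server's in-progress count while the client requests work as if it held one task fewer; afterwards it is handled like any other missed deadline, which matches the expectation in the thread that some 8-10 tasks will be resent to other hosts.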

> The rate of decline seems to have stabilized at around 30/minute, so the supply will last for about 3 days from now.

What comes next is a mystery...

ID: 54656

> > What is the ghost recovery procedure on this project?
>
> I've tried the way it works for SETI, but it didn't work here.

Thanks Zoltan, I tried my SETI ghost recovery protocol and it didn't work either. I managed to pick up 10 ghosts and wanted to clear them. Good thing the deadline here is so short compared to SETI.

ID: 54660

These ghost tasks seem to occur after the server runs out of disk space. Are they somehow related to that? 🤔

`(unknown error) - exit code 195 (0xc3)</message>`

When you track these WUs, this error apparently signals that the WU is bad. After getting 6 of them, I'm curious what the bug might be. Bad code?

ID: 54662

Yes, I saw a bunch of bad WUs. Checking the resends, they are all erroring out on different hosts as well.

ID: 54663


Looks like a lot of tasks lost their file references on the storage. Can't pull the correct data for the tasks. <core_client_version>7.17.0</core_client_version> <