Message boards : News : Update acemd3 app

| Author | Message |

|---|---|

|

I deployed the new app, which now requires cuda 11.2 and hopefully support all the latest cards. Touching the cuda versions is always a nightmare in boinc scheduler so expect problems. | |

| ID: 57041 | Rating: 0 | rate:

| |

|

YES! Thank you so much! | |

| ID: 57042 | Rating: 0 | rate:

| |

|

I noticed the plan class is listed as "cuda1121" on the Applications page. is this a typo? will it cause any issues with getting work or running the application? | |

| ID: 57043 | Rating: 0 | rate:

| |

|

Great news! So far it's only Linux, right? | |

| ID: 57045 | Rating: 0 | rate:

| |

|

So far. | |

| ID: 57046 | Rating: 0 | rate:

| |

|

Just so people are aware, CUDA 11.2 (I assume the "1121" means CUDA 11.2.1 "update 1") means you need at least driver 460.32 on Linux. | |

| ID: 57047 | Rating: 0 | rate:

| |

|

Can someone confirm that the Linux cuda100 app is still sent out (and likely fail)? | |

| ID: 57048 | Rating: 0 | rate:

| |

Can someone confirm that the Linux cuda100 app is still sent out (and likely fail)? is this the reason that the Linux tasks have been failing recently? they need this new app? did you remove the Linux cuda100 app? ____________  | |

| ID: 57049 | Rating: 0 | rate:

| |

|

I just got a couple tasks on my RTX 3080 Ti host, it got the new app. it failed in 2 seconds. it looks like you're missing a file, or you forgot to statically link boost into the app: 16:50:34 (15968): wrapper (7.7.26016): starting https://www.gpugrid.net/result.php?resultid=32631384 but it's promising that I didnt get the "invalid architecture" error ____________  | |

| ID: 57050 | Rating: 0 | rate:

| |

|

Looks like Ubuntu 20.04.2 LTS has libboost-all-dev 1.71 installed. | |

| ID: 57051 | Rating: 0 | rate:

| |

|

i think these are sandboxed in the wrapper. so packages on the system in theory shouldnt matter right? | |

| ID: 57052 | Rating: 0 | rate:

| |

|

Just failed a couple more acemd3 tasks. What a waste . . . . as hard as they are to snag. | |

| ID: 57053 | Rating: 0 | rate:

| |

Just failed a couple more acemd3 tasks. What a waste . . . . as hard as they are to snag. did you get the new app? do you have that newer version of boost installed? ____________  | |

| ID: 57054 | Rating: 0 | rate:

| |

|

No I just have the normal CUDA 10.0 app installed. I am just investigating what would be needed to install the missing libraries. | |

| ID: 57055 | Rating: 0 | rate:

| |

|

Great to see this progress as prices of GPUs are beginning to fall and Ampere GPUs are currently dominating the market availability. I hope China's Ban on mining becomes a budgetary boon for crunchers and gamers worldwide. | |

| ID: 57056 | Rating: 0 | rate:

| |

No I just have the normal CUDA 10.0 app installed. I am just investigating what would be needed to install the missing libraries. looks like you're actually getting the new app now: http://www.gpugrid.net/result.php?resultid=32631755 ____________  | |

| ID: 57057 | Rating: 0 | rate:

| |

No I just have the normal CUDA 10.0 app installed. I am just investigating what would be needed to install the missing libraries. Huh, hadn't noticed. So maybe the New version of ACEMD v2.12 (cuda1121) is going to be the default app even for the older cards. | |

| ID: 57058 | Rating: 0 | rate:

| |

|

I think you’ll only get 11.2 app if you have a driver that’s compatible. Greater than 460.32. Just my guess. I’ll need to see if systems with and older driver will still get the cuda 100 app | |

| ID: 57059 | Rating: 0 | rate:

| |

|

I'm still trying to figure out the best way to distribute the app. The current way has hard-coded minimum-maximum driver versions for each CUDA version and it's too cumbersome to maintain. | |

| ID: 57060 | Rating: 0 | rate:

| |

I'm still trying to figure out the best way to distribute the app. The current way has hard-coded minimum-maximum driver versions for each CUDA version and it's too cumbersome to maintain. Here is an idea: How about distribution by card type? That would exclude the really slow cards, like 740M. BTW: What driver version do we need for this? | |

| ID: 57061 | Rating: 0 | rate:

| |

|

Toni, I think the first thing that needs to be fixed is the problem with boost 1.74 library not being included in the app distribution. the app is failing right away because it's not there. you either need to distribute the .so file or statically link it into the acemd3 app so it's not needed separately. | |

| ID: 57062 | Rating: 0 | rate:

| |

|

after manually installing the required boost to get past that error, I now get this error on my 3080 Ti system: 09:55:10 (4806): wrapper (7.7.26016): starting Task: https://www.gpugrid.net/result.php?resultid=32632410 I tried purging and reinstalling the nvidia drivers, but no change. it looks like this same error popped up when you first released acemd3 2 years ago: http://www.gpugrid.net/forum_thread.php?id=4935#51970 biodoc wrote: Multiple failures of this task on both windows and linux you then updated the app which fixed the problem at that time, but you didnt post exactly what was changed: http://www.gpugrid.net/forum_thread.php?id=4935&nowrap=true#52022 Toni wrote: It was a cryptic bug in the order loading shared libraries, or something like that. Otherwise unexplainably system-dependent. so whatever kind of change you made between v2.02 and v2.03 seems to be what needs fixing again. ____________  | |

| ID: 57063 | Rating: 0 | rate:

| |

I deployed the new app, which now requires cuda 11.2 and hopefully support all the latest cards. Touching the cuda versions is always a nightmare in boinc scheduler so expect problems. Thank you so much. Those efforts are for noble reasons. Regarding persistent errors: I also manually installed boost as a try at one of my Ubuntu 20.04 hosts, by means of the following commands: sudo add-apt-repository ppa:mhier/libboost-latest But a new task downloaded after that still failed: e3s644_e1s419p0f770-ADRIA_New_KIXcMyb_HIP_AdaptiveBandit-1-2-RND9285_4 Then, I've reset GPUGrid project, and it seems that it did the trick. A new task is currently running on this host, instead of failing after a few seconds past: e4s126_e3s248p0f238-ADRIA_New_KIXcMyb_HIP_AdaptiveBandit-0-2-RND6347_7 49 minutes, 1,919% progress by now. | |

| ID: 57064 | Rating: 0 | rate:

| |

I deployed the new app, which now requires cuda 11.2 and hopefully support all the latest cards. Touching the cuda versions is always a nightmare in boinc scheduler so expect problems. Thanks, I'll try a project reset. though I had already done a project reset after the new app was announced. I guess it can't hurt. ____________  | |

| ID: 57065 | Rating: 0 | rate:

| |

|

nope, even after the project reset, still the same error process exited with code 195 (0xc3, -61)</message> https://www.gpugrid.net/result.php?resultid=32632487 ____________  | |

| ID: 57066 | Rating: 0 | rate:

| |

sudo add-apt-repository ppa:mhier/libboost-latest small correction here. it's "libboost1.74", not just "boost1.74" ____________  | |

| ID: 57067 | Rating: 0 | rate:

| |

|

Maybe that your problem is an Ampere-specific one (?). | |

| ID: 57068 | Rating: 0 | rate:

| |

Maybe that your problem is an Ampere-specific one (?). I had this thought. I put in my old 2080ti to the problem-host, and will see if it starts processing, or if it's really a problem with the host-specific configuration. this isn't the first time this has happened though. and Toni previously fixed it with an app update. so it looks like that will be needed again even if it's Ampere-specifc. I think the difference in install commands comes down to the use of apt vs. apt-get. although apt-get still works, transitioning to just apt will be better in the long term. Difference between apt and apt-get ____________  | |

| ID: 57069 | Rating: 0 | rate:

| |

Maybe that your problem is an Ampere-specific one (?). well, it seems it's not Ampere specific. it failed in the same way on my 2080ti here: https://www.gpugrid.net/result.php?resultid=32632521 still the CUDA compiler error unfortunately I can't easily move the 3080ti to another system since it's a watercooled model that requires a custom water loop. ____________  | |

| ID: 57070 | Rating: 0 | rate:

| |

|

I just used the ppa method on my other two hosts. But I did not reboot. | |

| ID: 57071 | Rating: 0 | rate:

| |

I think I finally solved the issue! it's running on the 3080ti finally! first I removed the manual installation of boost. and installed the PPA version. I don't think this was the issue though. while poking around in my OS installs, I discovered that I had the CUDA 11.1 toolkit installed (likely from my previous attempts at building some apps to run on Ampere). I removed this old toolkit, cleaned up any files, rebooted, reset the project and waited for a task to show up. so now it's running finally. now to see how long it'll take a 3080ti ;). it has over 10,000 CUDA cores so I'm hoping for a fast time. 2080ti runs about 12hrs, so it'll be interesting to see how fast I can knock it out. using about 310 watts right now. but with the caveat that ever since I've had this card, I've noticed some weird power limiting behavior. I'm waiting on an RMA now for a new card, and I'm hoping it can really stretch it's legs, plan to still power limit it to about 320W though. ____________  | |

| ID: 57072 | Rating: 0 | rate:

| |

|

Congratulations! | |

| ID: 57073 | Rating: 0 | rate:

| |

|

Well, it will be your problem, not mine. Even having decent hard- and software I am pretty astonished how folks like cosmology and the likes seemingly do not understand how VM and the like works. I am pretty pissed as an amateur, though... | |

| ID: 57074 | Rating: 0 | rate:

| |

|

Just seen my first failures with libboost errors on Linux Mint 20.1, driver 460.80, GTX 1660 super. | |

| ID: 57075 | Rating: 0 | rate:

| |

Well, it will be your problem, not mine. Even having decent hard- and software I am pretty astonished how folks like cosmology and the likes seemingly do not understand how VM and the like works. I am pretty pissed as an amateur, though... what problem are you having specifically? this project has nothing to do with cosmology, and this project does not use VMs. ____________  | |

| ID: 57076 | Rating: 0 | rate:

| |

I think I finally solved the issue! it's running on the 3080ti finally! This is the moment of truth we're all waiting for. My bet is 9h 15m. | |

| ID: 57077 | Rating: 0 | rate:

| |

I think I finally solved the issue! it's running on the 3080ti finally! I’m not sure it’ll be so simple. When I checked earlier, it was tracking a 12.5hr completion time. But the 2080ti was tracking a 14.5hr completion time. Either the new run of tasks are longer, or the CUDA 11.2 app is slower? We’ll have to see. ____________  | |

| ID: 57078 | Rating: 0 | rate:

| |

|

I'm curious how you have a real estimated time remaining calculated for a brand new application. | |

| ID: 57079 | Rating: 0 | rate:

| |

|

I didn’t use the time remaining estimate from BOINC. I estimated it myself based on % complete and elapsed time, assuming a linear completion rate. | |

| ID: 57080 | Rating: 0 | rate:

| |

When I checked earlier, it was tracking a 12.5hr completion time. But the 2080ti was tracking a 14.5hr completion time.If the new tasks are longer, the awarded credit should be higher. The present ADRIA_New_KIXcMyb_HIP_AdaptiveBandit workunits "worth" 675.000 credits, while the previous ADRIA_D3RBandit_batch_nmax5000 "worth" 523.125 credits, so the present ones are longer. My estimation was 12h/1.3=9h15m (based on my optimistic 30% performance improvement expectation). Nevertheless we can use the completion times to estimate the actual performance improvement (3080Ti vs 2080Ti): The 3080 Ti completed the task in 44368s (12h 19m 28s) the 2080Ti completed the task in 52642s (14h 37m 22s), so the 3080Ti is "only" 18.65% faster. So the number of the usable CUDA cores in the 30xx series are the half of the advertised number (just as I expected), as 10240/2=5120, 5120/4352=1.1765 (so the 3080Ti has 17.65% more CUDA cores than the 2080Ti has), the CUDA cores of the 3080Ti are 1.4% faster than of the 2080Ti. | |

| ID: 57081 | Rating: 0 | rate:

| |

|

The PPA-reset trick worked - I have a new task running now. Another satisfied customer. | |

| ID: 57082 | Rating: 0 | rate:

| |

|

Some of the tasks were even longer. I have two more 2080ti reports that were 55,583 and 56,560s respectively. On Identical GPUs running the same clocks. There seems to be Some variability. If you use the slower one it puts it closer to 30%. This exposes the flaw of using a single sample to form a conclusion. More data is required. | |

| ID: 57083 | Rating: 0 | rate:

| |

|

I looked back into the 'job_log_www.gpugrid.net.txt' in the BOINC data folder to get my comparison times. I haven't run many AdaptiveBandits yet, but I think the 'D3RBandit_batch*' time was a robust average over the many sub-types. | |

| ID: 57084 | Rating: 0 | rate:

| |

|

After cross referencing runtimes for various Windows hosts, I think the new app is just slower. Windows hosts haven’t experienced an app change (yet) and haven’t shown any sudden or recent change in run time with the KIX AdaptiveBandit jobs. This suggests that that tasks haven’t really changed, leading the only other cause of the longer run time to be a slower 11.2 app. | |

| ID: 57085 | Rating: 0 | rate:

| |

|

GTX 1080 | |

| ID: 57086 | Rating: 0 | rate:

| |

|

Where did you get the ns/day numbers from? | |

| ID: 57087 | Rating: 0 | rate:

| |

|

Go to slot folder and cat progress.log | |

| ID: 57088 | Rating: 0 | rate:

| |

|

It’s not real. I’ve manipulated the coproc_info file to report what I want. | |

| ID: 57089 | Rating: 0 | rate:

| |

RTX 3070The WU you linked had one wingman run it as cuda 10.1 and the other as 11.21 with 155,037 seconds versus 68,000. Isn't that faster? https://www.gpugrid.net/workunit.php?wuid=27075862 What does ns mean? | |

| ID: 57090 | Rating: 0 | rate:

| |

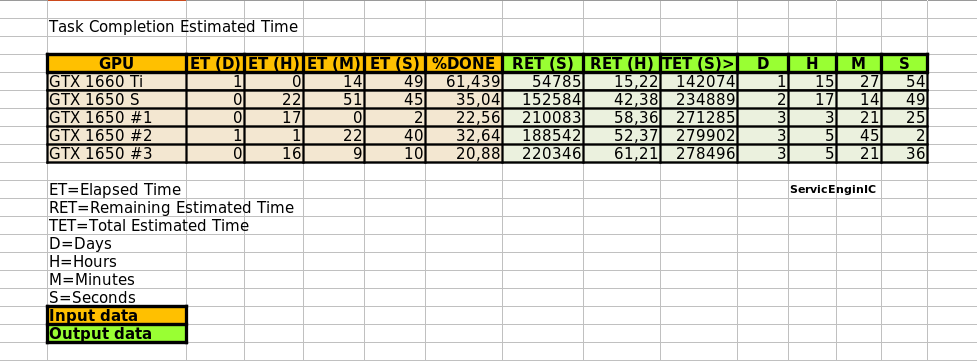

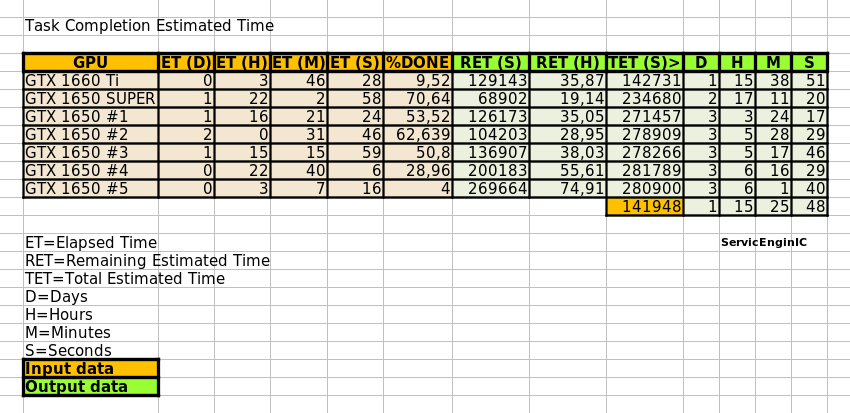

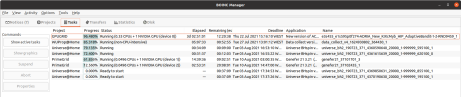

I didn’t use the time remaining estimate from BOINC. I estimated it myself based on % complete and elapsed time, assuming a linear completion rate. I usually employ the same method, since Progress % shown by BOINC Manager is quite linear. At my low-end GPUs, I'm still waiting for the first task to complete :-) Evaluating the small sample of tasks that I've received, tasks for this new version are taking longer to complete than previous ones (lets say "by the moment") Estimated completion times for the 5 GPUs that I'm monitoring are as follows:  The last three GPUs are Turing GTX 1650 ones, but different graphics cards models and clock frequencies. An editable version of the spreadsheet used can be downloaded from this link | |

| ID: 57091 | Rating: 0 | rate:

| |

RTX 3070The WU you linked had one wingman run it as cuda 10.1 and the other as 11.21 with 155,037 seconds versus 68,000. Isn't that faster? nanosecond https://en.wikipedia.org/wiki/Nanosecond#:~:text=A%20nanosecond%20(ns)%20is%20an,or%201%E2%81%841000%20microsecond. Yes there big gap to runtime on other host but it was also using NVIDIA GeForce GTX 1070 | |

| ID: 57092 | Rating: 0 | rate:

| |

It’s not real. I’ve manipulated the coproc_info file to report what I want. Ok why i ask was that device name is unknown for my 3080Ti and had some hope that driver you used would fix that. So i could go coproc file and edit instead. | |

| ID: 57093 | Rating: 0 | rate:

| |

It’s not real. I’ve manipulated the coproc_info file to report what I want. What driver are you using? The 3080ti won’t be detected until driver 460.84. Anything older will not know what GPU that is. ____________  | |

| ID: 57094 | Rating: 0 | rate:

| |

|

Greger, I just can't get my head around what it means. So out of the 8.64E13 ns in a day you only calculate for 159 ns??? I'm not familiar with that figure of merit. | |

| ID: 57095 | Rating: 0 | rate:

| |

|

The nanoseconds will be the biochemical reaction time that we're modelling - very, very, slowly - in a digital simulation. | |

| ID: 57096 | Rating: 0 | rate:

| |

Greger, I just can't get my head around what it means. So out of the 8.64E13 ns in a day you only calculate for 159 ns??? I'm not familiar with that figure of merit. Aren’t you big into folding? ns/day is a very common metric for measuring computation speed in molecular modeling. ____________  | |

| ID: 57097 | Rating: 0 | rate:

| |

It’s not real. I’ve manipulated the coproc_info file to report what I want. NVIDIA-SMI 465.27 Driver Version: 465.27 CUDA Version: 11.3 Could not use 460 for 3080Ti so i had to move latest ubuntu provided and it would this version. boinc-client detect name as Coprocessors NVIDIA NVIDIA Graphics Device (4095MB) driver: 465.27 I edit coproc_info.xml but it does not change when i update to project and if i restart boinc-client it will wipe even if ai change driverversin inside file. Maybe i could lock file to root only to prevent boinc to write permission but i better not. | |

| ID: 57098 | Rating: 0 | rate:

| |

|

You need driver 460.84 for 3080ti. You can use that one. | |

| ID: 57099 | Rating: 0 | rate:

| |

Greger, I just can't get my head around what it means. So out of the 8.64E13 ns in a day you only calculate for 159 ns??? I'm not familiar with that figure of merit. As mention before here it is possible time the device could genereate a folding event for that device but you need take in count the complexity of folding time in and amount of atoms have big affect on it and possible other parameters in modelling event. Think of see it as a box and you have x y z and it build up protein with atoms then make fold of it. In total result it would be very very short event. There was a free tool before and possible available still today that you could use to open data that done directly with after it was done. users have done this at folding@home and posted in forums. Not sure if that is free for acemd | |

| ID: 57100 | Rating: 0 | rate:

| |

You need driver 460.84 for 3080ti. You can use that one. ok thanks | |

| ID: 57101 | Rating: 0 | rate:

| |

|

Yea, snagged a WU and it's running. My guesstimate is 19:44:13 on my 3080 dialed down to 230 Watts. Record breaking long heat wave here and summer peak Time-of-Use electric rates (8.5x higher) have started. Summer is not BOINC season in The Great Basin. | |

| ID: 57102 | Rating: 0 | rate:

| |

Linux Mint repository offers 465.31 and 460.84. Is it actually worth reverting to 460.84??? I wouldn't do it until after this WU completes anyway. probably wont matter if the driver you have is working. i don't expect any performance difference between the two. I was just saying that I would use a more recent non-beta driver if i was updating, unless you need some feature in 465 branch specifically. ____________  | |

| ID: 57103 | Rating: 0 | rate:

| |

|

second 3080ti task completed in 11hrs | |

| ID: 57104 | Rating: 0 | rate:

| |

|

peak 28,9°C here today so suspend during daytime after 2 task done. | |

| ID: 57105 | Rating: 0 | rate:

| |

|

Just take in mind that any change in Nvidia driver version while a GPUgrid task is in progress, will cause it to fail when computing is restarted. | |

| ID: 57106 | Rating: 0 | rate:

| |

peak 28,9°C here today so suspend during daytime after 2 task done. The VRAM reported wrong is not because of the driver. It’s a problem with BOINC. BOINC uses a detection technique that is only 32-bit (4GB). This can only be fixed by fixing the code in BOINC. ____________  | |

| ID: 57107 | Rating: 0 | rate:

| |

|

I went back to my host and driver crashed. smi unable to open and task failed on another project. Restarted it and back on track. Few minutes later it fetch new task from GPUGrid. Let's hope it does not crash again. | |

| ID: 57108 | Rating: 0 | rate:

| |

|

GPU detection is handled by BOINC, not any individual projects. | |

| ID: 57109 | Rating: 0 | rate:

| |

|

Finally, my first result of a new version 2.12 task came out in my fastest card: | |

| ID: 57110 | Rating: 0 | rate:

| |

|

Compare old and new app on 2070S | |

| ID: 57111 | Rating: 0 | rate:

| |

My guesstimate is 19:44:13 on my 3080 dialed down to 230 Watts. 16:06:54 https://www.gpugrid.net/workunit.php?wuid=27077289 | |

| ID: 57112 | Rating: 0 | rate:

| |

|

At this moment, every of my 7 currently working GPUs have any new version 2.12 task in process. acemd3: error while loading shared libraries: libboost_filesystem.so.1.74.0: cannot open shared object file: No such file or directory Chance to remember that there is a remedy for this problem, commented at message #57064 in this same thread. One last update for estimated times to completion on my GPUs:  An editable version of the spreadsheet used can be downloaded from this link Changes since previous version: - Lines for two more GPUs are added. - A new cell is added for seconds to D:H:M:S conversion | |

| ID: 57113 | Rating: 0 | rate:

| |

So the number of the usable CUDA cores in the 30xx series are half of the advertised number (just as I expected), as 10240/2=5120, 5120/4352=1.1765 (so the 3080Ti has 17.65% more CUDA cores than the 2080Ti has), the CUDA cores of the 3080Ti are 1.4% faster than of the 2080Ti. Does using half of CUDA cores have implications for BOINCing? GG+OPNG at <cpu_usage>1.0</cpu_usage> & <gpu_usage>0.5</gpu_usage> works fine. GG+DaggerHashimoto crashes GG instantly. I hope to try 2xGG today. | |

| ID: 57114 | Rating: 0 | rate:

| |

Does using half of CUDA cores have implications for BOINCing?You can't utilize the "extra" CUDA cores by running a second task (regardless of the project). The 30xx series improved gaming experience much more, than the crunching performance. | |

| ID: 57115 | Rating: 0 | rate:

| |

So the number of the usable CUDA cores in the 30xx series are half of the advertised number (just as I expected), as 10240/2=5120, 5120/4352=1.1765 (so the 3080Ti has 17.65% more CUDA cores than the 2080Ti has), the CUDA cores of the 3080Ti are 1.4% faster than of the 2080Ti. I think you misunderstand what's happening. running 2x GPUGRID tasks concurrently wont make it "use more". it'll just slow both down, probably slower than half speed due to the constant resource fighting. if GPUGRID isn't seeing the effective 2x benefit of Turing vs Ampere, that tells me one of two things (or maybe some combination of both): 1. that app isn't as FP32 heavy as some have implied, and maybe has a decent amount of INT32 instructions. the INT32 setup of Ampere is the same as Turing 2. there is some additional optimization that needs to be applied to the ACEMD3 app to better take advantage of the extra FP32 cores on Ampere. ____________  | |

| ID: 57116 | Rating: 0 | rate:

| |

if GPUGRID isn't seeing the effective 2x benefit of Turing vs Ampere, that tells me one of two things (or maybe some combination of both): The way Ampere works is that half the cores are FP32, and the other half are either FP32 or INT32 depending on need. On Turing (and older), the INT32 half was always INT32. So you're probably right - either GPUGRID has some INT32 load that is using the cores instead, or some kind of application change is required to get it to use the other half. | |

| ID: 57118 | Rating: 0 | rate:

| |

|

I'm not convinced that the extra cores "aren't being used" at all, ie, the cores are sitting idle 100% of the time as a direct result of the architecture or something like that. I think both the application and the hardware are fully aware of the available cores/SMs. just that the application is coded in such a way that it can't take advantage of the extra resources, either in optimization or in the number of INT instructions required. | |

| ID: 57119 | Rating: 0 | rate:

| |

The 30xx series improved gaming experience much more, than the crunching performance. I'm thoroughly unimpressed by my 3080. Its performance does not scale with price making it much more expensive for doing calculations. I'll probably test it for a few more days and then sell it. I like to use some metric that's proportional to calculations and optimize calcs/Watt. In the past my experience has been reducing max power improves performance. But since Nvidia eliminated the nvidia-settings options -a [gpu:0]/GPUGraphicsClockOffset & -a [gpu:0]/GPUMemoryTransferRateOffset that I used I haven't found a good way to do it using Linux. nvidia-settings -q all It seems Nvidia chooses a performance level but I can't see how to force it to a desired level: sudo DISPLAY=:0 XAUTHORITY=/var/run/lightdm/root/:0 nvidia-settings -q '[gpu:0]/GPUPerfModes' 3080: 0, 1, 2, 3 & 4 Attribute 'GPUPerfModes' (Rig-05:0[gpu:0]): perf=0, nvclock=210, nvclockmin=210, nvclockmax=420, nvclockeditable=1, memclock=405, memclockmin=405, memclockmax=405, memclockeditable=1, memTransferRate=810, memTransferRatemin=810, memTransferRatemax=810, memTransferRateeditable=1 ; perf=1, nvclock=210, nvclockmin=210, nvclockmax=2100, nvclockeditable=1, memclock=810, memclockmin=810, memclockmax=810, memclockeditable=1, memTransferRate=1620, memTransferRatemin=1620, memTransferRatemax=1620, memTransferRateeditable=1 ; perf=2, nvclock=240, nvclockmin=240, nvclockmax=2130, nvclockeditable=1, memclock=5001, memclockmin=5001, memclockmax=5001, memclockeditable=1, memTransferRate=10002, memTransferRatemin=10002, memTransferRatemax=10002, memTransferRateeditable=1 ; perf=3, nvclock=240, nvclockmin=240, nvclockmax=2130, nvclockeditable=1, memclock=9251, memclockmin=9251, memclockmax=9251, memclockeditable=1, memTransferRate=18502, memTransferRatemin=18502, memTransferRatemax=18502, memTransferRateeditable=1 ; perf=4, nvclock=240, nvclockmin=240, nvclockmax=2130, nvclockeditable=1, memclock=9501, memclockmin=9501, memclockmax=9501, memclockeditable=1, memTransferRate=19002, memTransferRatemin=19002, memTransferRatemax=19002, memTransferRateeditable=1 Nvidia has said, "The -a and -g arguments are now deprecated in favor of -q and -i, respectively. However, the old arguments still work for this release." Sounds like they're planning to reduce or eliminate customers ability to control the products they buy. Nvidia also eliminated GPULogoBrightness so the baby-blinkie lights never turn off. | |

| ID: 57120 | Rating: 0 | rate:

| |

running 2x GPUGRID tasks concurrently wont make it "use more". it'll just slow both down, probably slower than half speed due to the constant resource fighting. At less than 5% complete with two WUs running simultaneously and having started within minutes of each other: WU1: 4840 sec at 4.7% implies 102978 sec total WU2: 5409 sec at 4.6% implies 117587 sec total From yesterday's singleton: 2 x 58014 sec = 116028 sec total if independent. | |

| ID: 57121 | Rating: 0 | rate:

| |

running 2x GPUGRID tasks concurrently wont make it "use more". it'll just slow both down, probably slower than half speed due to the constant resource fighting. my point exactly. showing roughly half speed, with no real benefit to running multiples. pushing your completion time to 32hours will only reduce your credit reward since you'll be bumped out of the +50% bonus for returning in 24hrs. ____________  | |

| ID: 57122 | Rating: 0 | rate:

| |

|

Aurum wrote: But since Nvidia eliminated the nvidia-settings options -a [gpu:0]/GPUGraphicsClockOffset & -a [gpu:0]/GPUMemoryTransferRateOffset that I used I haven't found a good way to do it using Linux. these options still work. I use them for my 3080Ti. not sure what you mean? this is exactly what I use for my 3080Ti (same on my Turing hosts) /usr/bin/nvidia-smi -pm 1 /usr/bin/nvidia-smi -acp UNRESTRICTED /usr/bin/nvidia-smi -i 0 -pl 320 /usr/bin/nvidia-settings -a "[gpu:0]/GPUPowerMizerMode=1" /usr/bin/nvidia-settings -a "[gpu:0]/GPUMemoryTransferRateOffset[4]=500" -a "[gpu:0]/GPUGraphicsClockOffset[4]=100" it works as desired. Aurum wrote: It seems Nvidia chooses a performance level but I can't see how to force it to a desired level: what do you mean by "performance level"? if you mean forcing a certain P-state, no you can't do that. and these cards will not allow getting into P0 state unless you're running a 3D application. any compute application will get a best of P2 state. this has been the case ever since Maxwell. workarounds to force P0 state stopped working since Pascal, so this isnt new. if you mean the PowerMizer preferred mode (which is analogous to the power settings in Windows) you can select that easily in Linux too. I always run mine at "prefer max performance" do this with the following command: /usr/bin/nvidia-settings -a "[gpu:0]/GPUPowerMizerMode=1" I'm unsure if this really makes much difference though except increasing idle power consumption (forcing higher clocks). the GPU seems to detect loads properly and clock up even when left on the default "Auto" selection. Aurum wrote: Nvidia also eliminated GPULogoBrightness so the baby-blinkie lights never turn off. I'm not sure this was intentional, probably something that fell through the cracks that not enough people have complained about for them to dedicate resources to fixing. there's no gain for nvidia disabling this function. but again, this stopped working with Turing, so it's been this way for like 3 years, not something new. I have mostly EVGA cards, so when I want to mess with the lighting, I just throw the card on my test bench, boot into Windows, change the LED settings there, and then put it back in the crunching rig. the settings are preserved internal to the card (for my cards) so it stays and whatever I left it as. you can probably do the same ____________  | |

| ID: 57123 | Rating: 0 | rate:

| |

|

It sure does not look like running multiple GG WUs on the same GPU has any benefit. | |

| ID: 57124 | Rating: 0 | rate:

| |

How do you prove to yourself they work? They don't even exist any more. Run nvidia-settings -q all | grep -C 10 -i GPUMemoryTransferRateOffset and you will not find either of them. | |

| ID: 57125 | Rating: 0 | rate:

| |

|

but all the slightly off-topic aside. | |

| ID: 57126 | Rating: 0 | rate:

| |

I prove they work by opening Nvidia X Server Settings and observing that the clock speed offsets have been changed in accordance with the commands and don't give any error when running them. and they have. the commands work 100%. I see you're referencing some other command. I have no idea the function of the command you're trying to use. but my command works. see for yourself: https://i.imgur.com/UFHbhNt.png ____________  | |

| ID: 57127 | Rating: 0 | rate:

| |

|

I'm still getting the CUDA compiler permission denied error. I've added the PPA and installed libboost1.74 as above, and reset the project multiple times. But every downloaded task fails after 2 seconds. | |

| ID: 57139 | Rating: 0 | rate:

| |

I'm still getting the CUDA compiler permission denied error. I've added the PPA and installed libboost1.74 as above, and reset the project multiple times. But every downloaded task fails after 2 seconds. How did you install the drivers? Have you ever installed the CUDA toolkit? This was my problem. If you have a CUDA toolkit installed, remove it. I would also be safe and totally purge your nvidia drivers and re-install fresh. ____________  | |

| ID: 57140 | Rating: 0 | rate:

| |

|

Ian&Steve C wrote: It was a great first step to getting the app working for Ampere. it's been long awaited and the new app is much appreciated and now many more cards can help contribute to the project, especially with these newer long running tasks lately. we need powerful cards to handle these tasks. and last, but not least: an app for Windows would be nice :-) | |

| ID: 57142 | Rating: 0 | rate:

| |

I'm still getting the CUDA compiler permission denied error. I've added the PPA and installed libboost1.74 as above, and reset the project multiple times. But every downloaded task fails after 2 seconds. Thanks for the quick reply. I had the CUDA toolkit ver 10 installed, but after seeing your previous post about you problem, I had already removed it. I'll try purging and reinstalling my nvidia drivers, thanks. | |

| ID: 57143 | Rating: 0 | rate:

| |

|

did you use the included removal script to remove the toolkit? or did you manually delete some files? definitely try the removal script if you havent already. good luck! | |

| ID: 57145 | Rating: 0 | rate:

| |

... For those of us who are using the python app, the correct version is installed in the miniconda folder. locate libboost_filesystem /usr/lib64/libboost_filesystem-mt.so /usr/lib64/libboost_filesystem.so /usr/lib64/libboost_filesystem.so.1.76.0 /usr/lib64/cmake/boost_filesystem-1.76.0/libboost_filesystem-variant-shared.cmake /var/lib/boinc/projects/www.gpugrid.net/miniconda/lib/libboost_filesystem.so /var/lib/boinc/projects/www.gpugrid.net/miniconda/lib/libboost_filesystem.so.1.74.0 /var/lib/boinc/projects/www.gpugrid.net/miniconda/lib/cmake/boost_filesystem-1.74.0/libboost_filesystem-variant-shared.cmake /var/lib/boinc/projects/www.gpugrid.net/miniconda/pkgs/boost-cpp-1.74.0-h312852a_4/lib/libboost_filesystem.so /var/lib/boinc/projects/www.gpugrid.net/miniconda/pkgs/boost-cpp-1.74.0-h312852a_4/lib/libboost_filesystem.so.1.74.0 /var/lib/boinc/projects/www.gpugrid.net/miniconda/pkgs/boost-cpp-1.74.0-h312852a_4/lib/cmake/boost_filesystem-1.74.0/libboost_filesystem-variant-shared.cmake I definitely don't want to downgrade my system version to run a project. Perhaps gpugrid could include the libboost that they already supply for a different app. Could the miniconda folder be somehow included in the app? | |

| ID: 57147 | Rating: 0 | rate:

| |

|

Richard Haselgrove sait at Message #57177: Look at that timeout: host 528201. Oh, Mr. Kevvy, where art thou? 156 libboost errors? You can fix that... Finally, Mr. Kevvy host #537616 processed successfully today these two tasks: e4s113_e1s796p0f577-ADRIA_New_KIXcMyb_HIP_AdaptiveBandit-1-2-RND7908_0 e5s9_e3s99p0f334-ADRIA_New_KIXcMyb_HIP_AdaptiveBandit-0-2-RND8007_4 If it was due to your fix, congratulations Mr. Kevvy, you've found the right way. Or perhaps it was some fix at tasks from server side? Hard to know till there are plenty of new tasks ready to send. Currently, 7:51:20 UTC, there are 0 tasks left ready to send, 28 tasks left in progress, as Server status page shows. | |

| ID: 57192 | Rating: 0 | rate:

| |

|

I got a note back from Mr. K - he saw the errors, and was going to check his machines. I imagine he's applied Ian's workround. | |

| ID: 57193 | Rating: 0 | rate:

| |

|

On July 3rd 2021, Ian&Steve C. wrote at Message #57087: But it’s not just 3000-series being slow. All cards seem to be proportionally slower with 11.2 vs 10.0, by about 30% While organizing screenshots on one of my hosts, I happened to find comparative images for tasks of old Linux APP V2.11 (CUDA 10.0) and new APP V2.12 (CUDA 11.2) * ACEMD V2.11 tasks on 14/06/2021:  * ACEMD V2.12 task on 20/07/2021:  Pay attention to device 0, the only comparable one. - ACEMD V2.11 task: 08:10:18 = 29418 seconds past to process 15,04%. Extrapolating, this leads to 195598 seconds of total processing time (2d 06:19:58) - ACEMD V2.12 task: 3d 02:51:01 = 269461 seconds past to process 96,48%. Extrapolating, this leads to 279292 seconds of total processing time (3d 05:34:52) That is, about 42,8% of excess processing time for this particular host and device 0 (GTX 1650 GPU) | |

| ID: 57222 | Rating: 0 | rate:

| |

|

Also bear in mind that your first screenshot shows a D3RBandit task, and your second shows a AdaptiveBandit task. | |

| ID: 57223 | Rating: 0 | rate:

| |

Also bear in mind that your first screenshot shows a D3RBandit task, and your second shows a AdaptiveBandit task. Bright observer, and sharp appointment, as always. I agree that tasks aren't probably fully comparable, but they are the most comparable I found: Same host, same device, same ADRIA WUs family, same base credit amount granted: 450000... Now I'm waiting for the next move, and wondering about what will it consist of: An amended V2.12 APP?, a new V2.13 APP?, a "superstitious-proof" new V2.14 APP? ... ;-) | |

| ID: 57225 | Rating: 0 | rate:

| |

|

Is GPU grid still doing anything? I haven't gotten any work in like a month or more. And before that is was just sporadic. I used to always have work units. Now, nothing. | |

| ID: 57230 | Rating: 0 | rate:

| |

|

I am not receiving Windows tasks anymore. My configuration is | |

| ID: 57231 | Rating: 0 | rate:

| |

|

there hasnt been an appreciable amount of work available for over a month. | |

| ID: 57232 | Rating: 0 | rate:

| |

there hasnt been an appreciable amount of work available for over a month. :-( :-( :-( | |

| ID: 57233 | Rating: 0 | rate:

| |

there hasnt been an appreciable amount of work available for over a month. Currently it's like Gpugrid Project was hibernating. From time to time, when tasks in progress reach zero, some automatism (?) launches 20 more CRYPTICSCOUT_pocket_discovery WUs. But lately only for Linux systems, and with these known problems unsolved. Waiting for everything awakening soon again... | |

| ID: 57234 | Rating: 0 | rate:

| |

|

Yes, that is all I've been getting lately. I had 4 CRYPTICSCOUT_pocket_discovery tasks 4 days ago and I got 2 more today. | |

| ID: 57235 | Rating: 0 | rate:

| |

|

Another two more today. | |

| ID: 57236 | Rating: 0 | rate:

| |

|

what I don't understand is that there is no word whatsoever from the project team about an even tentative schedule :-( | |

| ID: 57237 | Rating: 0 | rate:

| |

|

still no tasks on the two hosts that have been having some issue getting work since the new app was released. I've set NNT on all other hosts in order to try to funnel any available work to these two hosts. | |

| ID: 57238 | Rating: 0 | rate:

| |

|

Well I stepped out on a limb and side emailed the Principal Investigator listed for the project and the University regarding the lack of any communications. | |

| ID: 57239 | Rating: 0 | rate:

| |

|

It worked! And Texas is still there, the last time I checked. | |

| ID: 57243 | Rating: 0 | rate:

| |

|

Moved to libboost thread. | |

| ID: 57247 | Rating: 0 | rate:

| |

|

Hello; | |

| ID: 57427 | Rating: 0 | rate:

| |

Hello; Try once more, but let it run for five minutes after the restart. You may find the the progress display jumps back up to what it was showing before. I had a total electricity blackout a week ago, while everything was running. I was seeing 0% as the machine started up, but it jumped back up to 50% or whatever was appropriate, and completed normally. | |

| ID: 57430 | Rating: 0 | rate:

| |

|

Why are so many Acemd3 tasks failing on Windows host(s)? Mine has not succeeded on any task lately. Same errors can be seen on many other hosts too, like WU 27085364. | |

| ID: 57759 | Rating: 0 | rate:

| |

|

There's another Acemd3 test ongoing today: | |

| ID: 57780 | Rating: 0 | rate:

| |

|

all of mine failed, because they sent only the CUDA101 app to my Ampere host. | |

| ID: 57784 | Rating: 0 | rate:

| |

all of mine failed, because they sent only the CUDA101 app to my Ampere host. I am wondering that this problem has not yet been solved :-( There should have been time enough in the meantime. | |

| ID: 57787 | Rating: 0 | rate:

| |

|

I am running my 1st New version of ACEMD 2.19 (cuda1121) task and it has reached a percentage of 35.833 and shows a Running status but the Elapsed time and Time remaining are not incrementing ? | |

| ID: 58012 | Rating: 0 | rate:

| |

I am running my 1st New version of ACEMD 2.19 (cuda1121) task and it has reached a percentage of 35.833 and shows a Running status but the Elapsed time and Time remaining are not incrementing ? https://www.gpugrid.net/forum_thread.php?id=5297&nowrap=true#58008 Ya need MS Visual C++ 2015 or later. | |

| ID: 58014 | Rating: 0 | rate:

| |

|

Yes I updated my MS Visual C++ to the 2015 or newer level and my old NVDIA 1060 took right off !!! | |

| ID: 58020 | Rating: 0 | rate:

| |

|

Anybody get any new acemd3 tasks and notice if the application name changed? | |

| ID: 58385 | Rating: 0 | rate:

| |

|

Yes, I've had one running on a Linux machine for the last 10 hours or so. Still says v2.19 and cuda 1121. It's approaching 40%, so much the same speed as usual for a GTX 1660 Ti | |

| ID: 58386 | Rating: 0 | rate:

| |

|

That's interesting. | |

| ID: 58388 | Rating: 0 | rate:

| |

|

The task I mentioned has now completed and reported - visible on the link I posted last night. The actual binary executable is still acemd3, dated 28 September 2021 - you can see the name in stderr.txt | |

| ID: 58390 | Rating: 0 | rate:

| |

|

That's what I see from the client_state file: <app> <name>acemd3</name> <user_friendly_name>Advanced molecular dynamics simulations for GPUs</user_friendly_name> <non_cpu_intensive>0</non_cpu_intensive> </app> | |

| ID: 58391 | Rating: 0 | rate:

| |

|

Then the next 2 WUs show up as 1.0 and: <app> <name>acemd4</name> <user_friendly_name>Advanced molecular dynamics simulations for GPUs</user_friendly_name> <non_cpu_intensive>0</non_cpu_intensive> </app> | |

| ID: 58392 | Rating: 0 | rate:

| |

That's interesting. You'd think they'd have a link to the Apps page, but no. The first 5 of those new acemd4 WUs failed within a few minutes. Stderr output <core_client_version>7.16.6</core_client_version> <![CDATA[ <message> process exited with code 195 (0xc3, -61)</message> <stderr_txt> 07:45:46 (99083): wrapper (7.7.26016): starting 07:45:46 (99083): wrapper (7.7.26016): starting 07:45:46 (99083): wrapper: running /bin/tar (xf x86_64-pc-linux-gnu__cuda1121.tar.bz2) 07:52:57 (99083): /bin/tar exited; CPU time 424.196280 07:52:57 (99083): wrapper: running bin/python (pre_run.py) File "/var/lib/boinc-client/slots/36/pre_run.py", line 1 <soft_link>../../projects/www.gpugrid.net/T1_3-RAIMIS_TEST-0-pre_run</soft_link> ^ SyntaxError: invalid syntax 07:52:58 (99083): bin/python exited; CPU time 0.137151 07:52:58 (99083): app exit status: 0x1 07:52:58 (99083): called boinc_finish(195) </stderr_txt> ]]> | |

| ID: 58393 | Rating: 0 | rate:

| |

Then the next 2 WUs show up as 1.0 and: Just had one of those run through to completion: T5_5-RAIMIS_TEST-1-3-RND1908_0 I think that's the first I've seen from RAIMIS which both: * Was explicitly designated as a GPU task (cuda 1121) * Ran right through to validation Congratulations! It was a very quick test run - under 7 minutes - but all the moving parts seem to have been assembled into the right order. The actual binary (as listed in stderr_txt) is 'acemd', not acemd4: that might be worth tidying up in the future. | |

| ID: 58394 | Rating: 0 | rate:

| |

|

Ok. | |

| ID: 58610 | Rating: 0 | rate:

| |

|

I had 2 WUs running today. They both made it up to 66.666% complete, then they stayed there for a few hours doing nothing, There was no CPU nor GPU usage. So I aborted both of them. How long was I supposed to keep them "running" like that? | |

| ID: 58611 | Rating: 0 | rate:

| |

|

You should have either exited BOINC and restarted or suspend/resume the tasks to get them moving again. | |

| ID: 58612 | Rating: 0 | rate:

| |

|

ALL my tasks finish with | |

| ID: 58630 | Rating: 0 | rate:

| |

ALL my tasks finish with you have several more specific errors. "ACEMD failed: Error invoking kernel: CUDA_ERROR_ILLEGAL_ADDRESS (700)" "ACEMD failed: Error invoking kernel: CUDA_ERROR_LAUNCH_FAILED (719)" "ACEMD failed: Particle coordinate is nan" it's possible a driver issue for the CUDA errors. use a program called DDU (Display Driver Uninstaller) to totally wipe out the drivers. then re-install fresh from the nvidia package. In my opinion, the 470-series driver are most stable for crunching. the newer drivers will get you the slightly different CUDA 11.21 app also. "particle coordinate is nan" (nan= not a number) is usually an overclocking issue, or a bad WU. ____________  | |

| ID: 58631 | Rating: 0 | rate:

| |

|

Good afternoon everyone, | |

| ID: 58875 | Rating: 0 | rate:

| |

Good afternoon everyone, I would be surprised if you receive a reply :-( | |

| ID: 58877 | Rating: 0 | rate:

| |

Good afternoon everyone, so, did I promise too much? | |

| ID: 59080 | Rating: 0 | rate:

| |

|

The preponderance of work lately has been 99:1 for the Python tasks. | |

| ID: 59081 | Rating: 0 | rate:

| |

|

I've had eight ACEMD 3 tasks since Friday - six 'ADRIA' (the long-running ones), and two 'CRYPTICSCOUT' (significantly shorter). One oddity is that the credit for 'ADRIA' tasks has been substantially reduced, but the credit for 'CRYPTICSCOUT' hasn't. Was that deliberate, I wonder? | |

| ID: 59083 | Rating: 0 | rate:

| |

|

Richard, maybe you can answer this puzzle. | |

| ID: 59088 | Rating: 0 | rate:

| |

|

The difference in behaviour will be down to the client scheduler (not a separate program - an integral part of the client code). | |

| ID: 59091 | Rating: 0 | rate:

| |

|

OK, thanks for the comment. As usual, I overthought the problem. | |

| ID: 59092 | Rating: 0 | rate:

| |

|

I am not sure if I am wording this question properly, but does acemd3 use single or double point precision? This is more just out of curiosity versus anything else. Also, is there a way to tell what a program/app is using by looking within the OS that is running it? | |

| ID: 59094 | Rating: 0 | rate:

| |

|

Yes, the running tasks can be identified by their science applications in the running processes on a host. | |

| ID: 59095 | Rating: 0 | rate:

| |

|

Is there a way to tell if it is single or double point precision by looking/inspecting the process in the task manager (Windows)? | |

| ID: 59097 | Rating: 0 | rate:

| |

|

You would have to ask Toni whether the acemd3 application uses single or double precision. | |

| ID: 59098 | Rating: 0 | rate:

| |

|

I'm going to guess that the vast majority is FP32 and INT32. I have not observed any correlation with FP64 across devices on GPUGRID tasks, so if any FP64 operations are being done, the percentage of compute time should be so small to be only marginal. | |

| ID: 59100 | Rating: 0 | rate:

| |

|

I have received 5 acemd tasks and they all failed. | |

| ID: 59448 | Rating: 0 | rate:

| |

|

I have several of those on two machines: Stderr Ausgabe <core_client_version>7.16.6</core_client_version> <![CDATA[ <message> process exited with code 195 (0xc3, -61)</message> <stderr_txt> 14:52:08 (347837): wrapper (7.7.26016): starting 14:52:29 (347837): wrapper (7.7.26016): starting 14:52:29 (347837): wrapper: running bin/acemd3 (--boinc --device 0) 14:52:30 (347837): bin/acemd3 exited; CPU time 0.003638 14:52:30 (347837): app exit status: 0x1 14:52:30 (347837): called boinc_finish(195) </stderr_txt> ]]> Nice to have ACEMD back, but I'd consider this even nicer if the ACEMD's didn't crash. ;-) ____________ - - - - - - - - - - Greetings, Jens | |

| ID: 59629 | Rating: 0 | rate:

| |

|

Looks like I received 37 work units overnight, all failed after about 10-30 seconds. | |

| ID: 59630 | Rating: 0 | rate:

| |

|

same. all the acemd3 tasks failed without any informative error message. on Linux | |

| ID: 59631 | Rating: 0 | rate:

| |

|

3skh-ADRIA_KDeepMD_100ns_2489-0-1-RND9110_5 Stderr output I have over 40 of these so far. Host OS (win10) and drivers are up-to-date. Are these ACEMD apps also failing under Linux? ____________ "Together we crunch To check out a hunch And wish all our credit Could just buy us lunch" Piasa Tribe - Illini Nation | |

| ID: 59632 | Rating: 0 | rate:

| |

Yes, per Ian&Steve C. | |

| ID: 59633 | Rating: 0 | rate:

| |

|

I just had 13 of the apps crash with computation error (195) 5 12/20/2022 2:03:07 PM CUDA: NVIDIA GPU 0: NVIDIA GeForce GTX 1660 Ti (driver version 526.86, CUDA version 12.0, compute capability 7.5, 6144MB, 6144MB available, 5530 GFLOPS peak) 6 12/20/2022 2:03:07 PM OpenCL: NVIDIA GPU 0: NVIDIA GeForce GTX 1660 Ti (driver version 526.86, device version OpenCL 3.0 CUDA, 6144MB, 6144MB available, 5530 GFLOPS peak) | |

| ID: 59634 | Rating: 0 | rate:

| |

|

Linux: Took almost 30 minutes to download but only seconds to error out GPUGRID x86_64-pc-linux-gnu__cuda1121.zip.b4692e2ec3b7e128830af5c05a9f0037 98.225 1013587.50 K 00:28:10 587.44 KBps Downloading dual-linux GPUGRID 2.19 ACEMD 3: molecular dynamics simulations for GPUs (cuda1121) 3sni-ADRIA_KDeepMD_100ns_3150-0-1-RND1427_7 00:00:24 (-) 0.00 100.000 - 12/26/2022 11:33:32 AM 0.993C + 1NV Computation error d 12/21/2022 9:59:43 AM CUDA: NVIDIA GPU 0: NVIDIA P102-100 (driver version 470.99, CUDA version 11.4, compute capability 6.1, 5060MB, 5060MB available, 10771 GFLOPS peak) 12/21/2022 9:59:43 AM OS: Linux Ubuntu: Ubuntu 20.04.5 LTS [5.4.0-135-generic|libc 2.31] ____________ try my performance program, the BoincTasks History Reader. Find and read about it here: https://forum.efmer.com/index.php?topic=1355.0 | |

| ID: 59636 | Rating: 0 | rate:

| |

|

will there be more acemd3 tasks in the near future? | |

| ID: 59645 | Rating: 0 | rate:

| |

will there be more acemd3 tasks in the near future? I would hope so. Would be nice to return to quick running acemd3 tasks that run only on the gpu. Let's hope the developer can rework the parameters for these new tasks so that they don't fail instantly on everyone's hosts. | |

| ID: 59647 | Rating: 0 | rate:

| |

Let's hope the developer can rework the parameters for these new tasks so that they don't fail instantly on everyone's hosts. + 1 | |

| ID: 59649 | Rating: 0 | rate:

| |

|

Here's an alternate perspective, looking at the time it took to reach the error is probably a valid way to compare the speed of the various hosts that ran the same scripts. Assuming that the error is in the script, of course. | |

| ID: 59650 | Rating: 0 | rate:

| |

|

Well since the wrapper hasn't changed and the app hasn't changed, then the issue with the tasks is that the configuration of the task parameters is doing something rude. | |

| ID: 59651 | Rating: 0 | rate:

| |

|

I am surprised that the Server Status page still shows some 140 tasks "in process". | |

| ID: 59654 | Rating: 0 | rate:

| |

I would put my money on the bet that simply the task generation configuration script is generating some values that are "out of bounds" in memory access. This might well be the case, but I have a different shot at an explanation: IIRC the certificates usually had to be renewed sometime in summer or early autumn, and I think it may be possible there's no working certificate laid down for ACEMD at all. ____________ - - - - - - - - - - Greetings, Jens | |

| ID: 59660 | Rating: 0 | rate:

| |

[quote]... and I think it may be possible there's no working certificate laid down for ACEMD at all. this has happened numerous times in the past :-( So it would be no surprise if also now this is the reason for the problem. | |

| ID: 59668 | Rating: 0 | rate:

| |

|

I managed to get one of the ACEMD3 tasks that came out and it ran fine on my old GTX 1060. | |

| ID: 59717 | Rating: 0 | rate:

| |

|

sad to see that they didnt update the CUDA version to support Ada | |

| ID: 59718 | Rating: 0 | rate:

| |

|

Yea :/ My 4090 is also waiting for work. | |

| ID: 59729 | Rating: 0 | rate:

| |

|

Either new acemd tasks are smaller or new version is faster. | |

| ID: 59756 | Rating: 0 | rate:

| |

Either new acemd tasks are smaller or new version is faster. my experience from the past few days is that they differ in size. Also the credit points earned differ accordingly. | |

| ID: 59779 | Rating: 0 | rate:

| |

Message boards : News : Update acemd3 app