Experimental Python tasks (beta) - task description


Hello everyone, I just wanted to give some updates about the machine learning Python jobs that Toni mentioned earlier in the "Experimental Python tasks (beta)" thread.

ID: 56977

Highly anticipated and overdue. Needless to say, kudos to you and your team for pushing the frontier of the client software's computational abilities. Looking forward to contributing in the future, hopefully with more than I have at hand right now. "problems [so far] unattainable in smaller scale settings"? 5. What is the ultimate goal of this ML project? To end up with a single group of latest-generation trained agents that results from the continuous reinforcement learning iterations? Or to have several and test/benchmark them against each other? Thx! Keep up the great work!

ID: 56978

Will you be utilizing the tensor cores present in the Nvidia RTX cards? The tensor cores are designed for this kind of workload.

ID: 56979

This is a welcome advance. Looking forward to contributing.

ID: 56989

Thank you very much for this advance.

ID: 56990

Wish you success.

ID: 56994

Ian&Steve C. wrote on June 17th:

> will you be utilizing the tensor cores present in the nvidia RTX cards? the tensor cores are designed for this kind of workload.

I am curious what the answer will be.

ID: 56996

Also, can the team comment on not just GPU "under"utilization? These have NO GPU utilization.

ID: 57000

I understand this is basic research in ML. However, I wonder which problems it would be used for here. Personally, I'm here for the bioscience. If the topic of the new ML research differs significantly and it proves successful in the first trials, I'd suggest setting it up as a separate project.

ID: 57009

This is why I asked which "problems" the resulting model is currently envisioned to tackle. But in my opinion and understanding, this is an ML project specifically set up to be trained on biomedical data sets. Thus, I'd argue that the science being done is still bio-related. I would highly appreciate feedback on the many great questions in this thread so far.

ID: 57014

ID: 57020

I noticed some Python tasks in my task history. All of them failed for me, and so far they have failed for everyone else too. Has anyone completed any?

ID: 58044

Host 132158 is getting some. The first failed with:

File "/tmp/pip-install-kvyy94ud/atari-py_bc0e384c30f043aca5cad42554937b02/setup.py", line 28, in run sys.stderr.write("Unable to execute '{}'. HINT: are you sure `make` is installed?\n".format(' '.join(cmd))) NameError: name 'cmd' is not defined ---------------------------------------- ERROR: Failed building wheel for atari-py ERROR: Command errored out with exit status 1: command: /var/lib/boinc-client/slots/0/gpugridpy/bin/python -u -c 'import io, os, sys, setuptools, tokenize; sys.argv[0] = '"'"'/tmp/pip-install-kvyy94ud/atari-py_bc0e384c30f043aca5cad42554937b02/setup.py'"'"'; __file__='"'"'/tmp/pip-install-kvyy94ud/atari-py_bc0e384c30f043aca5cad42554937b02/setup.py'"'"';f = getattr(tokenize, '"'"'open'"'"', open)(__file__) if os.path.exists(__file__) else io.StringIO('"'"'from setuptools import setup; setup()'"'"');code = f.read().replace('"'"'\r\n'"'"', '"'"'\n'"'"');f.close();exec(compile(code, __file__, '"'"'exec'"'"'))' install --record /tmp/pip-record-k6sefcno/install-record.txt --single-version-externally-managed --compile --install-headers /var/lib/boinc-client/slots/0/gpugridpy/include/python3.8/atari-py cwd: /tmp/pip-install-kvyy94ud/atari-py_bc0e384c30f043aca5cad42554937b02/

Looks like a typo.

ID: 58045

Shame the tasks are misconfigured. I ran through a dozen of them on a host with errors. With the scarcity of work, every little bit is appreciated and can be used.

ID: 58058

@abouh, could you check your configuration again? The tasks are failing during the build process with cmake. cmake normally isn't installed on Linux, and when it is, it is not normally in the PATH environment variable.

ID: 58061
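A quick way to see whether the build tools are visible is to ask the same question the failing build did. This is a minimal sketch, not part of the project's app: the tool list is an assumption based on the `make` hint in the log above and the cmake remark, and the result only reflects the PATH of the account that runs it, so run it as the user the BOINC client runs as (often `boinc`).

```python
import shutil

# Tools the failing atari-py wheel build appears to need (assumption based on
# the "are you sure `make` is installed?" hint and the cmake remark).
REQUIRED_TOOLS = ("make", "cmake")


def find_build_tools(tools=REQUIRED_TOOLS):
    """Map each tool name to its resolved path on PATH, or None if missing."""
    return {tool: shutil.which(tool) for tool in tools}


if __name__ == "__main__":
    for tool, path in find_build_tools().items():
        print(f"{tool}: {path or 'NOT FOUND on PATH'}")
```

If a tool shows as missing only under the BOINC account, the problem is that account's environment rather than the package itself.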

Hello everyone, sorry for the late reply.

ID: 58104

Multiple different failure modes among the four hosts that have failed (so far) to run workunit 27102466.

ID: 58112

The error reported in the job with result ID 32730901 is due to a conda environment error detected and solved during previous testing bouts.

ID: 58114

OK, I've reset both my Linux hosts. Fortunately I'm on a fast line for the replacement download...

ID: 58115

Task e1a15-ABOU_rnd_ppod_3-0-1-RND2976_3 was the first to run after the reset, but unfortunately it failed too.

ID: 58116

I reset the project on my host. It still failed.

ID: 58117

I couldn't get your imgur image to load, just a spinner.

ID: 58118

Yeah I get a message that Imgur is over capacity (first time I’ve ever seen that). Their site must be having maintenance or getting hammered. It was working earlier. I guess just try again a little later.

ID: 58119

I've had two tasks complete on a host that was previously erroring out:

ID: 58120

Hello everyone,

ID: 58123

Yes, I was progressively testing how many steps the agents could be trained for, and I forgot to increase the credits in proportion to the training steps. I will correct that in the very next batch. Sorry, and thanks for pointing it out.

ID: 58124

On mine, free memory (as reported in top) dropped from approximately 25,500 (when running an ACEMD task) to 7,000.

ID: 58125

Thanks for the clarification.

ID: 58127

I agree with PDW that running work on all CPU threads, when BOINC expects at most 1 CPU thread to be used, will be problematic for most users who run CPU work from other projects. The normal way of handling that is to use the [MT] (multi-threaded) plan class mechanism in BOINC; these trial apps are being issued using the same [cuda1121] plan class as the current ACEMD production work. Having said that, it might be quite tricky to devise a combined [CUDA + MT] plan class. BOINC code usually expects a simple-minded either/or solution, not a combination. And I don't really like the standard MT implementation, which defaults to using every possible CPU core in the volunteer's computer. Not polite. MT can be tamed by using an app_config.xml or app_info.xml file, but you may need to tweak both `<cpu_usage>` (for BOINC scheduling purposes) and something like a command-line parameter to control the spawning behaviour of the app.

ID: 58132

Given the current state of these beta tasks, I have done the following on my 7xGPU 48-thread system: I allowed only 3x Python beta tasks to run, since the systems only have 64GB RAM and each process is using ~20GB.

`<app_config> <app> <name>acemd3</name> <gpu_versions> <cpu_usage>1.0</cpu_usage> <gpu_usage>1.0</gpu_usage> </gpu_versions> </app> <app> <name>PythonGPU</name> <gpu_versions> <cpu_usage>5.0</cpu_usage> <gpu_usage>1.0</gpu_usage> </gpu_versions> <max_concurrent>3</max_concurrent> </app> </app_config>`

I'll see how it works out when more Python beta tasks flow, and adjust as the project adjusts settings. abouh, before you start releasing more beta tasks, could you give us a heads-up on what we should expect and/or what you changed about them?

ID: 58134
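The app_config.xml quoted above is the standard BOINC client format, and hand-editing it is simplest. For anyone managing several hosts, a small generator avoids tag typos. This is a minimal sketch using only the standard library; the values are copied from the post, and the helper names are hypothetical. The file itself goes in the project directory (for example /var/lib/boinc-client/projects/www.gpugrid.net/, the path seen in a later log), and the client has to re-read its config files afterwards.

```python
import xml.etree.ElementTree as ET


def app_element(name, cpu_usage, gpu_usage, max_concurrent=None):
    """Build one <app> entry with a <gpu_versions> block."""
    app = ET.Element("app")
    ET.SubElement(app, "name").text = name
    gpu = ET.SubElement(app, "gpu_versions")
    # CPU share BOINC reserves per task (for scheduling only, not enforced).
    ET.SubElement(gpu, "cpu_usage").text = f"{cpu_usage:.1f}"
    ET.SubElement(gpu, "gpu_usage").text = f"{gpu_usage:.1f}"
    if max_concurrent is not None:
        # Caps how many tasks of this app run at once on the host.
        ET.SubElement(app, "max_concurrent").text = str(max_concurrent)
    return app


def build_app_config(apps):
    """Wrap <app> entries in <app_config> and return the XML as a string."""
    root = ET.Element("app_config")
    for app in apps:
        root.append(app)
    if hasattr(ET, "indent"):  # pretty-printing is available from Python 3.9
        ET.indent(root)
    return ET.tostring(root, encoding="unicode")


if __name__ == "__main__":
    print(build_app_config([
        app_element("acemd3", cpu_usage=1.0, gpu_usage=1.0),
        app_element("PythonGPU", cpu_usage=5.0, gpu_usage=1.0, max_concurrent=3),
    ]))
```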

I finished up a python gpu task last night on one host and saw it spawned a ton of processes that used up 17GB of system memory. I have 32GB minimum in all my hosts and it was not a problem.

ID: 58135

> I finished up a python gpu task last night on one host and saw it spawned a ton of processes that used up 17GB of system memory. I have 32GB minimum in all my hosts and it was not a problem.

Good to know, Keith. Did you by chance get a look at GPU utilization? Or the CPU thread utilization of the spawns?

ID: 58136

> I finished up a python gpu task last night on one host and saw it spawned a ton of processes that used up 17GB of system memory. I have 32GB minimum in all my hosts and it was not a problem.

GPU utilization was at 3%. Each spawn used about 170MB of memory and fluctuated around 13-17% CPU utilization.

ID: 58137

Good to know. So what I experienced was pretty similar.

ID: 58138

Yes, primarily Universe, and a few TN-Grid tasks were running as well.

ID: 58140

I will send some more tasks later today with similar requirements to the last ones, with 32 multithreaded reinforcement learning environments running in parallel for the agent to interact with.

ID: 58141

I got 3 of them just now. All failed with tracebacks after several minutes of run time. It seems there are still some coding bugs in the application. All wingmen are failing similarly:

ID: 58143

The new one I just got seems to be doing better: less CPU use, and it looks like I'm seeing the mentioned 60-80% spikes on the GPU occasionally.

ID: 58144

I normally test the jobs locally first, and then run a couple of small batches of tasks on GPUGrid in case an error occurs that did not appear locally. The first small batch failed, so I could fix the error in the second one. Now that the second batch has succeeded, I will send a bigger batch of tasks.

ID: 58145

I must be crunching one of the fixed second batch currently on this daily driver. Seems to be progressing nicely.

ID: 58146

These new ones must be pretty long.

ID: 58147

I got my first Python WU and am a little concerned. After 3.25 hours it is only 10% complete. GPU usage seems to be about what you are all saying, and the same goes for CPU. However, I only have 8 cores/16 threads, with 6 other CPU work units running (TN-Grid and Rosetta 4.2). Should I be limiting the other work to let these run? (16 GB RAM.)

ID: 58148

I don't think BOINC knows how to interpret the estimated run times of these Python tasks. I wouldn't worry about it.

ID: 58149

I had the same feeling, Keith

ID: 58150

Also, those of us running these should probably prepare for VERY low credit rewards.

ID: 58151

I got one task early on that rewarded more than reasonable credit.

ID: 58152

That task was short, though. The threshold is around a 2 million credit reward, if I remember correctly.

ID: 58153

Confirmed. Peak FLOP Count One-time cheats

ID: 58154

Yep, I saw that. Same credit as before, and now I remember this bit of code being brought up back in the old SETI days.

ID: 58155

Awoke to find 4 PythonGPU WUs running on 3 computers. All had OPN & TN-Grid WUs running with CPU use flat-lined at 100%. Suspended all other CPU WUs to see what PG was using and got a band mostly contained in the range 20 to 40%. Then I tried a couple of scenarios.

ID: 58157

I did something similar with my two 7xGPU systems.

ID: 58158

Hello everyone,

ID: 58161

Thanks!

ID: 58162

> 1. Detected multiple CUDA out of memory errors. Locally the jobs use 6GB of GPU memory. It seems difficult to lower the GPU memory requirements for now, so jobs running in GPUs with less memory should fail.

I've tried to set the preferences on all my hosts with GPUs of less than 6GB RAM so that they don't receive the Python Runtime (GPU, beta) app:

Run only the selected applications: ACEMD3: yes

But I've still received one more Python GPU task on one of them. This makes me doubt whether GPUGRID preferences are currently working as intended or not...

Task e1a1-ABOU_rnd_ppod_8-0-1-RND5560_0: RuntimeError: CUDA out of memory.

ID: 58163

> This makes me doubt whether GPUGRID preferences are currently working as intended or not...

My question is a different one: as long as the GPUGRID team is concentrating on Python, will no more ACEMD tasks come?

ID: 58164

> But I've still received one more Python GPU task on one of them.

I had the same problem. You need to set 'Run test applications' to No. It looks like having that set to Yes will override any specific application setting you set.

ID: 58166

Thanks, I'll try

ID: 58167

> This makes me doubt whether GPUGRID preferences are currently working as intended or not...

Hard to say. Toni and Gianni both stated the work would be very limited and infrequent until they can fill the new PhD positions. But I've noticed occasional "drive-by" drops of cryptic scout work, along with the occasional standard research acemd3 resend. Sounds like @abouh is getting ready to drop a larger debugged batch of Python on GPU tasks.

ID: 58168

> Sounds like @abouh is getting ready to drop a larger debugged batch of Python on GPU tasks.

Would be great if they work on Windows, too :-)

ID: 58169

Today I will send a couple of batches with short tasks for some final debugging of the scripts, and later I will send a big batch of debugged tasks.

ID: 58170

The idea is to make it work for Windows in the future as well, once it works smoothly on Linux.

ID: 58171

Thanks, looks like they are small enough to fit on a 16GB system now, using about 12GB.

ID: 58172

> Thanks, looks like they are small enough to fit on a 16GB system now, using about 12GB.

Not sure what happened to it. Take a look: https://gpugrid.net/result.php?resultid=32731651

ID: 58173

Looks like a needed package was not retrieved properly with a "deadline exceeded" error.

ID: 58174

> Looks like a needed package was not retrieved properly with a "deadline exceeded" error.

It's interesting, looking at the stderr output: it appears that this app is communicating over the internet to send and receive data outside of BOINC, and with servers that don't belong to the project. (I think the issue is that I was connected to my VPN while checking something else, left the connection active, and the app might have had trouble reaching the site it was trying to access.) Not sure how kosher that is. I think the BOINC devs don't intend or desire this kind of behavior, and some people might have security concerns about the app doing these things outside of BOINC. It might be a little smoother to do all communication only between the host and the project, and only via the BOINC framework. If data needs to be uploaded elsewhere, it might be better for the project to do that on the backend. Just my .02

ID: 58175

> 1. Detected multiple CUDA out of memory errors. Locally the jobs use 6GB of GPU memory. It seems difficult to lower the GPU memory requirements for now, so jobs running in GPUs with less memory should fail.

I'm getting CUDA out of memory failures, and all my cards have 10 to 12 GB of GDDR: 1080 Ti, 2080 Ti, 3080 Ti and 3080. There must be something else going on. I've also stopped trying to time-slice with PythonGPU. It should have a dedicated GPU, and I'm leaving 32 CPU threads open for it. I keep looking for Pinocchio but have yet to see him. Where does it come from? Maybe I never got it.

ID: 58176

> The idea is to make it work for Windows in the future as well, once it works smoothly on Linux.

Okay, sounds good; thanks for the information.

ID: 58177

I'm running one of the new batch. At first the task was only using 2.2GB of GPU memory, but now it has climbed back up to 6.6GB.

ID: 58178

Just had one that's listed as "aborted by user." I didn't abort it.

ID: 58179

RuntimeError: CUDA out of memory. Tried to allocate 112.00 MiB (GPU 0; 11.77 GiB total capacity; 3.05 GiB already allocated; 50.00 MiB free; 3.21 GiB reserved in total by PyTorch) If reserved memory is >> allocated memory try setting max_split_size_mb to avoid fragmentation. See documentation for Memory Management and PYTORCH_CUDA_ALLOC_CONF

ID: 58180
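The numbers in that PyTorch message can be read as a small budget, which helps explain the out-of-memory failures on 10-12 GB cards mentioned above. Of 11.77 GiB, this process had only 3.21 GiB reserved and 50 MiB was free, so roughly 8.5 GiB was held by something else (the CUDA context, or another task sharing the GPU). Reserved (3.21) is also barely above allocated (3.05), so the fragmentation hint about max_split_size_mb does not seem to apply here. This is a minimal sketch; the regex assumes the exact wording quoted above, which may differ between PyTorch versions.

```python
import re

# One "<number> <unit>" amount, e.g. "112.00 MiB" or "11.77 GiB".
_AMOUNT = r"([\d.]+) ([KMG]iB)"
# Wording copied from the message above (assumption: other versions may differ).
OOM_RE = re.compile(
    rf"Tried to allocate {_AMOUNT} \(GPU (\d+); "
    rf"{_AMOUNT} total capacity; "
    rf"{_AMOUNT} already allocated; "
    rf"{_AMOUNT} free; "
    rf"{_AMOUNT} reserved in total by PyTorch\)"
)
_GIB_PER_UNIT = {"KiB": 1 / 1024**2, "MiB": 1 / 1024, "GiB": 1.0}


def _gib(value, unit):
    return float(value) * _GIB_PER_UNIT[unit]


def summarize_oom(message):
    """Return a GiB breakdown of GPU memory at the moment of the OOM, or None."""
    m = OOM_RE.search(message)
    if m is None:
        return None
    g = m.groups()
    total, free, reserved = _gib(g[3], g[4]), _gib(g[7], g[8]), _gib(g[9], g[10])
    return {
        "gpu": int(g[2]),
        "requested_gib": round(_gib(g[0], g[1]), 2),
        "total_gib": round(total, 2),
        "allocated_gib": round(_gib(g[5], g[6]), 2),
        "reserved_gib": round(reserved, 2),
        "free_gib": round(free, 2),
        # Neither free nor held by this PyTorch process: CUDA context or other tasks.
        "used_elsewhere_gib": round(total - reserved - free, 2),
    }
```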

The ray errors are normal and can be ignored.

ID: 58181

> 1. Detected multiple CUDA out of memory errors. Locally the jobs use 6GB of GPU memory. It seems difficult to lower the GPU memory requirements for now, so jobs running in GPUs with less memory should fail.

I'm not doing anything at all in mitigation for the Python on GPU tasks other than running only one at a time. I've been successful in almost all cases, other than the very first trial ones in each evolution.

ID: 58182

What was halved was the amount of Agent training per task, and therefore the total amount of time required to complete it.

ID: 58183

During the task, the performance of the Agent is intermittently sent to https://wandb.ai/ to track how the agent is doing in the environment as training progresses. It immensely helps to understand the behaviour of the agent and facilitates research, as it allows visualising the information in a structured way.

ID: 58184

Pinocchio probably only caused problems in a subset of hosts, as it was due to one of the first test batches having a wrong conda environment requirements file. It was a small batch.

ID: 58185

My machines are probably just above the minimum spec for the current batches - 16 GB RAM, and 6 GB video RAM on a GTX 1660.

ID: 58186

> What was halved was the amount of Agent training per task, and therefore the total amount of time required to complete it.

Halved? I've got one at nearly 21.5 hours on a 3080Ti and still going.

ID: 58187

This shows the timing discrepancy, a few minutes before task 32731655 completed.

ID: 58188

I still think the 5,000,000 GFLOPs count is far too low, since these run for 12-24 hrs depending on the host (GPU speed does not seem to be a factor, since GPU utilization is so low; they are most likely CPU/memory bound). There also seems to be a bit of a discrepancy in run time per task: I had a task run for 9 hrs on my 3080Ti, while another user reports 21+ hrs on his 3080Ti. And I've had several tasks killed at around 12 hrs for "exceeded time limit", while others ran for longer. Lots of inconsistencies here.

ID: 58189

Because this project still uses DCF (duration correction factor), the 'exceeded time limit' problem should go away as soon as you can get a single task to complete. Both my machines with finished tasks are now showing realistic estimates, but with DCFs of 5+ and 10+. I agree, the FLOPs estimate should be increased by that sort of multiplier to keep estimates balanced against other researchers' work for the project.

ID: 58190
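The DCF mechanism described above can be illustrated with a toy model: BOINC's raw estimate (workunit size divided by the speed it believes the device has) is multiplied by a per-project correction that jumps up when a task runs longer than expected and drifts down slowly otherwise. This is a simplified sketch of that behaviour, not BOINC's actual client code (which has extra clamps and special cases); the 1 TFLOPS device speed is a hypothetical value, and the elapsed time is Richard Haselgrove's figure from a later post.

```python
from dataclasses import dataclass


@dataclass
class DurationModel:
    """Toy model of a per-project duration correction factor (DCF).

    Simplification (assumption): only captures "jump up fast, come down slowly".
    """
    device_flops: float  # speed BOINC attributes to the device, in FLOPS
    dcf: float = 1.0

    def raw_estimate(self, rsc_fpops_est):
        return rsc_fpops_est / self.device_flops

    def estimate_seconds(self, rsc_fpops_est):
        # Raw estimate scaled by what past completions have taught the client.
        return self.raw_estimate(rsc_fpops_est) * self.dcf

    def record_completion(self, rsc_fpops_est, elapsed_seconds):
        ratio = elapsed_seconds / self.raw_estimate(rsc_fpops_est)
        if ratio > self.dcf:
            self.dcf = ratio                        # underestimate: correct at once
        else:
            self.dcf = 0.9 * self.dcf + 0.1 * ratio  # overestimate: decay slowly


if __name__ == "__main__":
    model = DurationModel(device_flops=1e12)        # hypothetical device speed
    print(model.estimate_seconds(5e15))             # raw estimate for 5,000,000 GFLOPs
    model.record_completion(5e15, 36202.136027)     # one finished task (~10 h)
    print(round(model.dcf, 2), model.estimate_seconds(5e15))
```

Under these assumptions a single 10-hour completion lifts the DCF to about 7, in the same range as the 5+ and 10+ values reported above, and the next estimate matches the observed run time.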

My system that completed a few tasks had a DCF of 36+.

ID: 58191

Checkpointing also still isn't working. See my screenshot: "CPU time since checkpoint: 16:24:44"

ID: 58192

I've checked a sched_request when reporting:

`<result> <name>e1a26-ABOU_rnd_ppod_11-0-1-RND6936_0</name> <final_cpu_time>55983.300000</final_cpu_time> <final_elapsed_time>36202.136027</final_elapsed_time>`

That's task 32731632. So it's the server applying the 'sanity(?) check' "elapsed time not less than CPU time". That's right for a single-core GPU task, but not for a task with multithreaded CPU elements.

ID: 58193
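The two values in that sched_request show why such a check misfires: CPU time divided by wall-clock time gives the average number of busy threads, and for this task it is about 1.55, so a rule that expects elapsed time to be at least CPU time cannot hold. This is a minimal sketch with hypothetical function names; it only illustrates the arithmetic and is not the server's code.

```python
def average_busy_threads(final_cpu_time, final_elapsed_time):
    """CPU seconds per wall-clock second; above 1 means several threads were busy."""
    if final_elapsed_time <= 0:
        raise ValueError("elapsed time must be positive")
    return final_cpu_time / final_elapsed_time


def passes_single_thread_check(final_cpu_time, final_elapsed_time):
    """Hypothetical 'elapsed time not less than CPU time' rule.

    Reasonable for a single-threaded app, but a multithreaded task legitimately
    accumulates more CPU time than wall-clock time and fails it.
    """
    return final_elapsed_time >= final_cpu_time


if __name__ == "__main__":
    cpu, elapsed = 55983.3, 36202.136027  # task 32731632, from the post above
    print(f"{average_busy_threads(cpu, elapsed):.2f} threads on average")
    print("passes check:", passes_single_thread_check(cpu, elapsed))
```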

As mentioned by Ian&Steve C., GPU speed only partially influences task completion time.

ID: 58194

I will look into the reported issues before sending the next batch, to see if I can find a solution for both the problem of jobs being killed due to “exceeded time limit” and the progress and checkpointing problems.

ID: 58195

> From what Ian&Steve C. mentioned, I understand that increasing the "Estimated Computation Size", however BOINC calculates that, could solve the problem of jobs being killed?

The jobs reach us with a workunit description:

`<workunit> <name>e1a24-ABOU_rnd_ppod_11-0-1-RND1891</name> <app_name>PythonGPU</app_name> <version_num>401</version_num> <rsc_fpops_est>5000000000000000.000000</rsc_fpops_est> <rsc_fpops_bound>250000000000000000.000000</rsc_fpops_bound> <rsc_memory_bound>4000000000.000000</rsc_memory_bound> <rsc_disk_bound>10000000000.000000</rsc_disk_bound> <file_ref> <file_name>e1a24-ABOU_rnd_ppod_11-0-run</file_name> <open_name>run.py</open_name> <copy_file/> </file_ref> <file_ref> <file_name>e1a24-ABOU_rnd_ppod_11-0-data</file_name> <open_name>input.zip</open_name> <copy_file/> </file_ref> <file_ref> <file_name>e1a24-ABOU_rnd_ppod_11-0-requirements</file_name> <open_name>requirements.txt</open_name> <copy_file/> </file_ref> <file_ref> <file_name>e1a24-ABOU_rnd_ppod_11-0-input_enc</file_name> <open_name>input</open_name> <copy_file/> </file_ref> </workunit>`

It's the `<rsc_fpops_est>` value which causes the problem. The job size is given as the estimated number of floating point operations to be calculated, in total. BOINC uses this, along with the estimated speed of the device it's running on, to estimate how long the task will take. For a GPU app, it's usually the speed of the GPU that counts, but in this case (although it's described as a GPU app) the dominant factor might be the speed of the CPU. BOINC doesn't take any direct notice of that.

The jobs are killed when they reach the duration calculated from the next value, `<rsc_fpops_bound>`. A quick and dirty fix while testing might be to increase that value even beyond the current 50x the original estimate, but that removes a valuable safeguard during normal running.

ID: 58196
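The two workunit values translate into times the same way: divide each by the speed BOINC attributes to the device. The sketch below works backwards from the roughly 12-hour kills reported earlier, assuming (as a simplification) that the abort time is exactly `rsc_fpops_bound` divided by that speed. Under that assumption the host was credited with about 5.8 TFLOPS and given a raw estimate (before any DCF) of only about 14 minutes, which shows how far off a GPU-speed-based estimate is for this CPU-bound app. Function names are hypothetical.

```python
def task_time_limits(rsc_fpops_est, rsc_fpops_bound, device_flops):
    """Return (estimated_seconds, abort_after_seconds) for one workunit.

    device_flops is the speed BOINC attributes to the app on this host; for a
    CPU-bound "GPU" app it may have little to do with the real pace.
    """
    if device_flops <= 0:
        raise ValueError("device_flops must be positive")
    return rsc_fpops_est / device_flops, rsc_fpops_bound / device_flops


def flops_for_abort_after(rsc_fpops_bound, seconds):
    """Inverse: the device speed at which a task would be aborted after `seconds`."""
    return rsc_fpops_bound / seconds


if __name__ == "__main__":
    flops = flops_for_abort_after(2.5e17, 12 * 3600)  # tasks killed at ~12 h
    est, limit = task_time_limits(5e15, 2.5e17, flops)
    print(f"{flops / 1e12:.1f} TFLOPS: estimate {est / 60:.0f} min, "
          f"aborted after {limit / 3600:.0f} h")
```

Raising `rsc_fpops_est` and `rsc_fpops_bound` together keeps the 50x safety margin while moving both times toward the real run length.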

I see, thank you very much for the info. I asked Toni to help me adjust the "rsc_fpops_est" parameter. Hopefully the next jobs won't be aborted by the server.

ID: 58197

Thanks @abouh for working with us in debugging your application and work units.

ID: 58198

Thank you for your kind support. During the task, the agent first interacts with the environments for a while, then uses the GPU to process the collected data and learn from it, then interacts again with the environments, and so on. This behavior can be seen in some tests described in my "Managing non-high-end hosts" thread.

ID: 58200
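The interact-then-learn alternation described above explains the utilization pattern people are seeing: long CPU-bound stretches while the environments run, then short GPU bursts. Below is a toy, pure-Python sketch of that loop shape only. `ToyEnv`, `collect` and `learn` are hypothetical names, the environment is trivial, and the "learning" is a one-number update standing in for the real GPU training step; it is not the project's algorithm.

```python
import random


class ToyEnv:
    """Hypothetical stand-in for one RL environment: action 1 is always rewarded."""

    def step(self, action):
        return 1.0 if action == 1 else 0.0


def collect(envs, p_action1, steps_per_env, rng):
    """Phase 1 (CPU-bound in the real task): every env steps with the current policy."""
    batch = []
    for env in envs:
        for _ in range(steps_per_env):
            action = 1 if rng.random() < p_action1 else 0
            batch.append((action, env.step(action)))
    return batch


def learn(p_action1, batch, lr=0.5):
    """Phase 2 (the GPU update in the real task): learn from the whole batch at once."""
    def mean_reward(a):
        rewards = [r for act, r in batch if act == a]
        return sum(rewards) / len(rewards) if rewards else 0.0

    advantage = mean_reward(1) - mean_reward(0)
    # Shift probability toward the better action, keeping a little exploration.
    return min(0.99, max(0.01, p_action1 + lr * advantage))


def run_training(iterations=5, n_envs=32, steps_per_env=16, seed=0):
    rng = random.Random(seed)
    envs = [ToyEnv() for _ in range(n_envs)]  # 32 envs, as in the task description
    p = 0.5
    for _ in range(iterations):
        batch = collect(envs, p, steps_per_env, rng)  # interact for a while...
        p = learn(p, batch)                           # ...then learn, and repeat
    return p
```

In the real task the collect phase runs the environments in parallel processes, which is why CPU thread count and system RAM matter as much as the GPU.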

I just sent another batch of tasks.

ID: 58201

> I just sent another batch of tasks.

Thank you very much for this kind of Christmas present! Merry Christmas to all crunchers worldwide 🎄✨

ID: 58202

1,000,000,000 GFLOPs - initial estimate 1690d 21:37:58. That should be enough!

ID: 58203
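The displayed estimate can be turned back into a speed: 1,000,000,000 GFLOPs is 10^18 floating point operations, and 1690d 21:37:58 is 146,093,878 seconds, so BOINC was effectively assuming about 6.8 GFLOPS for this host. Because the displayed estimate already folds in the host's DCF, this is an effective rate, not the GPU's real speed. A minimal sketch of the arithmetic; the parser assumes the `Nd HH:MM:SS` layout shown above.

```python
def parse_boinc_duration(text):
    """Parse an 'Nd HH:MM:SS' duration (days part optional) into seconds."""
    days = 0
    text = text.strip()
    if "d" in text:
        day_part, text = text.split("d", 1)
        days = int(day_part)
    hours, minutes, seconds = (int(x) for x in text.strip().split(":"))
    return ((days * 24 + hours) * 60 + minutes) * 60 + seconds


def implied_speed(rsc_fpops_est, estimate_text):
    """Effective FLOPS implied by a workunit size and a displayed runtime estimate."""
    return rsc_fpops_est / parse_boinc_duration(estimate_text)


if __name__ == "__main__":
    secs = parse_boinc_duration("1690d 21:37:58")
    print(secs, f"{implied_speed(1e18, '1690d 21:37:58') / 1e9:.2f} GFLOPS")
```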

> I tested locally and the progress and the restart.chk files are correctly generated and updated.

From a preliminary look at one new Python GPU task received today:

- Progress estimation is now working properly, updating in 0.9% increments.
- Estimated computation size has been raised to 1,000,000,000 GFLOPs, as also confirmed by Richard Haselgrove.
- Checkpointing also seems to be working, and is being stored about every two minutes.
- The learning cycle period has been reduced to 11 seconds, from 21 seconds observed on the previous task (watched with `sudo nvidia-smi dmon`).
- GPU dedicated RAM usage seems to have been reduced, but I don't know if enough for running on 4 GB RAM GPUs (?)
- Current progress for task e1a20-ABOU_rnd_ppod_13-0-1-RND1192_0 is 28.9% after 2 hours and 13 minutes running. This leads to a total true execution time of about 7 hours and 41 minutes on my Host #569442.

Well done!

ID: 58204
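The total-time figure in the last bullet is a linear projection: elapsed time divided by the fraction done. With 2 h 13 min (7,980 s) at 28.9%, that gives about 27,612 s, or roughly 7 h 40 min, in line with the estimate above (the small difference presumably comes from the seconds not shown). A minimal sketch; it assumes progress is steady, which Richard Haselgrove's later figures suggest is only approximately true.

```python
def projected_total_seconds(elapsed_seconds, fraction_done):
    """Linear projection: total = elapsed / fraction_done (assumes steady progress)."""
    if not 0 < fraction_done <= 1:
        raise ValueError("fraction_done must be in (0, 1]")
    return elapsed_seconds / fraction_done


def hours_minutes(seconds):
    """Format seconds as 'Hh MMm', rounded to the nearest second first."""
    s = round(seconds)
    return f"{s // 3600}h {s % 3600 // 60:02d}m"


if __name__ == "__main__":
    total = projected_total_seconds(2 * 3600 + 13 * 60, 0.289)
    print(hours_minutes(total))
```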

Same observed behavior. GPU memory halved, progress indicator normal and GFLOPS in line with actual usage.

ID: 58208

> - GPU dedicated RAM usage seems to have been reduced, but I don't know if enough for running on 4 GB RAM GPUs (?)

I'm answering myself: I enabled requesting Python GPU tasks on my GTX 1650 SUPER 4 GB system, and I happened to catch this previously failed task: e1a21-ABOU_rnd_ppod_13-0-1-RND2308_1. This task has passed the initial processing steps and has reached the learning cycle phase. At this point, memory usage is right at the limit of the 4 GB of available GPU RAM. Waiting to see whether this task will succeed or not. System RAM usage is still very high: 99% of the 16 GB of RAM on this system is currently in use.

ID: 58209

> - Current progress for task e1a20-ABOU_rnd_ppod_13-0-1-RND1192_0 is 28.9% after 2 hours and 13 minutes running. This leads to a total true execution time of about 7 hours and 41 minutes on my Host #569442.

That's roughly the figure I got in the early stages of today's tasks. But task 32731884 has just finished with

`<result> <name>e1a17-ABOU_rnd_ppod_13-0-1-RND0389_3</name> <final_cpu_time>59637.190000</final_cpu_time> <final_elapsed_time>39080.805144</final_elapsed_time>`

That's very similar (and on the same machine) to the one I reported in message 58193. So I don't think the task duration has changed much: maybe the progress percentage isn't quite linear (but not enough to worry about).

ID: 58210

Hello,

`21:28:07 (152316): wrapper (7.7.26016): starting`

I have found an issue from Richard Haselgrove discussing this error: https://github.com/BOINC/boinc/issues/4125

It seems that users getting this error could simply solve it by setting PrivateTmp=true. Is that correct? What is the appropriate way to change that?

ID: 58218

> It seems that users getting this error could simply solve it by setting PrivateTmp=true. Is that correct? What is the appropriate way to change that?

Right. I gave a step-by-step solution based on Richard Haselgrove's finding in my Message #55986. It worked fine for all my hosts.

ID: 58219

Thank you!

ID: 58220

Some new (to me) errors in https://www.gpugrid.net/result.php?resultid=32732017

ID: 58221

It seems checkpointing still isn't working correctly.

ID: 58222

I saw the same issue on my last task, which was checkpointed past 20% yet reset to 10% upon restart.

ID: 58223

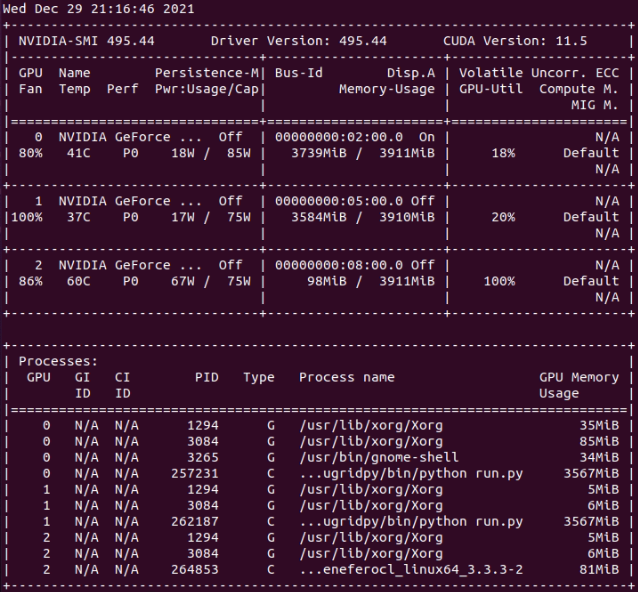

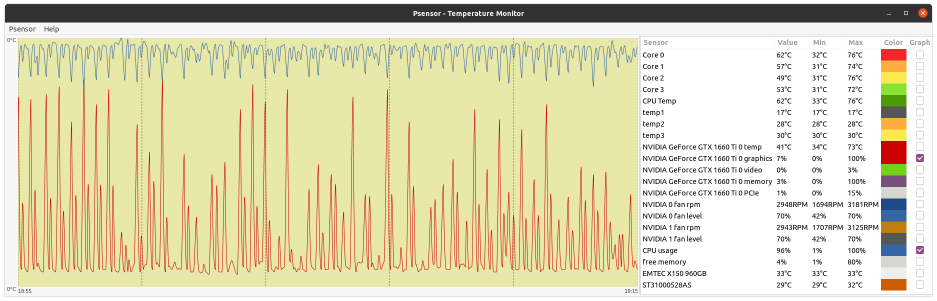

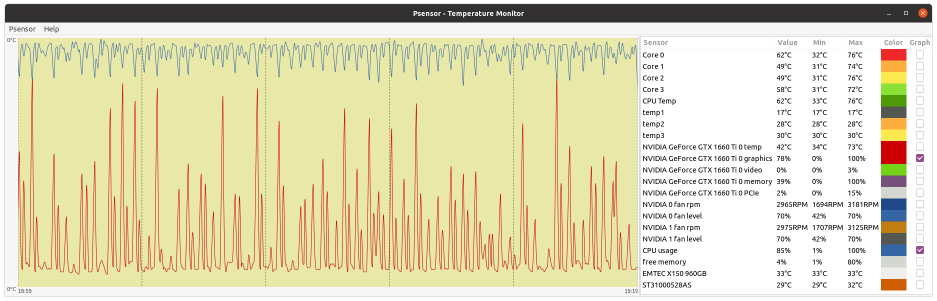

> - GPU dedicated RAM usage seems to have been reduced, but I don't know if enough for running on 4 GB RAM GPUs (?)

So far, two of my hosts with 4 GB dedicated RAM GPUs have successfully completed their latest Python GPU tasks. If GPU RAM requirements are kept this way, the app opens up to a considerably larger number of hosts.

Also, I happened to catch two simultaneous Python tasks on my triple GTX 1650 GPU host. I then urgently suspended requesting GPUGRID tasks in BOINC Manager... Why? This host's system RAM size is 32 GB. When the second Python task started, free system RAM decreased to 1% (!). I roughly estimate that the environment for each Python task takes about 16 GB of system RAM. I guess that a possible third concurrent task might have crashed itself, or even crashed all three Python tasks, due to lack of system RAM. I was watching the Psensor readings when the first of the two Python tasks finished, and the free system memory then increased sharply from 1% to 38%. I also took an nvidia-smi screenshot, where it can be seen that the two Python tasks were running on GPU 0 and GPU 1 respectively, while GPU 2 was processing a PrimeGrid CUDA GPU task.

ID: 58225
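The RAM figures in this thread suggest a simple way to choose `max_concurrent`: divide usable system RAM by the per-task footprint and round down. Two tasks at about 16 GB each left only 1% free on a 32 GB host, so keeping a reserve for the OS and other work is prudent. A minimal sketch; the reserve value in the example is a hypothetical choice, and the per-task sizes are the rough figures reported by posters above (about 20 GB and 16 GB).

```python
def max_tasks_by_ram(total_ram_gb, per_task_gb, reserve_gb=0.0):
    """How many tasks fit in system RAM while keeping reserve_gb free."""
    usable = total_ram_gb - reserve_gb
    if usable <= 0 or per_task_gb <= 0:
        return 0
    return int(usable // per_task_gb)


if __name__ == "__main__":
    print(max_tasks_by_ram(64, 20))                 # 7xGPU hosts with 64 GB
    print(max_tasks_by_ram(32, 16))                 # triple GTX 1650 host, no reserve
    print(max_tasks_by_ram(32, 16, reserve_gb=4))   # same host, hypothetical 4 GB reserve
```

The result is what would go into `<max_concurrent>` for PythonGPU in app_config.xml.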

Now that I've upgraded my single 3080Ti host from a 5950X w/16GB RAM to a 7402P/128GB RAM, I want to see if I can even run 2x GPUGRID tasks on the same GPU. I see about 5GB VRAM use on the tasks I've processed so far, so with so much extra system RAM and 12GB VRAM, it might work lol.

ID: 58226

Regarding the checkpointing problem, the approach I follow is to check the progress file (if it exists) at the beginning of the Python script and then continue the job from there.

ID: 58227
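A resume-from-progress-file approach like the one described can be sketched as follows. Writing the file atomically (temporary file, then `os.replace`) means a task killed mid-write restarts from the last complete checkpoint instead of a truncated one. This is a minimal sketch, not the project's script: the file name and JSON layout are hypothetical, and a real training job would have to save the model and optimizer state in the same atomic write, so the step counter and the weights can never disagree after a restart.

```python
import json
import os
import tempfile

# Hypothetical file name; the real task uses its own progress/restart.chk files.
CHECKPOINT = "progress.json"


def load_step(path=CHECKPOINT):
    """Return the last saved training step, or 0 if there is no usable checkpoint."""
    try:
        with open(path) as f:
            return int(json.load(f)["step"])
    except (FileNotFoundError, ValueError, KeyError, TypeError):
        # Missing, truncated or malformed file: start from the beginning.
        return 0


def save_step(step, path=CHECKPOINT):
    """Write the checkpoint atomically: a crash mid-write leaves the old file intact."""
    directory = os.path.dirname(os.path.abspath(path))
    fd, tmp_path = tempfile.mkstemp(dir=directory, suffix=".tmp")
    with os.fdopen(fd, "w") as f:
        json.dump({"step": step}, f)
        f.flush()
        os.fsync(f.fileno())
    os.replace(tmp_path, path)  # atomic replacement within the same filesystem


def train_with_checkpoints(total_steps, checkpoint_every, path=CHECKPOINT):
    """Resume from the saved step (if any); return (start_step, final_step)."""
    start = step = load_step(path)
    while step < total_steps:
        step += 1  # placeholder for one unit of real training work
        if step % checkpoint_every == 0 or step == total_steps:
            save_step(step, path)
    return start, step
```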

> Now that I've upgraded my single 3080Ti host from a 5950X w/16GB RAM to a 7402P/128GB RAM, I want to see if I can even run 2x GPUGRID tasks on the same GPU. I see about 5GB VRAM use on the tasks I've processed so far, so with so much extra system RAM and 12GB VRAM, it might work lol.

The last two tasks on my system with a 3080Ti ran concurrently and completed successfully. https://www.gpugrid.net/results.php?hostid=477247

ID: 58228

Errors in e6a12-ABOU_rnd_ppod_15-0-1-RND6167_2 (created today):

ID: 58248

One user mentioned that they could not solve the error

`INTERNAL ERROR: cannot create temporary directory!`

This is the configuration he is using:

`### Editing /etc/systemd/system/boinc-client.service.d/override.conf`

I was just wondering if there is any possible reason why it should not work.

ID: 58249

|

I am using a systemd file generated from a PPA maintained by Gianfranco Costamagna. It's automatically generated from Debian sources, and kept up-to-date with new releases automatically. It's currently supplying a BOINC suite labelled v7.16.17 [Unit] Description=Berkeley Open Infrastructure Network Computing Client Documentation=man:boinc(1) After=network-online.target [Service] Type=simple ProtectHome=true PrivateTmp=true ProtectSystem=strict ProtectControlGroups=true ReadWritePaths=-/var/lib/boinc -/etc/boinc-client Nice=10 User=boinc WorkingDirectory=/var/lib/boinc ExecStart=/usr/bin/boinc ExecStop=/usr/bin/boinccmd --quit ExecReload=/usr/bin/boinccmd --read_cc_config ExecStopPost=/bin/rm -f lockfile IOSchedulingClass=idle # The following options prevent setuid root as they imply NoNewPrivileges=true # Since Atlas requires setuid root, they break Atlas # In order to improve security, if you're not using Atlas, # Add these options to the [Service] section of an override file using # sudo systemctl edit boinc-client.service #NoNewPrivileges=true #ProtectKernelModules=true #ProtectKernelTunables=true #RestrictRealtime=true #RestrictAddressFamilies=AF_INET AF_INET6 AF_UNIX #RestrictNamespaces=true #PrivateUsers=true #CapabilityBoundingSet= #MemoryDenyWriteExecute=true [Install] WantedBy=multi-user.target That has the 'PrivateTmp=true' line in the [Service] section of the file, rather than isolated at the top as in your example. I don't know Linux well enough to know how critical the positioning is. We had long discussions in the BOINC development community a couple of years ago, when it was discovered that the 'PrivateTmp=true' setting blocked access to BOINC's X-server based idle detection. The default setting was reversed for a while, until it was discovered that the reverse 'PrivateTmp=false' setting caused the problem creating temporary directories that we observe here. 
I think that the default setting was reverted to true, but the discussion moved into the darker reaches of the Linux package maintenance managers, and the BOINC development cycle became somewhat disjointed. I'm no longer fully up-to-date with the state of play. | |

| ID: 58250 | Rating: 0 | rate:
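On the positioning question: PrivateTmp= is a [Service] setting for a .service unit, so in an override file it needs a [Service] header above it. As a quick way to check a unit or override file, here is a small Python sketch (our own illustration, not part of BOINC or systemd) that parses the text with the standard-library configparser and reports whether PrivateTmp is enabled inside [Service]. A directive with no section header above it is reported as not applying.

```python
import configparser

# Spellings systemd accepts as boolean "true".
TRUE_VALUES = {"1", "yes", "y", "true", "t", "on"}


def private_tmp_enabled(unit_text: str) -> bool:
    """Report whether PrivateTmp= is switched on inside a [Service] section.

    configparser only approximates systemd's unit-file syntax (it does not,
    for example, reproduce systemd's handling of repeated keys), so treat
    this as a quick sanity check rather than a validator.
    """
    parser = configparser.ConfigParser(interpolation=None, strict=False)
    parser.optionxform = str  # keep systemd's case-sensitive key names
    try:
        parser.read_string(unit_text)
    except configparser.MissingSectionHeaderError:
        # A directive placed above every section header belongs to no section.
        return False
    value = parser.get("Service", "PrivateTmp", fallback="false")
    return value.strip().lower() in TRUE_VALUES
```

For example, `private_tmp_enabled("[Service]\nPrivateTmp=true\n")` is true, while the same line without the `[Service]` header is reported as false.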

| |

|

A simpler answer might be the line "### Lines below this comment will be discarded", so the file as posted won't do anything at all - in particular, it won't run BOINC! | |

| ID: 58251 | Rating: 0 | rate:
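The marker line means what it says: only text placed above it ends up in the override file. A minimal Python sketch of that rule, assuming the marker text quoted above is matched exactly (an illustration of the behaviour, not systemctl's actual implementation):

```python
from typing import List

# Marker line from the `systemctl edit` template, as quoted in the post.
DISCARD_MARKER = "### Lines below this comment will be discarded"


def kept_override_lines(edited_text: str) -> List[str]:
    """Return the lines above the discard marker, i.e. what would be kept."""
    kept = []
    for line in edited_text.splitlines():
        if line.strip() == DISCARD_MARKER:
            break  # this line and everything after it is thrown away
        kept.append(line)
    return kept
```

So the `[Service]` header and the `PrivateTmp=true` line belong between the "### Editing ..." header and the marker, not below it.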

| |

|

Thank you! I reviewed the code and detected the source of the error. I am currently working to solve it. | |

| ID: 58253 | Rating: 0 | rate:

| |

|

Everybody seems to be getting the same error in today's tasks: | |

| ID: 58254 | Rating: 0 | rate:

| |

|

I believe I got one of the fixed test tasks this morning, judging by the short crunch time and valid report. | |

| ID: 58255 | Rating: 0 | rate:

| |

|

Yes, your workunit was "created 7 Jan 2022 | 17:50:07 UTC" - that's a couple of hours after the ones I saw. | |

| ID: 58256 | Rating: 0 | rate:

| |

|

I just sent a batch that seems to fail with File "/var/lib/boinc-client/slots/30/python_dependencies/ppod_buffer_v2.py", line 325, in before_gradients. For some reason it did not crash locally. "Fortunately" it will crash after only a few minutes, and it is easy to solve. I am very sorry for the inconvenience... I will also send a corrected batch with tasks of normal duration. I have tried to reduce the GPU memory requirements a bit in the new tasks. | |

| ID: 58263 | Rating: 0 | rate:

| |

|

Got one of those - failed as you describe. | |

| ID: 58264 | Rating: 0 | rate:

| |

|

I got 20 bad WUs today on this host: https://www.gpugrid.net/results.php?hostid=520456
Stderr output:
<core_client_version>7.16.6</core_client_version>
<![CDATA[
<message>
process exited with code 195 (0xc3, -61)</message>
<stderr_txt>
13:25:53 (6392): wrapper (7.7.26016): starting
13:25:53 (6392): wrapper (7.7.26016): starting
13:25:53 (6392): wrapper: running /usr/bin/flock (/var/lib/boinc-client/projects/www.gpugrid.net/miniconda.lock -c "/bin/bash ./miniconda-installer.sh -b -u -p /var/lib/boinc-client/projects/www.gpugrid.net/miniconda && /var/lib/boinc-client/projects/www.gpugrid.net/miniconda/bin/conda install -m -y -p gpugridpy --file requirements.txt ")
0%| | 0/45 [00:00<?, ?it/s]
concurrent.futures.process._RemoteTraceback:
'''
Traceback (most recent call last):
File "concurrent/futures/process.py", line 368, in _queue_management_worker
File "multiprocessing/connection.py", line 251, in recv
TypeError: __init__() missing 1 required positional argument: 'msg'
'''
The above exception was the direct cause of the following exception:
Traceback (most recent call last):
File "entry_point.py", line 69, in <module>
File "concurrent/futures/process.py", line 484, in _chain_from_iterable_of_lists
File "concurrent/futures/_base.py", line 611, in result_iterator
File "concurrent/futures/_base.py", line 439, in result
File "concurrent/futures/_base.py", line 388, in __get_result
concurrent.futures.process.BrokenProcessPool: A process in the process pool was terminated abruptly while the future was running or pending.
[6689] Failed to execute script entry_point
13:25:58 (6392): /usr/bin/flock exited; CPU time 3.906269
13:25:58 (6392): app exit status: 0x1
13:25:58 (6392): called boinc_finish(195)
</stderr_txt>
]]> | |

| ID: 58265 | Rating: 0 | rate:

| |

|

I errored out 12 tasks created from 10:09:55 to 10:40:06. | |

| ID: 58266 | Rating: 0 | rate:

| |

|

And two of those were the batch error resends that now have failed. | |

| ID: 58268 | Rating: 0 | rate:

| |

|

You need to look at the creation time of the master WU, not of the individual tasks (which will vary, even within a WU, let alone a batch of WUs). | |

| ID: 58269 | Rating: 0 | rate:

| |

|

I have seen this error a few times: "concurrent.futures.process.BrokenProcessPool: A process in the process pool was terminated abruptly while the future was running or pending." Do you think it could be due to a lack of resources? I think Linux starts killing processes if you are over capacity. | |

| ID: 58270 | Rating: 0 | rate:
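The symptom is at least consistent with a worker being killed from outside. This Linux-only Python sketch makes a pool worker send itself SIGKILL (the signal the OOM killer uses) and gets this same BrokenProcessPool exception in the parent. It only shows that an externally killed worker produces this message; it does not prove the OOM killer is responsible on the affected hosts, and other failures can break a pool too.

```python
import multiprocessing
import os
import signal
from concurrent.futures import ProcessPoolExecutor
from concurrent.futures.process import BrokenProcessPool


def killed_like_oom_victim(_):
    # SIGKILL is the signal the Linux OOM killer sends; the worker cannot
    # catch it or report back, so the parent only sees a vanished process.
    os.kill(os.getpid(), signal.SIGKILL)


def reproduce_broken_pool() -> str:
    # The "fork" start method keeps this Linux sketch self-contained.
    ctx = multiprocessing.get_context("fork")
    with ProcessPoolExecutor(max_workers=2, mp_context=ctx) as pool:
        try:
            list(pool.map(killed_like_oom_victim, range(4)))
        except BrokenProcessPool as exc:
            return f"{type(exc).__name__}: {exc}"
    return "pool survived"


if __name__ == "__main__":
    print(reproduce_broken_pool())
```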

| |

|

Might be the OOM-Killer kicking in. You would need to grep -i kill /var/log/messages* to check if processes were killed by the OOM-Killer. If that is the case you would have to configure /etc/sysctl.conf to let the system be less sensitive to brief out of memory conditions. | |

| ID: 58271 | Rating: 0 | rate:
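The same check can be scripted. A minimal Python sketch that scans log lines for OOM-killer reports; the regular expression is an assumption, since the kernel's wording differs between versions. On Ubuntu the kernel messages usually end up in /var/log/kern.log or /var/log/syslog (or `journalctl -k`) rather than /var/log/messages.

```python
import re
import sys
from typing import Iterable, List, Tuple

# Assumed pattern: kernel wording varies between versions, but OOM reports
# usually contain "Killed process <pid> (<command>)".
OOM_PATTERN = re.compile(r"Killed process (\d+) \(([^)]+)\)")


def oom_victims(log_lines: Iterable[str]) -> List[Tuple[int, str]]:
    """Return (pid, command) pairs for processes the OOM killer reports."""
    victims = []
    for line in log_lines:
        match = OOM_PATTERN.search(line)
        if match:
            victims.append((int(match.group(1)), match.group(2)))
    return victims


if __name__ == "__main__":
    # Usage: python3 oom_scan.py /var/log/kern.log   (path is an example)
    for path in sys.argv[1:]:
        with open(path, errors="replace") as log:
            for pid, command in oom_victims(log):
                print(f"{path}: OOM killer ended PID {pid} ({command})")
```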

| |

|

I Googled the error message, and came up with this stackoverflow thread. "The main module must be importable by worker subprocesses. This means that ProcessPoolExecutor will not work in the interactive interpreter. Calling Executor or Future methods from a callable submitted to a ProcessPoolExecutor will result in deadlock." Other search results may provide further clues. | |

| ID: 58272 | Rating: 0 | rate:

| |

|

Thanks! Out of the possible explanations listed in the thread, I suspect the OS could be killing the processes due to a lack of resources. It could be insufficient RAM, or maybe Python raises this error if the ratio of processes to cores is high? (I have seen some machines with 4 CPUs, and the task spawns 32 reinforcement learning environments.) | |

| ID: 58273 | Rating: 0 | rate:

| |

|

What version of Python are the hosts that have the errors running? | |

| ID: 58274 | Rating: 0 | rate:

| |

What version of Python are the hosts that have the errors running? The same Python version as mine currently. In case of doubt about conflicting Python versions, I published the solution that I applied to my hosts in Message #57833. It worked for my Ubuntu 20.04.3 LTS Linux distribution, but user mmonnin replied that this didn't work for him. mmonnin kindly published an alternative approach in his Message #57840. | |

| ID: 58275 | Rating: 0 | rate:

| |

|

I saw the prior post and was about to mention the same thing. Not sure which one works as the PC has been able to run tasks. | |

| ID: 58276 | Rating: 0 | rate:

| |

|

All jobs should use the same Python version (3.8.10); I define it in the requirements.txt file of the conda environment. | |

| ID: 58277 | Rating: 0 | rate:
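If a host ever seems to run a different interpreter, a task could compare the running interpreter against the pin at startup. A Python sketch, assuming the requirements.txt contains a conda-style line such as python=3.8.10 (the file itself isn't shown in the thread, and the helper names are hypothetical):

```python
import re
import sys
from typing import Optional, Sequence, Tuple

# Assumed pin format: "python=3.8.10" (conda) or "python==3.8.10" (pip).
PIN = re.compile(r"\s*python\s*==?\s*(\d+(?:\.\d+)*)\s*")


def pinned_python(requirements_text: str) -> Optional[Tuple[int, ...]]:
    """Return the version from a `python=X.Y.Z` line, if there is one."""
    for line in requirements_text.splitlines():
        match = PIN.fullmatch(line)
        if match:
            return tuple(int(part) for part in match.group(1).split("."))
    return None


def matches_pin(pin: Tuple[int, ...], running: Sequence = sys.version_info) -> bool:
    """Compare only as many fields as the pin specifies (3.8 matches 3.8.10)."""
    return tuple(running[: len(pin)]) == pin
```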

| |

|

I have a failed task today involving pickle. | |

| ID: 58278 | Rating: 0 | rate:

| |

|

The tasks run on my Tesla K20 for a while, but then fail when they need to use PyTorch, which requires a higher CUDA compute capability. Oh well. Guess I'll stick to the ACEMD tasks. The error output doesn't list the requirements properly (the format placeholders are printed unfilled), but from a little Googling, PyTorch was updated to require 3.7 within the past couple of years. The only Kepler card that has 3.7 is the Tesla K80.
[W NNPACK.cpp:79] Could not initialize NNPACK! Reason: Unsupported hardware.
/var/lib/boinc-client/slots/2/gpugridpy/lib/python3.8/site-packages/torch/cuda/__init__.py:120: UserWarning: Found GPU%d %s which is of cuda capability %d.%d. PyTorch no longer supports this GPU because it is too old. The minimum cuda capability supported by this library is %d.%d.
While I'm here, is there any way to force the project to update my hardware configuration? It thinks my host has two Quadro K620s instead of one of those and the Tesla. | |

| ID: 58279 | Rating: 0 | rate:
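A quick pre-flight check along these lines shows which cards fall below the minimum. A Python sketch; the 3.7 threshold is the figure from the post, not an authoritative value for every PyTorch build, and `local_devices()` needs a CUDA-enabled torch installed.

```python
from typing import List, Sequence, Tuple

# Minimum compute capability reported in the post for the PyTorch build in use.
MIN_CAPABILITY = (3, 7)

Device = Tuple[str, Tuple[int, int]]  # (name, (major, minor))


def unsupported(devices: Sequence[Device], minimum: Tuple[int, int] = MIN_CAPABILITY) -> List[str]:
    """Names of GPUs whose compute capability is below `minimum`."""
    return [name for name, capability in devices if tuple(capability) < minimum]


def local_devices() -> List[Device]:
    """Query local GPUs through PyTorch (needs a CUDA-enabled torch install)."""
    import torch

    return [
        (torch.cuda.get_device_name(i), torch.cuda.get_device_capability(i))
        for i in range(torch.cuda.device_count())
    ]


if __name__ == "__main__":
    # The two cards in this post: Tesla K20 is 3.5, Quadro K620 is 5.0.
    print(unsupported([("Tesla K20", (3, 5)), ("Quadro K620", (5, 0))]))  # ['Tesla K20']
```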

| |

While I'm here, is there any way to force the project to update my hardware configuration? It thinks my host has two Quadro K620s instead of one of those and the Tesla. This is a problem (feature?) of BOINC, not the project. The project only knows what hardware you have based on what BOINC communicates to it. With cards from the same vendor (NVIDIA/AMD/Intel), BOINC only lists the "best" card and then appends a number for how many devices you have in total from that vendor. It will only list different models if they are from different vendors. Within the NVIDIA vendor group, BOINC figures out the "best" device by checking the compute capability first, then memory capacity, then some third metric that I can't remember right now. BOINC deems the K620 to be "best" because it has a higher compute capability (5.0) than the Tesla K20 (3.5), even though the K20 is arguably the better card, with more/faster memory and more cores. All in all, this has nothing to do with the project, and everything to do with BOINC's GPU ranking code. | |

| ID: 58280 | Rating: 0 | rate:
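The ranking described above amounts to a lexicographic comparison. A Python sketch using only the two criteria named in the post (the third, forgotten one is left out, and BOINC's real implementation is C++ that may differ in detail). The memory sizes are the cards' nominal capacities.

```python
from dataclasses import dataclass
from typing import List, Tuple


@dataclass
class Gpu:
    name: str
    compute_capability: Tuple[int, int]
    memory_mb: int


def boinc_style_summary(gpus: List[Gpu]) -> Tuple[str, int]:
    """Return the 'best' GPU's name and the total count, as BOINC reports them.

    Criteria follow the post: compute capability first, then memory.
    max() returns the first of several equal items, so ties go to the
    lowest device number.
    """
    best = max(gpus, key=lambda g: (g.compute_capability, g.memory_mb))
    return best.name, len(gpus)


if __name__ == "__main__":
    host = [Gpu("Tesla K20", (3, 5), 5120), Gpu("Quadro K620", (5, 0), 2048)]
    print(boinc_style_summary(host))  # ('Quadro K620', 2)
```

Because compute capability is compared before memory, the K620's 5.0 outranks the K20's 3.5 regardless of the K20's larger memory.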

| |

While I'm here, is there any way to force the project to update my hardware configuration? It thinks my host has two Quadro K620s instead of one of those and the Tesla. It's often described as the "best" card, but it's just the first one: https://www.gpugrid.net/show_host_detail.php?hostid=475308 This host has a 1070 and a 1080 but just shows 2x 1070s, as the 1070 is in the first slot. Any check for the "best" card would come up with the 1080, or with the 1070 Ti that used to be there alongside the 1070. | |

| ID: 58281 | Rating: 0 | rate:

| |

In your case, the metrics that BOINC is looking at are identical between the two cards (actually all three of the 1070, 1070 Ti, and 1080 have identical specs as far as BOINC ranking is concerned). All have the same amount of VRAM and the same compute capability. So the tie goes to device number, I guess. If you were to swap the 1080 for even a weaker card with a better CC (like a GTX 1650), then that would get picked up instead, even when not in the first slot. | |

| ID: 58282 | Rating: 0 | rate:

| |

|

Ah, I get it. I thought it was just stuck, because it did have two K620s before. I didn't realize BOINC was just incapable of acknowledging different cards from the same vendor. Does this affect project statistics? The Milkyway@home folks are gonna have real inflated opinions of the K620 next time they check the numbers haha | |

| ID: 58283 | Rating: 0 | rate:

| |

|

Interesting. I had seen this error once before locally, and I assumed it was due to a corrupted input file. | |

| ID: 58284 | Rating: 0 | rate:

| |

|

This is the document I had found about fixing the BrokenProcessPool error. | |

| ID: 58285 | Rating: 0 | rate:

| |

|

@abouh: Thank you for PMing me twice! | |

| ID: 58286 | Rating: 0 | rate:

| |

Also I happened to catch two simultaneous Python tasks on my triple GTX 1650 GPU host. After upgrading system RAM from 32 GB to 64 GB on the above-mentioned host, it has successfully processed three concurrent ABOU Python GPU tasks:
e2a43-ABOU_rnd_ppod_baseline_rnn-0-1-RND6933_3 - Link: https://www.gpugrid.net/result.php?resultid=32733458
e2a21-ABOU_rnd_ppod_baseline_rnn-0-1-RND3351_3 - Link: https://www.gpugrid.net/result.php?resultid=32733477
e2a27-ABOU_rnd_ppod_baseline_rnn-0-1-RND5112_1 - Link: https://www.gpugrid.net/result.php?resultid=32733441
More details in Message #58287. | |

| ID: 58288 | Rating: 0 | rate:

| |

|

Hello everyone, Traceback (most recent call last): It seems like the task is not allowed to create new directories inside its working directory. I'm just wondering if it could be some kind of configuration problem, like the "INTERNAL ERROR: cannot create temporary directory!" error for which a solution was already shared. | |

| ID: 58289 | Rating: 0 | rate:

| |

|

My question would be: what is the working directory? It shows as /home/boinc-client/slots/1/..., but the final failure concerns /var/lib/boinc-client. That sounds like a mixed-up installation of BOINC: 'home' sounds like a location for a user-mode installation of BOINC, but '/var/lib/' would be normal for a service-mode installation. It's reasonable for the two different locations to have different write permissions. What app is doing the writing in each case, and what account are they running under? Could the final write location be hard-coded, but the others dependent on locations supplied by the local BOINC installation? | |

| ID: 58290 | Rating: 0 | rate:

| |

|

Hi | |

| ID: 58291 | Rating: 0 | rate:

| |

|

Right, so the working directory is /home/boinc-client/slots/1/..., to which the script has full access. The script tries to create a directory to save the logs, but I guess it should not do it in /var/lib/boinc-client. So I think the problem is just that the package I am using to log results saves them outside the working directory by default. Should be easy to fix. | |

| ID: 58292 | Rating: 0 | rate:

| |

|

BOINC has the concept of a "data directory". Absolutely everything that has to be written should be written somewhere in that directory or its sub-directories. Everything else must be assumed to be sandboxed and inaccessible. | |

| ID: 58293 | Rating: 0 | rate:
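A task can guard against writing outside the data directory by checking each output path before using it. A Python sketch with a hypothetical helper name. It uses os.path.commonpath because the task environment is pinned to Python 3.8, where pathlib's is_relative_to() doesn't exist yet.

```python
import os


def inside_data_dir(path: str, data_dir: str) -> bool:
    """True if `path` resolves to a location under `data_dir`.

    os.path.commonpath works on Python 3.8, which lacks
    pathlib.Path.is_relative_to() (added in 3.9).
    """
    target = os.path.realpath(path)
    root = os.path.realpath(data_dir)
    return os.path.commonpath([target, root]) == root
```

Comparing whole path components matters: a plain string prefix test would wrongly accept /var/lib/boinc-client-old as being inside /var/lib/boinc-client.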

| |

The PC now has a 1080 and a 1080 Ti, with the Ti having more VRAM. BOINC shows 2x 1080. The 1080 is GPU 0 in nvidia-smi, and so were the other GPUs BOINC has displayed. The Ti is in the physical first slot. This PC happened to pick up two Python tasks. They aren't taking 4 days this time: 5:45 (hr:min) at 38.8%, and 31 min at 11.8%. | |

| ID: 58294 | Rating: 0 | rate:
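A rough way to turn those progress figures into an expected runtime is to divide elapsed time by fraction done. A Python sketch, assuming progress advances at a constant rate:

```python
def projected_total_hours(elapsed_hours: float, fraction_done: float) -> float:
    """Naive linear projection: assumes progress advances at a constant rate."""
    if not 0 < fraction_done <= 1:
        raise ValueError("fraction_done must be in (0, 1]")
    return elapsed_hours / fraction_done


if __name__ == "__main__":
    print(round(projected_total_hours(5 + 45 / 60, 0.388), 1))  # 14.8
    print(round(projected_total_hours(31 / 60, 0.118), 1))      # 4.4
```

The two tasks project to roughly 14.8 h and 4.4 h, about a threefold difference, so the straight-line estimate should be treated with caution.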

| |

What motherboard, and what version of BOINC? Your hosts are hidden, so I cannot inspect them myself. PCIe enumeration and ordering can be inconsistent across consumer boards. My server boards seem to enumerate starting from the slot furthest from the CPU socket, while most consumer boards are the opposite, with device 0 at the slot closest to the CPU socket. Or do you perhaps run a locked coproc_info.xml file? That would prevent any GPU changes from being picked up by BOINC if it can't write to the coproc file. Edit: I also forgot that most versions of BOINC incorrectly detect NVIDIA GPU memory. They will all max out at 4 GB due to a bug in BOINC. So to BOINC, your 1080 Ti has the same amount of memory as your 1080, and since the 1080 Ti is still a Pascal card like the 1080, it has the same compute capability, so you're still running into identical specs between them all. To get it to sort properly, you need to fix the BOINC code, or use a GPU with a higher or lower compute capability. Put a Turing card in the system, not in the first slot, and BOINC will pick it up as GPU0. | |

| ID: 58295 | Rating: 0 | rate:
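The combined effect of the 4 GB ceiling (as described in the post) and equal compute capability can be sketched in Python: once memory is capped, the 1080 and 1080 Ti compare as equal and the first device wins, while a card with a higher compute capability wins from any slot. Assumed values: 8 GB for the 1080, 11 GB for the 1080 Ti, 4 GB for the GTX 1650, compute capability 6.1 for Pascal and 7.5 for Turing.

```python
from typing import List, Tuple

CAP_MB = 4096  # the 4 GB ceiling described in the post

Gpu = Tuple[str, Tuple[int, int], int]  # (name, compute capability, real memory in MB)


def displayed_gpu(gpus: List[Gpu], cap_mb: int = CAP_MB) -> str:
    """Rank by compute capability, then by memory as BOINC would report it."""
    best = max(gpus, key=lambda g: (g[1], min(g[2], cap_mb)))
    return best[0]  # ties keep the earliest device
```

With the cap in place, `displayed_gpu([("GTX 1080", (6, 1), 8192), ("GTX 1080 Ti", (6, 1), 11264)])` returns the 1080; raising `cap_mb` far enough lets the 1080 Ti win on memory.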

| |

|

The tests continue. Just reported e2a13-ABOU_rnd_ppod_baseline_cnn_nophi_2-0-1-RND9761_1, with final stats <result> <name>e2a13-ABOU_rnd_ppod_baseline_cnn_nophi_2-0-1-RND9761_1</name> <final_cpu_time>107668.100000</final_cpu_time> <final_elapsed_time>46186.399529</final_elapsed_time> That's an average CPU core count of 2.33 over the entire run - that's high for what is planned to be a GPU application. We can manage with that - I'm sure we all want to help develop and test the application for the coming research run - but I think it would be helpful to put more realistic usage values into the BOINC scheduler. | |

| ID: 58296 | Rating: 0 | rate:
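The 2.33 figure is CPU time divided by elapsed (wall-clock) time. A small Python sketch that recomputes it from the reported values; the post shows only the start of the <result> block, so the sketch closes the tag itself so that the fragment parses.

```python
import xml.etree.ElementTree as ET

# Values from the post; the closing </result> tag is added for parsing.
RESULT_XML = """<result>
  <name>e2a13-ABOU_rnd_ppod_baseline_cnn_nophi_2-0-1-RND9761_1</name>
  <final_cpu_time>107668.100000</final_cpu_time>
  <final_elapsed_time>46186.399529</final_elapsed_time>
</result>"""


def average_cores(result_xml: str) -> float:
    """CPU seconds divided by wall-clock seconds = mean number of busy cores."""
    root = ET.fromstring(result_xml)
    cpu = float(root.findtext("final_cpu_time"))
    elapsed = float(root.findtext("final_elapsed_time"))
    return cpu / elapsed


if __name__ == "__main__":
    print(round(average_cores(RESULT_XML), 2))  # 2.33
```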

| |

|

It's not a GPU application. It uses both CPU and GPU. | |

| ID: 58297 | Rating: 0 | rate:

| |

|

Do you mean changing some of the BOINC parameters like it was done in the case of <rsc_fpops_est>? | |

| ID: 58298 | Rating: 0 | rate:

| |

|

It would need to be done in the plan class definition. Toni said that you define your plan classes in C++ code, so there are some examples in Specifying plan classes in C++. | |

| ID: 58299 | Rating: 0 | rate:

| |

|

It seems to work better now, but I've reached the time limit after 1800 s:
19:39:23 (6124): task /usr/bin/flock reached time limit 1800
application ./gpugridpy/bin/python missing | |

| ID: 58300 | Rating: 0 | rate:

| |

|

I'd like to hear what others are using for ncpus for their Python tasks in their app_config files. | |

| ID: 58301 | Rating: 0 | rate:

| |

|

I'm still running them at 1 CPU plus 1 GPU. They run fine, but when they are busy on the CPU-only sections, they steal time from the CPU tasks that are running at the same time - most obviously from CPDN. | |

| ID: 58302 | Rating: 0 | rate:

| |

|

You could employ Process Lasso on the apps and raise their priority, I suppose. | |

| ID: 58303 | Rating: 0 | rate:

| |

I'd like to hear what others are using for ncpus for their Python tasks in their app_config files. I think the Python GPU app is very efficient at adapting to any number of CPU cores and taking advantage of available CPU resources. This seems to be somewhat independent of the ncpus parameter in the Gpugrid app_config.xml. The setup on my twin GPU system is as follows: <app> <name>PythonGPU</name> <gpu_versions> <gpu_usage>1.0</gpu_usage> <cpu_usage>0.49</cpu_usage> </gpu_versions> </app> And the setup for my triple GPU system is as follows: <app> <name>PythonGPU</name> <gpu_versions> <gpu_usage>1.0</gpu_usage> <cpu_usage>0.33</cpu_usage> </gpu_versions> </app> The purpose of this is to be able to run two or three concurrent Python GPU tasks respectively without reaching a full "1" CPU core (2 x 0.49 = 0.98; 3 x 0.33 = 0.99). Then, I manually control CPU usage by setting "Use at most XX % of the CPUs" in BOINC Manager for each system, according to its number of CPU cores. This allows me to run "N" Python GPU tasks concurrently alongside a fixed number of other CPU tasks, as desired. But as I said, the Gpugrid Python GPU app seems to take CPU resources as needed to process its tasks successfully... at the cost of slowing down the other CPU applications. | |

| ID: 58304 | Rating: 0 | rate:
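For hosts with a different number of GPUs, the same rule (a two-decimal cpu_usage that keeps the total just under one core) can be generated instead of worked out by hand. A Python sketch, assuming one PythonGPU task per GPU; as the post notes, these values only change how BOINC budgets CPUs, not what the app actually consumes.

```python
import xml.etree.ElementTree as ET


def cpu_usage_per_task(concurrent_tasks: int) -> float:
    """Largest two-decimal share that keeps the total below one full core.

    Working in integer hundredths keeps the rounding exact.
    """
    if concurrent_tasks < 1:
        raise ValueError("need at least one task")
    return (99 // concurrent_tasks) / 100


def app_config_xml(concurrent_tasks: int) -> str:
    """Build an app_config.xml body in the same shape as the post's examples."""
    app_config = ET.Element("app_config")
    app = ET.SubElement(app_config, "app")
    ET.SubElement(app, "name").text = "PythonGPU"
    gpu_versions = ET.SubElement(app, "gpu_versions")
    ET.SubElement(gpu_versions, "gpu_usage").text = "1.0"
    share = cpu_usage_per_task(concurrent_tasks)
    ET.SubElement(gpu_versions, "cpu_usage").text = f"{share:.2f}"
    return ET.tostring(app_config, encoding="unicode")


if __name__ == "__main__":
    print(app_config_xml(2))
```

`app_config_xml(2)` and `app_config_xml(3)` reproduce the 0.49 and 0.33 values used for the twin and triple GPU systems, wrapped in the `<app_config>` root element.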

| |

|

Yes, I use Process Lasso on all my Windows machines, but I haven't explored its use under Linux. | |

| ID: 58305 | Rating: 0 | rate:

| |

|

This message: 19:39:23 (6124): task /usr/bin/flock reached time limit 1800 indicates that, after 30 minutes, the installation of miniconda and the task environment setup had not finished. Consequently, Python is not found later on when the task is executed, since it is installed as one of the requirements of the miniconda environment: application ./gpugridpy/bin/python missing. Therefore it is not an error in itself; it just means that the miniconda setup went too slowly for some reason (in theory, 30 minutes should be enough time). Maybe the machine is slower than usual for some reason, or the connection is slow and dependencies are not being downloaded. We could extend this timeout, but normally, if 30 minutes is not enough for the miniconda setup, there is probably another underlying problem. | |

| ID: 58306 | Rating: 0 | rate:
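The two log lines can be read in sequence: the setup command was stopped at the 1800 s limit, and the interpreter it should have installed is therefore absent. A hypothetical Python re-creation of that sequence (the real task runs under BOINC's wrapper, whose code isn't shown here); `subprocess.run(..., timeout=...)` kills the child when the limit is reached.

```python
import os
import subprocess
from typing import List, Sequence


def run_setup(cmd: Sequence[str], timeout_s: float, expected_python: str) -> List[str]:
    """Run an environment-setup command under a time limit.

    Returns messages in the spirit of the wrapper log: a timeout (or failure),
    then the missing interpreter that the aborted setup never installed.
    """
    messages = []
    try:
        subprocess.run(cmd, check=True, timeout=timeout_s)
    except subprocess.TimeoutExpired:
        messages.append(f"setup reached time limit {timeout_s}")
    except subprocess.CalledProcessError as exc:
        messages.append(f"setup exited with code {exc.returncode}")
    if not os.path.exists(expected_python):
        messages.append(f"application {expected_python} missing")
    return messages
```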

| |

|

It seems to be a reasonably fast system. My guess is another type of permissions issue, which is blocking the Python install so that it hits the timeout, or the CPUs are being used too heavily and not giving enough resources to the extraction process. | |

| ID: 58307 | Rating: 0 | rate:

| |

|

There is no Linux equivalent of Process Lasso. | |

| ID: 58308 | Rating: 0 | rate:

| |

|

Well, that got me a long way. E: Unable to locate package python-qwt5-qt4 E: Unable to locate package python-configobj Unsurprisingly, the next step returns Traceback (most recent call last): File "./procexp.py", line 27, in <module> from PyQt5 import QtCore, QtGui, QtWidgets, uic ModuleNotFoundError: No module named 'PyQt5' htop, however, shows about 30 multitasking processes spawned from main, each using around 2% of a CPU core (varying by the second) at nice 19. At the time of inspection, that is. I'll go away and think about that. | |

| ID: 58309 | Rating: 0 | rate:

| |

|

I've one task now that had the same timeout issue getting python. The host was running fine on these tasks before and I don't know what has changed. | |

| ID: 58310 | Rating: 0 | rate:

| |

|

You might look into schedtool as an alternative. | |

| ID: 58311 | Rating: 0 | rate:
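schedtool (like taskset) works through the Linux CPU-affinity system call, which Python also exposes as os.sched_setaffinity. A sketch of confining a set of PIDs to chosen cores, for example to keep the Python task's worker processes off cores meant for CPU projects. Assumptions: Linux only; you collect the PIDs yourself (e.g. with pgrep); changing another user's processes (BOINC tasks run as the boinc user) needs root.

```python
import os
from typing import Dict, Iterable, Set


def restrict_cpus(pids: Iterable[int], cpus: Set[int]) -> Dict[int, Set[int]]:
    """Pin each PID to `cpus`; return the mask the kernel now reports.

    Linux only. The call applies to the single thread/process ID given:
    children forked afterwards inherit the new mask, but threads and
    processes that already exist keep theirs, so list every worker PID.
    """
    applied = {}
    for pid in pids:
        os.sched_setaffinity(pid, cpus)
        applied[pid] = os.sched_getaffinity(pid)
    return applied
```

For example, `restrict_cpus([1234, 1235], {0, 1, 2, 3})` (hypothetical PIDs) would keep those two processes on the first four cores.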

| |

I'd like to hear what others are using for ncpus for their Python tasks in their app_config files. Very interesting. Does this actually limit PythonGPU to using at most 5 CPU threads? Does it work better than: <app_config> <!-- i9-7980XE 18c36t 32 GB L3 Cache 24.75 MB --> <app> <name>PythonGPU</name> <plan_class>cuda1121</plan_class> <gpu_versions> <cpu_usage>1.0</cpu_usage> <gpu_usage>1.0</gpu_usage> </gpu_versions> <avg_ncpus>5</avg_ncpus> <cmdline>--nthreads 5</cmdline> <fraction_done_exact/> </app> </app_config> Edit 1: To answer my own question, I changed cpu_usage to 5 and am running a single PythonGPU WU with nothing else going on. The System Monitor shows 5 CPUs running in the 60 to 80% range, with all the other CPUs running in the 10 to 40% range. Is there any way to stop it from taking over one's entire computer? Edit 2: I turned on WCG and the group of 5 went up to 100%, and all the rest went to OPN in the 80 to 95% range. | |

| ID: 58317 | Rating: 0 | rate:

| |

|

No. Setting that value won't change how much CPU is actually used. It just tells BOINC how much of the CPU is being used, so that it can properly account for resources. | |

| ID: 58318 | Rating: 0 | rate:

| |

|

This morning, in a routine system update, I noticed that BOINC Client / Manager was updated from Version 7.16.17 to Version 7.18.1. | |

| ID: 58320 | Rating: 0 | rate:

| |

|

Which distro/repository are you using? I have Mint with Gianfranco Costamagna's PPA: that's usually the fastest to update, and I see v7.18.1 is being offered there as well - although I haven't installed it yet. | |

| ID: 58321 | Rating: 0 | rate:

| |

Which distro/repository are you using? I have Mint with Gianfranco Costamagna's PPA: that's usually the fastest to update, and I see v7.18.1 is being offered there as well - although I haven't installed it yet. It bombed out on the Rosetta pythons; they did not run at all (a VBox problem undoubtedly). And it failed all the validations on QuChemPedIA, which does not use VirtualBox on the Linux version. But it works OK on CPDN, WCG/ARP and Einstein/FGRBP (GPU). All were on Ubuntu 20.04.3. So be prepared to bail out if you have to. | |

| ID: 58322 | Rating: 0 | rate:

| |

Which distro/repository are you using? I'm using the regular repository for Ubuntu 20.04.3 LTS. I took a screenshot of the offered updates before updating. | |

| ID: 58324 | Rating: 0 | rate:

| |

|

My PPA gives slightly more information on the available update: | |

| ID: 58325 | Rating: 0 | rate:

| |

|

OK, I've taken a deep breath and enough coffee - applied all updates. [Unit] Note the line I've picked out. That starts with a # sign, for comment, so it has no effect: PrivateTmp is undefined in this file. New work became available just as I was preparing to update, so I downloaded a task and immediately suspended it. After the updates, and enough reboots to get my NVidia drivers functional again (it took three this time), I restarted BOINC and allowed the task to run. Task 32736884 Our old enemy "INTERNAL ERROR: cannot create temporary directory!" is back. Time for a systemd over-ride file, and to go fishing for another task. Edit - updated the file, as described in message 58312, and got task 32736938. That seems to be running OK, having passed the 10% danger point. Result will be in sometime after midnight. | |

| ID: 58327 | Rating: 0 | rate:

| |

|

I see your task completed normally with the PrivateTmp=true uncommented in the service file. | |

| ID: 58328 | Rating: 0 | rate:

| |

|

No, that's the first time I've seen that particular warning. The general structure is right for this machine, but it doesn't usually reach as high as 11 - GPUGrid normally gets slot 7. Whatever - there were some tasks left waiting after the updates and restarts. | |

| ID: 58329 | Rating: 0 | rate:

| |

|

Oh, I was not aware of this warning. | |

| ID: 58330 | Rating: 0 | rate:

| |

|

Yes, this experiment uses a slightly modified version of the algorithm, which should be faster. It runs the same number of interactions with the reinforcement learning environment, so the credit amount is the same. | |

| ID: 58331 | Rating: 0 | rate:

| |

|

I'll take a look at the contents of the slot directory, next time I see a task running. You're right - the entire '/var/lib/boinc-client/slots/n/...' structure should be writable, to any depth, by any program running under the boinc user account. | |

| ID: 58332 | Rating: 0 | rate:

| |

|

The directory paths are defined as environment variables in the Python script. # Set wandb paths Then the directories are created by the wandb Python package (which handles logging of relevant training data). I suspect the permissions could be defined when the directories are created. So it is not a BOINC problem. I will change the paths in future jobs to: # Set wandb paths Note that "os.getcwd()" is the working directory, so "/var/lib/boinc-client/slots/11/" in this case. | |

| ID: 58333 | Rating: 0 | rate:
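The actual lines under "# Set wandb paths" aren't shown in the post. As a hedged sketch of the same idea, the function below points wandb's documented WANDB_DIR, WANDB_CONFIG_DIR and WANDB_CACHE_DIR environment variables at folders inside a base directory such as os.getcwd(), the BOINC slot. The function name and folder names are arbitrary choices for illustration, and the variables should be set before wandb.init() runs.

```python
import os
from typing import Dict


def point_wandb_at(base_dir: str) -> Dict[str, str]:
    """Point wandb's run, config and cache folders inside `base_dir`.

    WANDB_DIR, WANDB_CONFIG_DIR and WANDB_CACHE_DIR are wandb's documented
    environment variables; the sub-folder names below are arbitrary.
    """
    paths = {
        "WANDB_DIR": os.path.join(base_dir, "wandb_run"),
        "WANDB_CONFIG_DIR": os.path.join(base_dir, "wandb_config"),
        "WANDB_CACHE_DIR": os.path.join(base_dir, "wandb_cache"),
    }
    for variable, path in paths.items():
        os.makedirs(path, exist_ok=True)  # fail early if the slot isn't writable
        os.environ[variable] = path
    return paths
```

In a task script this would be called as `point_wandb_at(os.getcwd())` before wandb is initialised, so nothing is written outside the slot directory.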

| |

Oh, I was not aware of this warning. What happens if that directory doesn't exist? Several of us run BOINC in a different location. Since it's in /var/lib/, the process won't have permission to create the directory, unless maybe BOINC is run as root. | |

| ID: 58334 | Rating: 0 | rate:

| |

|

'/var/lib/boinc-client/' is the default BOINC data directory for Ubuntu BOINC service (systemd) installations. It most certainly exists, and is writable, on my machine, which is where Keith first noticed the error message in the report of a successful run. During that run, much will have been written to .../slots/11 | |

| ID: 58335 | Rating: 0 | rate:

| |

|

I'm aware it's the default location on YOUR computer, and on others running the standard Ubuntu repository installer. But the message from abouh sounded like this directory was hard-coded, since he gave the entire path. For folks running BOINC in another location, this directory will not be the same. If it uses a relative file path, then it's fine, but I was seeking clarification. | |

| ID: 58336 | Rating: 0 | rate:

| |

|

Hard path coding was removed before this most recent test batch. | |

| ID: 58337 | Rating: 0 | rate:

| |

/var/lib/boinc-client/ does not exist on my system. /var/lib is write protected, creating a directory there requires elevated privileges, which I'm sure happens during install from the repository. Yes. I do the following dance whenever setting up BOINC from Ubuntu Software or LocutusOfBorg:
• Join the root group: sudo adduser (Username) root
• Join the BOINC group: sudo adduser (Username) boinc
• Allow access by all: sudo chmod -R 777 /etc/boinc-client
• Allow access by all: sudo chmod -R 777 /var/lib/boinc-client
I also do these to allow monitoring by BoincTasks over the LAN on my Win10 machine:
• Copy “cc_config.xml” to /etc/boinc-client folder
• Copy “gui_rpc_auth.cfg” to /etc/boinc-client folder
• Reboot | |

| ID: 58338 | Rating: 0 | rate:

| |

|

The directory should be created wherever you run BOINC, that is not a problem. | |

| ID: 58339 | Rating: 0 | rate:

| |

I do the following dance whenever setting up BOINC from Ubuntu Software or LocutusOfBorg: By doing so, you nullify your system's security provided by different access rights levels. This practice should be avoided at all costs. | |

| ID: 58340 | Rating: 0 | rate:

| |

|

I see that the BOINC PPA distribution has been updated yet again - still v7.18.1, but a new build timed at 17:10 yesterday, 4 February. | |

| ID: 58341 | Rating: 0 | rate:

| |

I do the following dance whenever setting up BOINC from Ubuntu Software or LocutusOfBorg: By doing so, you nullify your system's security provided by different access rights levels. I am on an isolated network behind a firewall/router. No problem at all. | |

| ID: 58342 | Rating: 0 | rate:

| |

I am on an isolated network behind a firewall/router. No problem at all. That qualifies as famous last words. | |

| ID: 58343 | Rating: 0 | rate:

| |

I see that the BOINC PPA distribution has been updated yet again - still v7.18.1, but a new build timed at 17:10 yesterday, 4 February. All I know is that the new build does not work at all on Cosmology with VirtualBox 6.1.32. A work unit just suspends immediately on startup. | |

| ID: 58344 | Rating: 0 | rate:

| |

I am on an isolated network behind a firewall/router. No problem at all. That qualifies as famous last words. It has lasted for many years. EDIT: They are all dedicated crunching machines. I have only BOINC and Folding on them. If they are a problem, I should pull out now. | |

| ID: 58345 | Rating: 0 | rate:

| |

I see that the BOINC PPA distribution has been updated yet again - still v7.18.1, but a new build timed at 17:10 yesterday, 4 February. My Ubuntu 20.04.3 machines updated themselves this morning to v7.18.1 for the 3rd time. (available version: 7.18.1+dfsg+202202041710~ubuntu20.04.1) | |

| ID: 58346 | Rating: 0 | rate:

| |

In your scenario, it's not a problem. I am on an isolated network behind a firewall/router. No problem at all. That qualifies as famous last words. It's dangerous to suggest that lazy solution to everyone, as their computers could be in a very different scenario. https://pimylifeup.com/chmod-777/ | |

| ID: 58347 | Rating: 0 | rate:

| |

In your scenario, it's not a problem. I am on an isolated network behind a firewall/router. No problem at all. That qualifies as famous last words. You might as well carry your warnings over to the Windows crowd, which doesn't have much security at all. | |

| ID: 58348 | Rating: 0 | rate:

| |

You might as well carry your warnings over to the Windows crowd, which doesn't have much security at all. Excuse me? | |

| ID: 58349 | Rating: 0 | rate:

| |

You might as well carry your warnings over to the Windows crowd, which doesn't have much security at all. Excuse me? What comparable isolation do you get in Windows from one program to another? Or what security are you talking about? Port security from external sources? | |

| ID: 58350 | Rating: 0 | rate:

| |

Security descriptors were introduced in the NTFS 1.2 file system, released in 1996 with Windows NT 4.0. The access control lists in NTFS are more complex in some aspects than in Linux. All modern Windows versions use NTFS by default. What comparable isolation do you get in Windows from one program to another? You might as well carry your warnings over to the Windows crowd, which doesn't have much security at all. Excuse me? User Account Control was introduced in 2007 with Windows Vista (apps don't run as administrator, even if the user has administrative privileges, until the user elevates them through an annoying popup). Or what security are you talking about? Port security from external sources? Windows Firewall was introduced with Windows XP SP2 in 2004. This is my last post in this thread about (undermining) filesystem security. | |

| ID: 58351 | Rating: 0 | rate:

| |

I see that the BOINC PPA distribution has been updated yet again - still v7.18.1, but a new build timed at 17:10 yesterday, 4 February. Updated my second machine. It appears that this re-release is NOT related to the systemd problem: the PrivateTmp=true line is still commented out. Re-apply the fix (#1) from message 58312 after applying this update, if you wish to continue running the Python test apps. | |

| ID: 58352 | Rating: 0 | rate:

| |

|

I think you are correct, except in the term "undermining", which is not appropriate for isolated crunching machines. There is a billion-dollar AV industry for Windows. Apparently someone has figured out how to undermine it there. But I agree that no more posts are necessary. | |

| ID: 58353 | Rating: 0 | rate:

| |

|

While chmod 777-ing is bad practice in general, there's little harm in blowing up the BOINC directory like that. The worst that can happen is that you modify or delete a necessary file by accident and break BOINC. Just reinstall and learn the lesson. Not the end of the world in this instance. | |

| ID: 58354 | Rating: 0 | rate:

| |

I see that the BOINC PPA distribution has been updated yet again - still v7.18.1, but a new build timed at 17:10 yesterday, 4 February. Ubuntu 20.04.3 LTS is still on the older 7.16.6 version. apt list boinc-client Listing... Done boinc-client/focal 7.16.6+dfsg-1 amd64 | |

| ID: 58355 | Rating: 0 | rate:

| |

I see that the BOINC PPA distribution has been updated yet again - still v7.18.1, but a new build timed at 17:10 yesterday, 4 February. My Ubuntu 20.04.3 machines updated themselves this morning to v7.18.1 for the 3rd time. Curious how your Ubuntu release got this newer version. I did a sudo apt update, then apt list boinc-client and apt show boinc-client, and still come up with the older 7.16.6 version. | |

| ID: 58356 | Rating: 0 | rate:

| |

|

I think they use a different PPA, not the standard Ubuntu version. | |

| ID: 58357 | Rating: 0 | rate:

| |

It's from the PPA at http://ppa.launchpad.net/costamagnagianfranco/boinc/ubuntu - My Ubuntu 20.04.3 machines updated themselves this morning to v7.18.1 for the 3rd time. Sorry for the confusion. | |

| ID: 58358 | Rating: 0 | rate:

| |

I think they use a different PPA, not the standard Ubuntu version. You're right. I've checked, and this is my complete repository listing. There are new pending updates for the BOINC package, but I've recently caught a new ACEMD3 ADRIA task, and I'm not updating until it has finished and been reported. My experience warns that these tasks are highly prone to fail if something is changed while processing. | |

| ID: 58359 | Rating: 0 | rate:

| |

Which distro/repository are you using? Ah. Your reply here gave me a different impression. Slight egg on face, but both our Linux update manager screenshots fail to give source information in their consolidated update lists. Maybe we should put in a feature request? | |

| ID: 58360 | Rating: 0 | rate:

| |

|

ACEMD3 task finished on my original machine, so I updated BOINC from PPA 2022-01-30 to 2022-02-04. | |

| ID: 58361 | Rating: 0 | rate:

| |

|

Got a new task (task 32738148). It's running normally, which confirms the systemd override is preserved. wandb: WARNING Path /var/lib/boinc-client/slots/7/.config/wandb/wandb/ wasn't writable, using system temp directory (we're back in slot 7 as usual). There are six folders created in slot 7: agent_demos, gpugridpy, int_demos, monitor_logs, python_dependencies, ROMS. There are no hidden folders, and certainly no .config. The wandb data is in /tmp/systemd-private-f670b90d460b4095a25c37b7348c6b93-boinc-client.service-7Jvpgh/tmp, which contains 138 folders, including one called simply wandb. wandb contains: debug-internal.log, debug.log, latest-run, run-20220206_163543-1wmmcgi5. The first two are files, the last two are folders. There is no subfolder called wandb, so no recursion such as the warning message suggests. Hope that helps. | |
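The warning above reads as if wandb tests whether its preferred directory is writable and otherwise falls back to the system temp directory. The sketch below is a hypothetical illustration of that pattern, not wandb's actual code, and the name pick_writable_dir is made up. Under the BOINC systemd unit with PrivateTmp=true, the /tmp that the task sees is a private namespace, which would explain why the data shows up under /tmp/systemd-private-...-boinc-client.service-.../tmp on the host.

```python
import os
import tempfile


def pick_writable_dir(preferred: str) -> str:
    """Return `preferred` if it can be created and written to; otherwise
    fall back to the system temp directory (hypothetical helper)."""
    try:
        # Create the directory (and any parents) if needed; no error if it exists.
        os.makedirs(preferred, exist_ok=True)
    except OSError:
        # e.g. permission denied, read-only mount, or a parent that is a file
        return tempfile.gettempdir()
    if os.access(preferred, os.W_OK):
        return preferred
    # The directory exists, but this process is not allowed to write to it.
    return tempfile.gettempdir()
```

tempfile.gettempdir() honours TMPDIR, TEMP and TMP before trying /tmp, so under a systemd service with PrivateTmp=true the fallback usually lands in that service's private /tmp.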

| ID: 58362 | Rating: 0 | rate:

| |

|

Thanks! The content of the slot directory is correct. | |

| ID: 58363 | Rating: 0 | rate:

| |

|

wandb: Run data is saved locally in /var/lib/boinc-client/slots/7/wandb/run-20220209_082943-1pdoxrzo | |

| ID: 58364 | Rating: 0 | rate:

| |

|

Great, thanks a lot for the confirmation. So now it seems the directory is the appropriate one. | |

| ID: 58365 | Rating: 0 | rate:

| |

|

Pretty happy to see that my little Quadro K620s could actually handle one of the ABOU work units. Successfully ran one in under 31 hours. It didn't hit the memory too hard, which helps. The K620 has a DDR3 memory bus so the bandwidth is pretty limited. Traceback (most recent call last): File "run.py", line 40, in <module> assert os.path.exists('output.coor') AssertionError 11:22:33 (1966061): ./gpugridpy/bin/python exited; CPU time 0.295254 11:22:33 (1966061): app exit status: 0x1 11:22:33 (1966061): called boinc_finish(195) | |

| ID: 58367 | Rating: 0 | rate:

| |

|

All tasks are erroring out on this machine: https://www.gpugrid.net/results.php?hostid=591484 | |

| ID: 58368 | Rating: 0 | rate:

| |

|

I got two of those yesterday as well. They are described as "Anaconda Python 3 Environment v4.01 (mt)" - declared to run as multi-threaded CPU tasks. I do have working GPUs (on host 508381), but I don't think these tasks actually need a GPU. | |

| ID: 58369 | Rating: 0 | rate:

| |

|

We were running those kinds of tasks a year ago. Looks like the researcher has made an appearance again. | |

| ID: 58370 | Rating: 0 | rate:

| |

|

I just downloaded one, but it errored out before I could even catch it starting. It ran for 3 seconds, required four cores of a Ryzen 3950X on Ubuntu 20.04.3, and had an estimated time of 2 days. I think they have some work to do. | |

| ID: 58371 | Rating: 0 | rate:

| |

|

PPS - It ran for two minutes on an equivalent Ryzen 3950X running BOINC 7.16.6, and then errored out. | |

| ID: 58372 | Rating: 0 | rate:

| |

|

I just ran 4 of the Python CPU task WUs on my Ryzen 7 5800H (Ubuntu 20.04.3 LTS, 16 GB RAM), each on 4 CPU threads, all at the same time. The first 0.6% took over 10 minutes, then they jumped to 10% and continued a while longer until, 17 minutes in, they all errored out at more or less the same point in the task. Here is one example: 32743954 | |

| ID: 58373 | Rating: 0 | rate:

| |

|

A RAIMIS MT task - which accounts for the 4 threads. Run NVIDIA GeForce RTX 3060 Laptop GPU (4095MB) Traceback (most recent call last): | |

| ID: 58374 | Rating: 0 | rate:

| |

|

I am running two of the Anacondas now. They each reserve four threads, but are apparently only using one of them, since BoincTasks shows 25% CPU usage. | |

| ID: 58380 | Rating: 0 | rate:

| |

|

Hey Richard. To what extent is my GPU's memory involved in a CPU task? | |

| ID: 58381 | Rating: 0 | rate:

| |

Hey Richard. To what extent is my GPU's memory involved in a CPU task? It shouldn't be - that's why I drew attention to it. I think both AbouH and RAIMIS are experimenting with different applications, which exploit both GPUs and multiple CPUs. It isn't at all obvious how best to manage a combination like that under BOINC - the BOINC developers only got as far as thinking about either/or, not both together. So far, Abou seems to have got further down the road, but I'm not sure how much further development is required. We watch and wait, and help where we can. | |

| ID: 58382 | Rating: 0 | rate:

| |

|

My first two Anacondas ended OK after 31 hours. But they were _2 and _3. | |

| ID: 58383 | Rating: 0 | rate:

| |

I am running a _4 now. After 18 minutes it is OK, but the CPU usage is still trending down to a single core after starting out high. It stopped making progress after running for a day and reaching 26% complete, so I aborted it. I will wait until they fix things before jumping in again. But my results were different from the others', so maybe it will do them some good. | |

| ID: 58384 | Rating: 0 | rate:

| |

|

Hello everyone! I am sorry for the late reply. | |

| ID: 58417 | Rating: 0 | rate:

| |

|

Is this a record? 08/03/2022 17:57:22 | GPUGRID | Started download of windows_x86_64__cuda1131.tar.bz2.e9a2e4346c92bfb71fae05c8321e9325 08/03/2022 18:35:03 | GPUGRID | Finished download of windows_x86_64__cuda1131.tar.bz2.e9a2e4346c92bfb71fae05c8321e9325 08/03/2022 18:35:26 | GPUGRID | Starting task e1a4-ABOU_pythonGPU_beta2_test-0-1-RND8371_5 08/03/2022 18:36:21 | GPUGRID | [sched_op] Reason: Unrecoverable error for task e1a4-ABOU_pythonGPU_beta2_test-0-1-RND8371_5 08/03/2022 18:36:21 | GPUGRID | Computation for task e1a4-ABOU_pythonGPU_beta2_test-0-1-RND8371_5 finished Edit 2 - "application C:\Windows\System32\tar.exe missing". I can deal with that. Download from https://sourceforge.net/projects/gnuwin32/files/tar/ NO - that wasn't what it said it was. Looking again. | |

| ID: 58464 | Rating: 0 | rate:

| |

|

No, this isn't working. Apparently, tar.exe is included in Windows 10 - but I'm still running Windows 7/64, and a copy from a W10 machine won't run ("Not a valid Win32 application"). Giving up for tonight - I've got too much waiting to juggle. I'll try again with a clearer head tomorrow. | |

| ID: 58465 | Rating: 0 | rate:

| |

|

Yeah, the estimates must have been astronomical: I'm seeing over 2 months of time left at 3/4 completion on 2 tasks. | |

| ID: 58466 | Rating: 0 | rate:

| |

|

No need to go back to the drawing board, in principle. Here is what is happening: | |

| ID: 58467 | Rating: 0 | rate:

| |

|

In this new version of the app, we send the whole conda environment in a compressed file ONLY ONCE and unpack it on the machine. The conda environment is what weighs around 2.5 GB (depending on whether the machine has cuda10 or cuda11). However, as long as the environment remains the same, there is no need to re-download it for every job. This is how the acemd app works. | |
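The download-once behaviour described above can be pictured as a cache keyed by a checksum of the archive: unpack only when the archive differs from the one that produced the environment already on disk. This is a minimal sketch under assumptions; the marker file name .env_md5 and the function names are hypothetical, and the app's actual mechanism is not described in the thread.

```python
import hashlib
import os
import tarfile

MARKER = ".env_md5"  # hypothetical marker recording which archive was unpacked


def file_md5(path: str, chunk_size: int = 1 << 20) -> str:
    """MD5 of a file, read in chunks so a 2.5 GB archive never sits in memory."""
    h = hashlib.md5()
    with open(path, "rb") as f:
        for chunk in iter(lambda: f.read(chunk_size), b""):
            h.update(chunk)
    return h.hexdigest()


def ensure_environment(archive: str, target_dir: str) -> bool:
    """Unpack `archive` into `target_dir` unless the same archive was already
    unpacked there. Returns True if it unpacked, False if it reused."""
    digest = file_md5(archive)
    marker = os.path.join(target_dir, MARKER)
    if os.path.exists(marker):
        with open(marker) as f:
            if f.read().strip() == digest:
                return False  # environment unchanged: reuse it
    os.makedirs(target_dir, exist_ok=True)
    # "r:*" lets tarfile detect gzip/bzip2/xz compression by itself.
    with tarfile.open(archive, "r:*") as tar:
        if hasattr(tarfile, "data_filter"):
            tar.extractall(target_dir, filter="data")  # safer extraction where supported
        else:
            tar.extractall(target_dir)
    # Write the marker last, so an interrupted unpack is retried next time.
    with open(marker, "w") as f:
        f.write(digest)
    return True
```

Hashing a 2.5 GB file on every job costs some seconds of I/O; comparing a checksum shipped alongside the archive would avoid that, at the price of trusting the sender.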

| ID: 58468 | Rating: 0 | rate:

| |

|

One problem we are facing, as Richard mentioned, is that there is no tar.exe before W10. On W10 we get: tar.exe: Error opening archive: Can't initialize filter; unable to run program "bzip2 -d". In theory tar.exe is able to handle bzip2 files. We suspect it could be a problem with the PATH environment variable (which we will test). Also, tar.gz could be a more compatible format for Windows. | |
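The error text suggests that tar.exe is handing bzip2 decompression to an external bzip2 program that it cannot find. One way to probe the PATH hypothesis is to identify the archive's compression from its magic bytes and check whether a matching executable is on PATH. The sketch below is a hypothetical diagnostic, not part of the GPUGRID app; the assumption that tar.exe falls back to an external program comes only from the error message.

```python
import shutil
from typing import Optional

# Leading bytes of each compressed format, mapped to the external program
# a tar front-end might invoke if it lacks built-in support (assumption).
MAGIC = {
    b"BZh": "bzip2",
    b"\x1f\x8b": "gzip",
}


def compression_of(path: str) -> Optional[str]:
    """Identify the compression of `path` from its first bytes, or None."""
    with open(path, "rb") as f:
        head = f.read(3)
    for magic, program in MAGIC.items():
        if head.startswith(magic):
            return program
    return None


def missing_decompressor(path: str) -> Optional[str]:
    """Name of the external decompressor this archive would need that is
    NOT found on PATH; None if it is found or not needed."""
    program = compression_of(path)
    if program is None or shutil.which(program) is not None:
        return None
    return program
```

If missing_decompressor returns "bzip2" for a .tar.bz2 file on an affected Windows 10 host, that would support the PATH explanation. Python's own tarfile module decompresses gzip and bzip2 in-process, so it does not depend on external programs at all.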

| ID: 58469 | Rating: 0 | rate:

| |

|

Don't worry, it's only my own personal drawing board that I'm going back to! | |

| ID: 58470 | Rating: 0 | rate:

| |

|

Thank you very much! I will send a small batch of test jobs as soon as I can to check whether, on Windows 10, the bzip2 error is caused by an erroneous PATH variable. The next step will be trying tar.gz, as mentioned. | |

| ID: 58471 | Rating: 0 | rate:

| |

|

How about some checkpoints? I had a Python task that was nearly complete when an ACEMD4 task downloaded next, with something like an 8-billion-day ETA. It interrupted the Python task, and 14 hours of work went back to 10%. I only have a 0.05-day work queue on that client, so the Python task was at least 95% complete. | |

| ID: 58472 | Rating: 0 | rate:

| |

|

Was it a PythonGPU task for Linux, mmonnin? I have checked your recent jobs, and they seem to have been successful. | |

| ID: 58473 | Rating: 0 | rate:

| |

|

I have a python task for Linux running, recently started. CPU time 00:33:10 CPU time since checkpoint 00:01:33 Elapsed time 00:33:27 but that isn't the acid test: the question is whether it can read back the checkpoint data files when restarted. I'll pause it after a checkpoint, let the machine finish the last 20 minutes of the task it booted aside, and see what happens on restart. Sometimes BOINC takes a little while to update progress after a pause - you have to watch it, not just take the first figure you see. Results will be reported in task 32773760 overnight, but I'll post here before that. Edit - looks good so far: restart.chk, progress, run.log all present with a good timestamp. | |
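The test described above checks the part that matters: whether a restarted task can read its checkpoint back. A minimal sketch of that write/read cycle follows, assuming a JSON payload. The real restart.chk format is not described in the thread, and the function names are hypothetical. The idea is to write to a temporary file and rename it into place, so an interruption mid-write leaves the previous checkpoint intact, then resume from the saved step on restart. A native BOINC app would also tell the client when a checkpoint is complete, using boinc_checkpoint_completed() from the C API.

```python
import json
import os
import tempfile
from typing import Optional

CHECKPOINT = "restart.chk"  # name from the thread; JSON contents are an assumption


def save_checkpoint(state: dict, path: str = CHECKPOINT) -> None:
    """Write `state` to a temp file, then rename it over the old checkpoint."""
    directory = os.path.dirname(os.path.abspath(path))
    fd, tmp_path = tempfile.mkstemp(dir=directory, prefix=".chk-")
    try:
        with os.fdopen(fd, "w") as f:
            json.dump(state, f)
            f.flush()
            os.fsync(f.fileno())  # make sure the bytes reach disk before the rename
        os.replace(tmp_path, path)  # atomic rename on POSIX file systems
    except BaseException:
        if os.path.exists(tmp_path):
            os.remove(tmp_path)
        raise


def load_checkpoint(path: str = CHECKPOINT) -> Optional[dict]:
    """Return the saved state, or None when starting fresh."""
    try:
        with open(path) as f:
            return json.load(f)
    except FileNotFoundError:
        return None


def run(total_steps: int, path: str = CHECKPOINT, every: int = 100) -> int:
    """Toy work loop that resumes from the last checkpoint; returns the final step."""
    state = load_checkpoint(path) or {"step": 0}
    step = state["step"]
    while step < total_steps:
        step += 1  # one unit of placeholder work
        if step % every == 0 or step == total_steps:
            save_checkpoint({"step": step}, path)
    return step
```

Pausing between checkpoints loses at most `every` steps of work. It should never send a task back to an early percentage, as happened in the 14-hour case above.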

| ID: 58474 | Rating: 0 | rate:

| |

|

Perfect, thanks! It can indeed take a little while for progress to update after a pause. | |

| ID: 58475 | Rating: 0 | rate:

| |