Message boards : Server and website : LR WUs ready-to-send


Oops... Long runs = 1

ID: 40078

Sometimes experiments finish. That's a good thing.

ID: 40079

Perhaps, though, some notice or discussion of this on the News board would be appreciated.

ID: 40081

I think it will have to wait until early next week.

ID: 40082

Thomas, I expect that will be the case.

ID: 40083

I'm not part of the GPUG crew, so I don't know, and I don't want to speculate on this subject, Barry.

ID: 40084

Well - in the last 30 minutes I've done a BOINC "update" on my two hosts and got a new WU each time. Perhaps the server status is telling porkies... :)

ID: 40085

> Well - in the last 30 minutes I've done a BOINC "update" on my two hosts and got a new WU each time. Perhaps the server status is telling porkies... :)

It was for this reason that I opened this thread: to get feedback from other GPUG volunteers. Thanks so much, tomba, and happy weekend to you :)

____________
[CSF] Thomas H.V. Dupont
Founder of the team CRUNCHERS SANS FRONTIERES 2.0
www.crunchersansfrontieres

ID: 40086

> Thanks so much tomba and happy weekend to you :)

And a happy weekend to you too, Thomas. Greetings from the hills above St Tropez.

ID: 40087

> Thanks so much tomba and happy weekend to you :)

In the language of Molière! Thanks, tomba!

ID: 40088

> In the language of Molière! Thanks, tomba!

ID: 40089

> Well - in the last 30 minutes I've done a BOINC "update" on my two hosts and got a new WU each time. Perhaps the server status is telling porkies... :)

Yes -- I see that new work was released shortly after my whining. The power I have! <rueful self-deprecating smile>

ID: 40094

4 systems sitting idle and I was trying to make the push for 100,000,000 by tomorrow midnight. That ain't happening now.

ID: 40100

905-NOELIA_PNP-9-10-RND6302_1

ID: 40103

Out of 7 NVIDIA GPUs, I have 3 idle. I was able to bog down the other 4 so their work lasts longer (until Monday) and they won't go completely idle. Each one checks for tasks every so often, and the only tasks I get are ones that timed out or errored out on other people's computers. Oddly enough, because of the timing, the one computer with 2 cards has 4 tasks: 2 running and 2 waiting. Depending on when new tasks come out, I may now make the 100,000 on Monday or Tuesday.

ID: 40110

I've posted something similar on another thread, but just to reiterate: Einstein@Home has GPU work available, even though the BOINC project selection page doesn't make that clear. So that's my backup project, set at 0 priority. Much better for the GPUs to work somewhere than to just let them idle while we wait for more GPUgrid resends.

ID: 40111

Hahaha, the others are still idle for lack of GPUGrid work, while the one with WUs to spare just got a 3rd spare.

ID: 40112

> .....Is there a way to transfer the WUs from one PC to another over the network or are they assigned and stuck?

While someone may be able to make a theoretical argument about transferring tasks from one computer to another, they should be for all practical purposes considered "assigned and stuck."

ID: 40115

thx

ID: 40116

It looks like they might have just put new WUs together. I just filled up all my GPUs with short and long runs all at once.

ID: 40118

I think you must have gotten a few resends at once. Certainly the servers are still running low.

ID: 40119

|

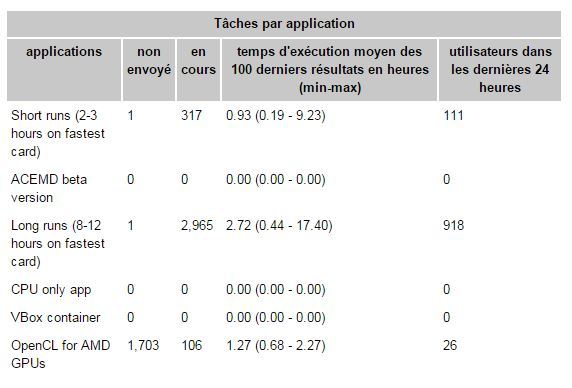

Both queues needs to be refilled. | |

| ID: 40128 | Rating: 0 | rate:

| |

:D

ID: 40129

Always happy to feed the beast with some fresh WU :D

ID: 40130

Thanks for the new WU's. While my boxes were idle, BarryAZ passed me on RAC. Time to fix that.

ID: 40131

> Always happy to feed the beast with some fresh WU :D

Thanks, the beasts are happy. How about WUs for the kitties? (Short runs)

ID: 40132

> Thanks for the new WU's.

+2 Thanks, Gerard! :)

ID: 40134

Short runs are back!

ID: 40135

> Thanks for the new WU's. While my boxes were idle, BarryAZ passed me on RAC. Time to fix that.

Brian, set up some backup projects (like SETI and Einstein@Home) with a resource share of 0, so your GPUs stay busy but pick up GPUGrid whenever they can!

ID: 40138
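A share-0 project acting as a fallback can be pictured with a small toy model. This is a hypothetical Python sketch, not BOINC's actual work-fetch code: `Project` and `pick_project` are made-up names, and the only rule it encodes is the one described in this thread, namely that a project with a resource share of 0 is asked for work only when no project with a positive share has any to give. BOINC's real scheduler also weighs project priority, buffer sizes and per-device limits, all of which are ignored here.

```python
# Hypothetical toy model of a BOINC-style "backup project" fallback.
# Assumption: a project with resource_share == 0 is only used when no
# project with a positive share currently has work. This is NOT BOINC's
# real work-fetch algorithm, which also tracks priorities and buffers.
from dataclasses import dataclass
from typing import List, Optional


@dataclass
class Project:
    name: str
    resource_share: float
    has_work: bool


def pick_project(projects: List[Project]) -> Optional[str]:
    """Return the name of the project an idle GPU would ask for work, or None."""
    # Primary projects: positive share and work available.
    primaries = [p for p in projects if p.resource_share > 0 and p.has_work]
    if primaries:
        # Simplification: just take the project with the largest share.
        return max(primaries, key=lambda p: p.resource_share).name
    # Backup projects (share 0) are only consulted when every primary is dry.
    backups = [p for p in projects if p.resource_share == 0 and p.has_work]
    return backups[0].name if backups else None


if __name__ == "__main__":
    projects = [
        Project("GPUGRID", resource_share=100, has_work=False),
        Project("Einstein@Home", resource_share=0, has_work=True),
    ]
    print(pick_project(projects))  # Einstein@Home: the GPUGRID queue is empty
    projects[0].has_work = True
    print(pick_project(projects))  # GPUGRID: back to the primary project
```

The point of the design is that the backup never competes with GPUGRID while GPUGRID has work; in this model it only fills gaps like the one this thread is about.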

Attached the BOINC client to Einstein@Home with a resource share of 0, and removed CPU tasks from the project preferences. Let's see how it works.

ID: 40143

> Always happy to feed the beast with some fresh WU :D

My beasts are hungry again...

ID: 40144

Sniff, sniff...

ID: 40145

I'm preparing a new set of WUs. In a couple of hours the equilibration should have finished, and they will be launched. Stay tuned!

ID: 40146

I got one long Gerard ten minutes ago.

ID: 40147

> I got one long Gerard ten minutes ago.

+1 :)

> I'm preparing a new set of WU. In a couple of hours the equilibration should have finished and they will be launched. Stay tuned!

Thanks, Gerard!

ID: 40148

Why exactly is this happening again?

ID: 40150

Weekend: sniff, sniff.

ID: 40160

Guys:

ID: 40161

I just sent some more simulations for the weekend. Remember that BOINC, and our project in particular as it is set up right now, will respawn approximately 1 WU for each correctly completed WU. Therefore you shouldn't look at the unsent WUs but at the "in progress" queue to assess the load on GPUGRID. In other words, if you see no WUs in the unsent queue, it just means that GPUGRID is running at its maximum speed, and as soon as you finish your current workunit a new one will be generated in the immediate future. Happy crunching!

ID: 40162
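The respawn behaviour described above can be illustrated with a toy simulation. This is a hypothetical Python sketch, not GPUGRID's server code: `simulate`, `chains` and `hosts` are made-up names, and it assumes a fixed number of simulation chains, one WU per chain in flight at a time, and that every completed WU spawns exactly one follow-on WU for its chain (the post says "approximately", so real numbers will drift). Under those assumptions, unsent plus in-progress always equals the number of chains, so an empty unsent queue only means every chain is currently out on some host.

```python
# Hypothetical toy model of a respawning work queue (not GPUGRID's code).
# Assumptions: `chains` independent simulations, each with one WU in
# flight at a time; every completed WU respawns exactly one new WU.
from collections import deque
from typing import List, Tuple


def simulate(chains: int, hosts: int, steps: int) -> List[Tuple[int, int]]:
    """Return (unsent, in_progress) counts after hosts pull work at each step."""
    unsent = deque(range(chains))  # one initial WU per chain
    in_progress: List[int] = []
    history = []
    for _ in range(steps):
        # Idle hosts pull WUs from the unsent queue until either runs out.
        while unsent and len(in_progress) < hosts:
            in_progress.append(unsent.popleft())
        history.append((len(unsent), len(in_progress)))
        # Simplification: every running WU finishes this step and
        # respawns one follow-on WU for the same chain.
        unsent.extend(in_progress)
        in_progress.clear()
    return history


if __name__ == "__main__":
    # More hosts than chains: the unsent queue is empty, yet every chain is running.
    print(simulate(chains=10, hosts=50, steps=3))   # [(0, 10), (0, 10), (0, 10)]
    # More chains than hosts: WUs wait unsent because every host is busy.
    print(simulate(chains=100, hosts=50, steps=3))  # [(50, 50), (50, 50), (50, 50)]
```

In the first case 40 hosts sit idle even though every chain is being crunched; in this model, only launching new simulations (more chains) raises the in-progress count.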
